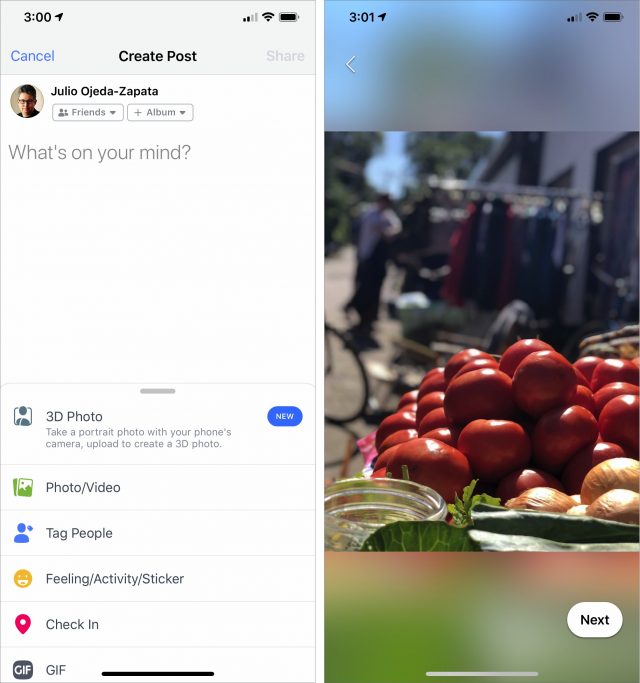

Photo by Julio Ojeda-Zapata

Google and Facebook Use the iPhone’s Portrait Mode for Fun Effects

Apple has a hit in Portrait mode, the Camera app feature that uses computational photography to keep foreground subjects in focus while blurring the backgrounds—an effect sometimes called “bokeh.” Initially, Portrait mode required one of Apple’s dual-camera models, but the company managed to tap the processing power of this year’s A12 Bionic chip to extend it to the single-camera iPhone XR.

Portrait mode has proved so popular since its 2016 introduction that Google and Samsung have replicated it in their own phones.

Apple, meanwhile, has permitted third parties to integrate Portrait mode into their own iOS apps. Facebook and Google are among the latest to do so, incorporating the tech into their apps in recent weeks to give their users some interesting new capabilities.

What Is Portrait Mode?

Portrait mode simulates using a DSLR camera lens with a wide maximum aperture to create a background blur. A DSLR achieves this effect optically, whereas the iPhone depends on software to pull off this stunt. Photography purists may turn up their noses at this “fake bokeh,” but it’s amazing when it works as intended.

Apple introduced the feature in 2016 on the iPhone 7 Plus and later built it into the iPhone 8 Plus, iPhone X, iPhone XS, and iPhone XS Max. Each of these phones has a dual-camera system on the rear, which was required for Portrait mode until the advent of Apple's new iPhone XR. The lower-cost iPhone XR has only a single rear-facing camera but still supports Portrait mode. It's a more limited version, though: it works with people, but not pets or inanimate objects.

Every Portrait mode picture has embedded depth and focus data from which the bokeh is derived. This data can be tapped in a variety of algorithmic ways, making such images deliciously malleable.

Apple, for instance, has recently made Portrait mode’s background blur adjustable. This Depth Control feature, available with the new iPhone XR, XS, and XS Max phones, lets users tweak the depth of field to blur the background as much or as little as they want.
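To make the idea of "malleable" depth data concrete, here's a minimal Python sketch of how a per-pixel blur amount could be derived from a depth map. It is not Apple's actual algorithm. It assumes the depth map is a simple grid of distances. The function name `blur_radius_map` and its `strength` and `max_radius` parameters are hypothetical stand-ins for a Depth Control–style slider.

```python
def blur_radius_map(depth, focus_depth, strength, max_radius=8.0):
    """Hypothetical illustration: map each pixel's depth to a blur radius.

    depth       -- 2D list of per-pixel distances (assumed units, e.g. meters)
    focus_depth -- the distance that should stay sharp
    strength    -- slider-like multiplier; 0 means no blur at all
    max_radius  -- cap so very distant pixels don't blur without limit
    """
    radii = []
    for row in depth:
        # Pixels farther from the focus plane get proportionally more blur.
        radii.append([min(max_radius, strength * abs(d - focus_depth)) for d in row])
    return radii


print(blur_radius_map([[1.0, 2.0], [3.0, 10.0]], focus_depth=1.0, strength=2.0))
```

Setting `strength` to 0 leaves every pixel sharp. Raising it blurs anything away from the focus depth more heavily, up to the `max_radius` cap.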

But Apple isn’t alone in taking advantage of the embedded depth and focus data.

Google’s Depth Control

Google has now added a depth-control feature similar to Apple’s to the Google Photos app, giving iPhone users another way to tap into this depth-of-field adjustability.

And there’s a twist: Google Photos provides this capability not only to users of the iPhone XR, XS, and XS Max, but also to those with older iPhone models with Portrait mode cameras—including the iPhone 7 Plus, 8 Plus, and X.

When editing a Portrait picture in Google Photos, you see a Depth slider underneath the Light and Color sliders. Move the Depth slider back and forth to decrease or increase the blur.

You can also change the focus point by sliding around a little white ring that appears atop the picture. Doing this lets you control what part of the photo stays in focus while boosting background blur.
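Moving the focus ring can be pictured the same way. In this hypothetical sketch, the function names and the `tolerance` parameter are illustrative only and are not Google's code. The depth at the tapped pixel becomes the new focus depth. Any pixel within a tolerance of that depth is treated as in focus, and everything else is a candidate for blur.

```python
def focus_from_tap(depth, x, y):
    """Hypothetical: read the depth under the tapped point (column x, row y)."""
    return depth[y][x]


def in_focus_mask(depth, focus_depth, tolerance):
    """Hypothetical: True where a pixel is close enough to the focus depth to stay sharp."""
    return [[abs(d - focus_depth) <= tolerance for d in row] for row in depth]


depth = [[1.0, 1.1, 5.0],
         [1.05, 4.8, 5.2]]
# Tapping the far object in the lower-right corner refocuses on it.
focus = focus_from_tap(depth, 2, 1)
print(in_focus_mask(depth, focus, tolerance=0.5))
```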

The tricky part with Google Photos is finding your Portrait mode photos to edit. While Apple’s Photos app collects all of these photos in a Portraits album so they’re readily available, Google Photos makes you search for them. (Or you could use Apple’s Portraits album for reference.)

Facebook’s 3D Photos

As a social network, Facebook cares less about letting you edit your photos and more about helping you make your photos compelling fodder for social sharing. It’s all about the clicks, baby.

Facebook’s 3D Photos do not have adjustable blur, but they do incorporate a subtle three-dimensional “parallax” motion that’s also derived from a Portrait photo’s embedded depth and focus data. You’ve seen this effect before if you enabled iOS’s Perspective feature while picking a new wallpaper for your iPhone.

When viewing such a picture in the Facebook app on any modern smartphone, tilting the phone causes the in-focus subject to shift in relation to the blurred background. If you are viewing these photos on a Mac, you'll see the parallax motion as you move your mouse around or scroll the Facebook feed.
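The parallax effect can also be sketched from depth alone. This hypothetical Python example illustrates the general technique and is not Facebook's implementation; `parallax_row` and its parameters are made up for illustration. It shifts one row of pixels by an amount proportional to inverse depth, so near pixels move more than far ones. Where two pixels land on the same spot, the nearer one wins.

```python
import math


def parallax_row(colors, depths, tilt, ref_depth):
    """Hypothetical: shift one row of pixels according to depth and tilt.

    Pixels at ref_depth stay put; nearer pixels move in the tilt direction
    and farther ones move the opposite way. Returns a new row where None
    marks a hole: a spot no source pixel landed on.
    """
    n = len(colors)
    out = [None] * n
    zbuf = [math.inf] * n  # depth of whatever pixel currently occupies each slot
    for x, (color, d) in enumerate(zip(colors, depths)):
        shift = round(tilt * (1.0 / d - 1.0 / ref_depth))
        nx = x + shift
        # Keep the pixel only if it lands in the row and is nearer than what's there.
        if 0 <= nx < n and d < zbuf[nx]:
            out[nx] = color
            zbuf[nx] = d
    return out


row = list("BBBFFBBB")            # B = background, F = foreground subject
depths = [4, 4, 4, 1, 1, 4, 4, 4]  # the subject is much closer than the background
print(parallax_row(row, depths, tilt=4, ref_depth=4))
```

The `None` values are parts of the background the camera never saw because the subject was blocking them. A real app has to invent something to fill those gaps. Imperfect guesses there are one plausible source of the kind of smudging described below.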

Any Facebook user can view 3D Photos, regardless of phone model or platform. However, not all browsers support them—Chrome and Safari do, but Firefox doesn’t. And only users of Portrait mode-capable iPhones can create them. Here’s how to do it:

- Start a new post in the Facebook iOS app.

- From the scrolling list of options at the bottom, choose 3D Photo to display all Portrait mode photos on your iPhone.

- Tap to pick one, and Facebook performs the 3D conversion.

- Tap the Next button at the bottom to see your post.

- Enter descriptive text as desired.

- Tap Share.

You’ll notice a few problems right away. Some 3D photos suffer from unsightly smudging during the parallax shifting. In one photo of me with my biking pals, our helmets detached creepily from our heads as I tilted my iPhone to and fro. (And no, we don’t know why the embedded photos have Chinese characters in them.)

Other odd things can happen—but some of my Portrait photos look sensational as 3D Photos.

To avoid some of these problems, follow Facebook’s detailed advice on how to create good 3D Photos, which includes looking for subjects with textures, contrasting colors, or multiple depth layers.

Portrait Mode Evolves

As noted, Apple has been generous in sharing its Portrait-mode capabilities with developers of third-party photo apps. Dig around Apple’s developer site, and you’ll come up with fascinating articles and videos on how depth can be incorporated into iPhone photography.

And these capabilities continue to evolve. With iOS 12, Apple is refining how pictures of people (that is, portrait photos) look by making software-generated effects more accurate and realistic. This is largely achieved through a new capability called Portrait Effects Matte (PEM).

App developers, such as the creators of the popular Halide app, are pretty pumped about PEM, which is what lets the iPhone XR's single rear camera produce excellent Portrait photos of people. Halide blog posts and a SlashGear post delve into PEM as well, giving more of a sense of how it can be used. For instance, Halide can export silhouettes of people as detected by PEM and can extend Portrait mode to non-human subjects on the iPhone XR. And the SlashGear post notes that PEM could enable green-screening, which is the capability to replace a background wholesale.
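A matte like PEM is essentially a per-pixel opacity map for the person in the shot, which is why green-screening becomes possible. Apple exposes it to developers through AVFoundation's `AVPortraitEffectsMatte` class. The Python sketch below ignores that API entirely. It shows only the standard alpha-compositing step an app might run once it has a matte. It assumes grayscale pixel values and matte values running from 0.0 (background) to 1.0 (person). The `composite` function is hypothetical.

```python
def composite(foreground, background, matte):
    """Hypothetical: blend a new background in behind a matted subject.

    Each output pixel is matte * foreground + (1 - matte) * background,
    so matte 1.0 keeps the person and 0.0 shows the replacement background.
    For color images, the same formula would be applied per channel.
    """
    if not (len(foreground) == len(background) == len(matte)):
        raise ValueError("foreground, background, and matte must be the same size")
    return [a * f + (1.0 - a) * b for f, b, a in zip(foreground, background, matte)]


# A soft edge (matte 0.5) mixes the two images half and half.
print(composite([200, 200, 200], [0, 50, 100], [1.0, 0.5, 0.0]))
```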

So keep an eye out for new Portrait mode–related effects and capabilities in iPhone photo apps. Google and Facebook may be the best-known developers taking advantage of it, but they’re not the only ones.

You're misusing the word 'bokeh'. It is not the out-of-focus effect itself that you get with a shallow depth of field; it is the aesthetic quality of the blur produced. Different lenses render out-of-focus areas in different ways, and bokeh is a 'measure' of this quality.

Thanks for noting this. It's something we've needed to research further, and I've needed an excuse to dive in. A quick lookup of the word in Apple's dictionary and Google's dictionary agrees with you, defining bokeh as

(Both Apple’s dictionary and Google’s dictionary license their contents from OxfordDictionaries.com from Oxford University Press, which is a good source.)

Similarly, Wikipedia says:

In general, Wikipedia isn't itself a source, but one of the references in the definition points to the Dictionary of Photography and Digital Imaging. I don't have a sense of how canonical that is.

However, Merriam-Webster defines bokeh as:

And American Heritage defines it as:

These last two are pretty clearly defining bokeh more the way Julio used it, rather than as a measure of the aesthetic quality of the blurriness.

So it may be that bokeh started out as a more strictly used word in the way you suggest but has subsequently become more loosely associated with the overall effect.