How iOS and macOS Dictation Can Learn from Voice Control’s Dictation

Speech recognition has long been the holy grail of computer data input. Or, rather, we have mostly wanted to control our computers via voice—see episodes of Star Trek from the 1960s. The problem has always been that what we want to do with our computers doesn’t necessarily lend itself to voice interaction. That’s not to say it can’t be done. The Mac has long had voice control, and the current incarnation in macOS 10.15 Catalina is pretty good for those who rely on it. However, the simple fact is that modern-day computer interfaces are designed to be navigated and manipulated with a pointing device and a keyboard.

More interesting is dictation, where you craft text by speaking to your device rather than by typing on a keyboard. (And yes, I dictated the first draft of this article.) Dictation is a skill, but it’s one that many lawyers and executives of yesteryear managed to pick up. More recently, we’ve become used to dictating short text messages using the dictation capabilities in iOS.

Dictation in iOS is far from perfect, but when the alternative is typing on a tiny virtual keyboard, even imperfect voice input is welcome. Most frustrating is that you cannot fix mistakes with your voice while dictating, so you end up either having to put up with mistakes in your text or use clumsy iOS editing techniques. By the time you’ve edited your text onscreen, you may as well have typed it from scratch.

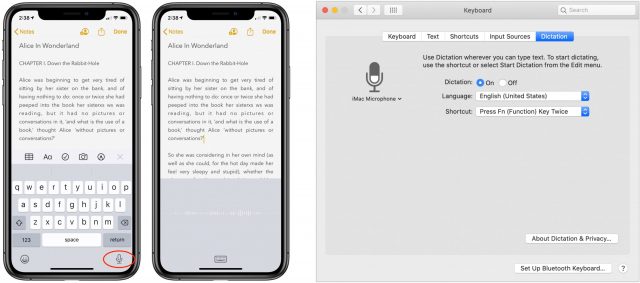

macOS has also had dictation features for years, but it has been even less successful and less commonly used than iOS’s feature, in part because it requires so much more setup than just tapping a button on a virtual keyboard.

With iOS 13 and Catalina, Apple significantly beefed up its voice control capabilities and simultaneously introduced what seems to be an entirely different dictation technology—call it “Voice Control dictation,” which I’ll abbreviate to VCD here. In many ways, VCD is better than the dictation built into iOS and macOS. An amalgamation of the two technologies would be ideal.

What’s Wrong and Right with iOS and macOS Dictation

The big problem with dictation in iOS and macOS is that, when it makes mistakes, there’s no way to fix them. But there are other issues. To start, you have to tap a microphone button on the keyboard (iOS) or press a key on the keyboard twice (Mac, set in System Preferences > Keyboard > Dictation) to initiate dictation. That’s sensible, of course, but it does mean that you have to touch your keyboard every time you want to dictate a new message. And that, in turn, means that you cannot just carry on a conversation in Messages, say, without constant finger interaction, which defeats the purpose.

Another problem with dictation in iOS and macOS is that it works for only a certain amount of time—about 60 seconds (iOS) or 40 seconds (macOS) in my testing. As a result, you cannot dictate a document, or even more than a paragraph or two, without having to restart dictation by tapping that microphone button.

But the inability to edit spoken text is the real problem. There is little more frustrating than seeing a mistake being made in front of your eyes and knowing that there is no way to fix it until you stop dictating. And once you have stopped, fixing a mistake is tedious at best, even now that you can drag the insertion point directly in iOS. iOS just isn’t built for text editing. Editing after the fact is much easier on the Mac, of course, but you can’t so much as click the mouse while dictating without stopping the dictation.

On the plus side, dictation in iOS and macOS seems to be able to adjust its recognition based on subsequent words that you speak. You can even see it doing this sometimes, changing a word back and forth between two possibilities as you continue to speak. Other times, changes won’t be made until you tap the microphone button to stop dictating or your dictation time runs out. Regardless, it’s good—if a little weird—to see Apple adjusting words based on context rather than brute-force recognition.

What’s Right and Wrong with Voice Control Dictation

The dictation capabilities built into Apple’s new Voice Control system are quite different. First, instead of navigating to Settings > Accessibility > Voice Control (iOS) or System Preferences > Accessibility > Voice Control (macOS), you can enable Voice Control via Siri—just say “Hey Siri, turn on Voice Control.” Once it’s on, whenever a text field or text area has an insertion point, you can simply speak to dictate text into that spot. You can, of course, also speak commands, but that takes more getting used to.

Unlike the standard dictation, however, VCD stays on indefinitely. You just keep talking, and it will keep typing out whatever you say into your document.

The most significant win, however, is that you can edit the mistakes that VCD makes. For instance, in the previous sentence, it initially capitalized the word “However.” (It has a bad habit of capitalizing words that follow commas.) By merely saying the words “lowercase however,” I was able to fix the problem. Those who are paying attention will note that the word “however” has appeared several times in this article. How does Voice Control know what to fix? It prompts you by displaying numbers next to each instance of the word; you then speak the number of the one you want to change. It’s slow but effective.
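To make the numbered-disambiguation step concrete, here is a toy model in Python. It is not Apple’s implementation, which isn’t public; it simply assumes the text field is a plain string, and the function names `find_occurrences` and `lowercase_occurrence` are hypothetical. It finds every whole-word match of the spoken word and, when there is more than one, uses the number you speak to pick which one to change.

```python
import re
from typing import List, Optional, Tuple


def find_occurrences(text: str, word: str) -> List[Tuple[int, int]]:
    """Return the (start, end) span of every whole-word, case-insensitive match.

    Each span's position in the list corresponds to the number (1, 2, 3...)
    a hypothetical overlay would display next to that occurrence.
    """
    pattern = re.compile(r"\b" + re.escape(word) + r"\b", re.IGNORECASE)
    return [m.span() for m in pattern.finditer(text)]


def lowercase_occurrence(text: str, word: str, choice: Optional[int] = None) -> str:
    """Model the spoken command "lowercase <word>".

    With a single match, it is changed directly. With several matches, the
    caller must supply `choice`, the 1-based number the user speaks.
    """
    spans = find_occurrences(text, word)
    if not spans:
        raise ValueError(f"{word!r} not found")
    if choice is None:
        if len(spans) > 1:
            # Ambiguous: this is where the numbered labels would appear.
            raise ValueError(f"{len(spans)} matches for {word!r}; say a number")
        choice = 1
    if not 1 <= choice <= len(spans):
        raise ValueError(f"no occurrence numbered {choice}")
    start, end = spans[choice - 1]
    return text[:start] + text[start:end].lower() + text[end:]


if __name__ == "__main__":
    text = "However, it works. The big win, However, is editing."
    # Two matches, so the user picks number 2 from the on-screen labels.
    print(lowercase_occurrence(text, "however", choice=2))
```

Matching whole words only keeps “however” inside a longer word from being offered as a candidate; a real system would also have to tie each spoken number to an on-screen position.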

There is another approach, too, although it works best on the Mac. If you select some text, which you might do with a finger or a keyboard on an iPhone or iPad, or with a mouse or trackpad on a Mac, you can then direct Voice Control to act on that particular text. For instance, in the previous sentence, VCD didn’t initially capitalize the words “voice control.” That wasn’t a mistake; I’m capitalizing those words because I’m talking about a particular feature, but they would not generally be capitalized. Nevertheless, I can select those two words with the mouse and say, “capitalize that,” to achieve the desired effect. This is a surprisingly effective way to edit. It’s easy and intuitive to select with the mouse and then make a change with your voice without having to move your hands back to the keyboard.

Some mistakes are easily fixed. When I said above, “it prompts you,” VCD gave me the word “impromptu.” All I had to do was say, “change impromptu to it prompts you,” and Voice Control immediately fixed its mistake. When that works, it feels like magic, particularly in iOS. Whenever I’m using a Mac, I prefer to select with the mouse and replace using my voice.

Of course, there are situations where voice editing falls down completely. Several times while dictating this article, I used the word “by.” VCD interpreted that as the word “I” most of the time, and no matter how I tried to edit it with my voice, the best I could do was the word “bye” and the command “delete previous character.” Or, when I wanted the word “effect” above, I ended up with “affect.” It was likely my fault for not pronouncing the word clearly enough. But when I tried “change affect to effect,” Voice Control treated me to “eat fact” the first time and “ethernet fact” the second time. Maddening! It’s strange, because if I just say the word “effect” on its own while emphasizing the “ee” sound at the start, it works fine.

There are other annoyances. With all dictation, you must, of course, speak punctuation out loud, which is awkward and requires retraining your brain slightly. If VCD interprets a word as plural instead of possessive, you can move the insertion point in front of the “s” and say, “apostrophe,” but VCD will put a space in front of the apostrophe, requiring yet more commands to fix the word. And just try getting VCD to write out the word “apostrophe” or “colon” or “period” instead of the punctuation mark.

Another issue that afflicts all dictation systems is the problem with homonyms. Without context, there is simply no way to distinguish between “would” and “wood,” or “its” and “it’s,” or “there” and “their” and “they’re,” by sound alone. VCD has no advantage here; standard dictation may do better.

Careful elocution is essential for recognition success when working with VCD (not that it ever recognizes the word “elocution” correctly). It is probably a good habit to get into. Many of us—myself included—slur our words together while speaking. It’s amazing that speech recognition works at all, given how sloppily we speak.

Unfortunately, VCD doesn’t work everywhere. On the Mac, I can’t get it to work in BBEdit or in Google Docs in a Web browser. In iOS, it has fewer problems, although I’m sure I’ve hit some in the past. I haven’t attempted to produce a comprehensive overview of where it works and where it doesn’t, so suffice it to note that it may not always work when you want.

Another problem, primarily in iOS, is that leaving VCD on all the time is a recipe for confusion because it will pick up other people speaking as well, or even music or other audio playing in the background. Luckily, you can always ask Siri to “turn off voice control” to disable it. Also, if you leave VCD on all the time, it will negatively impact your battery life.

Why Can’t We Have the Best of Both Worlds?

It doesn’t seem as though Apple would have that much work to do to bring the best of VCD’s features to the standard dictation capabilities in iOS and macOS. All that’s necessary is for the company to stop seeing VCD as purely an accessibility feature and start seeing it as something that could be of use to everyone.

The most important change would be to enable dictation to be invoked easily and stay on indefinitely. In iOS, I could imagine tapping the microphone button twice, much like tapping the Shift key twice turns on Caps Lock. On the Mac, perhaps tapping the dictation hotkey three times could lock it on until you turn it off again. That would let you dictate longer bits of text without having to leave Voice Control on at all times or rely on Siri to turn it on and off.

Next, all of VCD’s voice editing capabilities need to migrate to the standard dictation feature. I see no reason why Apple has made VCD so much more capable in this way, and it shouldn’t be hard to reuse the same code.

Finally, you should be able to move the insertion point around and select words while dictating. It’s ridiculous that any such action stops dictation in iOS and macOS now.

If it sounds like I’m suggesting that Apple replace standard dictation with a form of VCD that’s more easily turned on and off, that’s correct. Apart from occasionally improved recognition of words by context as you continue to speak, standard dictation simply doesn’t match up to VCD in nearly any way.

Unfortunately, as far as I can tell in the current betas of iOS 14 and macOS 11 Big Sur, Apple has made no significant changes to either standard dictation or VCD. So we’ll probably have to wait another year or more before such improvement could see the light of day.

macOS Big Sur beta 1 was released at WWDC on Monday, June 22, 2020. I coughed up the money for a developer account and downloaded and installed it on Tuesday, because I was keenly interested in what new features Apple had added to Voice Control. I went through the command list to see if Voice Control had any new commands; specifically, I was looking for the spelling command or mode I’ve been asking for. Remember, I have no use of my limbs. So if Voice Control misrecognizes a word and does not have an appropriate alternative in its correction list, I can’t just grab the keyboard and type in the correct word. I need to make the correction by voice, and if Voice Control had spelling functionality, I could do that. Unfortunately, there are no spelling commands or spelling mode yet.

There are a few new commands in macOS Big Sur beta 1. The new commands by category are as follows:

Basic Navigation:

Overlays & Mouse:

Dictation:

Text Selection:

Text Editing:

Accessibility:

Note: The Accessibility category is brand new in Big Sur. It was not there in Catalina.

In addition to the new commands, Voice Control in Big Sur seems to be quicker. The “Show numbers” command in Safari now numbers links on web pages, which makes it much easier to surf the web completely hands-free.

If you think it is frustrating to use the voice options, try doing it without sight. My father’s macular degeneration has finally reached the point where he is both legally and practically blind. While he can see enough to navigate the house, he can no longer interact with the computer or phone. The OS is a real disappointment when it comes to solving these problems. You should try to read some of his email or text messages; Siri butchers things quite often. You have to develop a bit of skill at deciphering cryptic messages to be successful.

-Chip

The ability to do mouse actions by voice: click and double-click.

I work a lot in Blackboard, which requires a great deal of clicking to do most things a teacher needs to.

Extra Scripts used to be able to do that, but then a system upgrade disabled it.

If anyone has any ideas how to click by voice, I would love to hear about them!

Thanks!

Thanks so much for the comparison list, @tscheresky!

I’m glad you noted that there is no spelling mode, since that’s a capability I’ve wanted as well, though I didn’t know enough to know what to look for or how to tell it wasn’t there.

I’d encourage everyone interested in Voice Control to submit the lack of spelling mode as feedback to Apple.

@pellerbe, it’s built in! With Voice Control turned on, move the pointer to the right spot and say “Click” or “Double-click.”

Well, I’m glad to hear that! (So are my arms.)

Which is the earliest system that has voice control with that included?

Thanks so much!

Polly

PS I now have a reason to buy a new Mac, never a bad thing to have.

I would assume, though I don’t know for sure, that it was part of the major Voice Control revamp in Catalina.

Voice Control, the Accessibility feature, was first introduced with Catalina.

Because I could not find the complete list of Voice Control commands online, and I wanted a complete list I could review to come up to speed on Voice Control more quickly, I created the following documents and shared them:

2020 iPadOS 14 Voice Control Commands

https://drive.google.com/file/d/1qD_V3YlZmSJ5UOJJlP47-PYNnk1OTDsr/view?usp=sharing

2020 macOS Big Sur Voice Control Commands

https://drive.google.com/file/d/1P4dh1H9pzEedCv2-1xXyE37Ej0QW_7-U/view?usp=sharing

Please note: the tabs (a.k.a. sheets) at the bottom of the spreadsheet (a.k.a. workbook) represent the categories of voice commands. Each tab has the commands for that category.

Check out the 2020 macOS Big Sur Voice Control Commands link I just shared. It contains all the voice commands by category, including mouse commands.

Dictation is something of great interest to those of us with manual dexterity problems, like me. Having used dictation in both macOS and iOS since its inception for this reason, I’ve found it interesting to track the ups and downs of its usability over this time and to develop workarounds for its most annoying foibles. (I’m using “interesting” in its most diplomatic sense here.)

This article is greatly appreciated — while I am unwilling to upgrade my Mac to Catalina yet, I’ll see how Voice Control behaves on my iOS devices using the latest system.

By the way, I used to use Dragon Dictation, and I’m a little surprised the article did not mention it at all. It had pretty good voice editing capabilities.

That’s because I’ve never used it. I can’t pretend to be an expert in dictation in general—what has piqued my interest is how it has become sufficiently available to everyone now, and sufficiently good that even those who don’t need to use it might be tempted.

I haven’t experienced any time limitation with dictation for macOS, through Sierra anyway. I do download “Enhanced Dictation” for offline use (a GB or two, depending on the system). Editing by voice was easy on El Capitan, though you need to turn on “Enable advanced commands” in Accessibility/Dictation/Dictation Commands… There’s a list of the editing commands there, and you can keep the list open while dictating for reference. In principle, it should work the same way on Sierra, but for some reason, instead of editing, it just parrots back the editing commands on my system. I may have installed a conflict of some sort.

Clicking the mouse and otherwise editing via mouse and keyboard doesn’t dismiss dictation on either El Capitan or Sierra. It might time out after a while if you wander off or spend a lot of time just thinking, but mine has been open and idle for about 5 minutes and is still there.

I haven’t tried dictation in Mojave or Catalina yet since my modern mini has no microphone, but all of the preferences look the same. Has dictation really regressed so much? If so, is it because Apple severed relations with Nuance a while back?

Enhanced dictation, as you point out, does behave slightly differently. I’ve tried it many times over the years, especially when my power goes out (as it does frequently) and I lose Internet connectivity, but I always end up going back to the Internet-based dictation because it’s slightly more satisfactory for my use. I do appreciate the reminder, though, because I recently upgraded to High Sierra and I don’t think I’ve tried it yet.

I would say dictation has definitely regressed, although of course it’s impossible to say why. For example, lately on my Mac I have not been able to get it to capitalize anything to save my life. Dictation on my phone tends to work a lot better.

I can’t comment on El Capitan and Sierra—I haven’t used them in many years. I don’t remember them as being sufficiently functional for dictation that I ever considered using it.

Perhaps @tscheresky is more familiar with what was possible in the past, though he would have been relying on Dragon NaturallySpeaking then.

“Sufficiently functional” is relative. In my case I can only type with two fingers, so even in its early days, dictation was a lifesaver.

Adam, you are right. The only part of macOS’s built-in voice recognition I’ve used, going back to Snow Leopard, was its ability to turn Dragon Dictate for Mac’s microphone on after I had turned it off, and to restart Dragon Dictate for Mac after it had crashed.

I don’t see a need for macOS Dictation because Voice Control has everything Dictation has and more. macOS Voice Control is only missing a few features that would make it a complete replacement for Dragon Dictate for Mac.

I still use Dragon Dictate for the Mac to this very day, and it has a very high accuracy rate. The accuracy rate is the key thing you want in voice recognition because, as you noted, it gets frustrating to have nonsensical words show up in your text. One thing you did not mention in the article is the use of a high-quality microphone to improve accuracy on the Mac; a good microphone can greatly increase the accuracy rate. When errors do occur, I find it much easier, more intuitive, and far more efficient to edit them with the keyboard and mouse.

I do too. I’m using it on my primary computer under Catalina. I even have it working on my secondary computer under the macOS Big Sur beta. However, those days are numbered. Not only has Dragon for Mac not been supported since October 2018, but the Dragon speech engine is x86-based. Therefore, I’m guessing anyone wanting to move to Apple silicon (me) won’t be able to do so unless Apple’s Voice Control comes up to speed and adds the missing features needed to become the alternative to Dragon for Mac.

As I see it, here is the path for running Dragon on the Mac. You tested it on Big Sur and it worked, and that was something I was personally wondering about. Next, on Apple silicon it should work just fine with Rosetta 2. If Apple removes Rosetta 2 in the future, then we head for virtualization. Will Parallels and Fusion have a solution for Apple silicon? My guess is yes. So I think Dragon for the Mac will be sustainable for the foreseeable future.

Apple engineers are some of the best in the business. I’m sure they have done an excellent job on Rosetta 2 emulation. However, the constraints for emulating an x86 speech engine are a lot more challenging than emulating, say, a word processor. I’m sure it can be done, but what’s the performance going to be like? Is it going to be quick enough to be usable? Will it have the hooks necessary to control the non-emulated environment?

Here I’m not sure if you’re talking about virtualization to run an older version of macOS, or Windows to run the Windows version of Dragon. Regardless of which, neither of them would work for me. I’m part of the mobility impaired group. I need native Voice Recognition (VR) to command-and-control my Mac in addition to being able to reliably dictate text. If you’re not interested in command-and-control of your Mac, then you should really try Voice Control right now (assuming you’re on Catalina or newer). The majority of the things I’m talking about Voice Control not having are primarily for those individuals, like myself, that need to operate their Mac completely hands-free. If you do not need to command-and-control your Mac by voice, then the current dictation capabilities and editing by voice with Voice Control should meet your needs.

Rosetta 2 does translation, not emulation. It translates the x86 instructions into ARM just once, at installation time, which results in excellent performance. So the constraints for running a speech engine and a word processor are no different under Rosetta 2. Apple demonstrated this capability at WWDC using Maya, which is far more CPU-intensive than a word processor or Dragon.

Hands down, Dragon is the best dictation software. Accuracy in Dragon is really good, and that alone is the deciding factor for me. So it really comes down to price, and since you can’t buy Dragon for the Mac anymore, it is essentially free for those who already have it.

Yup, I have to agree, and I continue to do medical dictation in Dragon successfully, even in Big Sur. Fortunately, there will likely be better cloud-based products, and I am pleasantly surprised by Fluency by M*Modal (though it’s still difficult to correct). If there is a case for machine learning, this is it.

Is there some kind of speech recognition users group? I find these conversations cropping up here and there, but I wonder if there is a more central place.