Get More Info from Photos in iOS 15 and iPadOS 15

Back in “How to Keep Facebook from Snooping on Your Photos’ Locations” (31 May 2021), I lamented the fact that Photos in iOS and iPadOS had no way to display Exif data. Little did I know that just a few days later, Apple would announce that feature in Photos for iOS 15 and iPadOS 15. And Apple didn’t stop at displaying basic metadata; it also added a Visual Lookup feature that can identify various types of objects in photos.

For more on all the new features of iOS 15 and iPadOS 15, check out my book, Take Control of iOS 15 and iPadOS 15.

Exif Metadata

Exif (short for “Exchangeable image file format”) is a standard for metadata that’s embedded in pretty much every digital photo you take. It includes information like the camera make and model, date and time, ƒ-stop, and ISO. For photos taken with an iPhone or other smartphone, the Exif data usually also includes the exact location where the photo was taken.
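For the technically curious, Exif data is stored in a TIFF-style structure: a header giving the byte order, then directories of 12-byte entries, each holding a numeric tag (0x010F for the camera make, 0x0110 for the model, and so on), a field type, a count, and either the value itself or an offset to it. The Python sketch below reads the top-level directory (IFD0) of a raw Exif payload using only the standard library. It’s an illustration under simplifying assumptions, not a complete parser: the function name `read_ifd0` is hypothetical, it assumes you’ve already extracted the Exif segment from a JPEG, and it doesn’t follow the pointers to the sub-directories where ISO, ƒ-stop, and location actually live.

```python
import struct

# A handful of standard TIFF/Exif tag IDs found in IFD0 (the main image directory).
TAG_NAMES = {
    0x010F: "Make",
    0x0110: "Model",
    0x0132: "DateTime",
    0x8769: "ExifIFDPointer",     # offset of the directory with ISO, ƒ-number, etc.
    0x8825: "GPSInfoIFDPointer",  # offset of the directory with location data
}

# Size in bytes of each TIFF field type this sketch decodes:
# 2 = ASCII, 3 = SHORT, 4 = LONG, 5 = RATIONAL (two LONGs).
TYPE_SIZES = {2: 1, 3: 2, 4: 4, 5: 8}


def read_ifd0(exif: bytes) -> dict:
    """Parse IFD0 of an Exif payload (6-byte 'Exif' identifier + TIFF data)."""
    if not exif.startswith(b"Exif\x00\x00"):
        raise ValueError("not an Exif payload")
    tiff = exif[6:]  # all offsets below are relative to the TIFF header
    order = {b"II": "<", b"MM": ">"}.get(tiff[:2])  # little- or big-endian
    if order is None:
        raise ValueError("unknown byte order")
    magic, ifd_offset = struct.unpack(order + "HI", tiff[2:8])
    if magic != 42:
        raise ValueError("bad TIFF magic number")

    (count,) = struct.unpack(order + "H", tiff[ifd_offset:ifd_offset + 2])
    tags = {}
    for i in range(count):
        start = ifd_offset + 2 + 12 * i  # each directory entry is 12 bytes
        tag, ftype, n = struct.unpack(order + "HHI", tiff[start:start + 8])
        size = TYPE_SIZES.get(ftype)
        if size is None:
            continue  # skip field types this sketch doesn't decode
        length = size * n
        if length <= 4:
            raw = tiff[start + 8:start + 8 + length]  # small values are stored inline
        else:
            (offset,) = struct.unpack(order + "I", tiff[start + 8:start + 12])
            raw = tiff[offset:offset + length]
        if ftype == 2:
            value = raw.rstrip(b"\x00").decode("ascii", "replace")
        elif ftype == 3:
            value = struct.unpack(order + f"{n}H", raw)
        elif ftype == 4:
            value = struct.unpack(order + f"{n}I", raw)
        else:  # RATIONAL: flattened (numerator, denominator) pairs
            value = struct.unpack(order + f"{2 * n}I", raw)
        if ftype != 2 and len(value) == 1:
            value = value[0]  # unwrap single numbers
        tags[TAG_NAMES.get(tag, hex(tag))] = value
    return tags
```

On a typical iPhone photo, the result would include entries like `Make` and `Model`, plus the `GPSInfoIFDPointer` offset that leads to the location data.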

To view a photo’s Exif info, open it in the Photos app and either swipe up on the image or tap the new info button in the toolbar. For pictures taken on an iPhone, Photos shows:

- The date and time the photo was taken (you can change this)

- The iPhone model, the image format, and an icon if it’s a Live Photo

- The specific camera that captured the image, along with its focal length and ƒ-stop

- The megapixel count, resolution, and file size

- The ISO, focal length, exposure value, ƒ-stop, and shutter speed

Below this information is a thumbnail of the image on a map. Tap the map to see a full-size map from which you can zoom out to see where you took the photo.

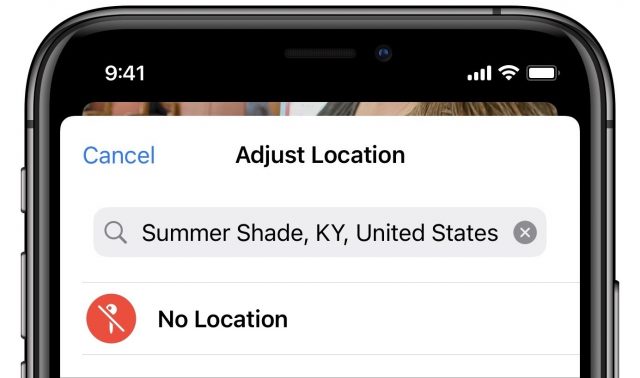
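Behind that map thumbnail, the location lives in a separate GPS directory. Per the Exif specification, the GPSLatitude and GPSLongitude fields each hold three rational numbers (degrees, minutes, seconds), while GPSLatitudeRef and GPSLongitudeRef supply N/S and E/W. Turning those into the decimal coordinates a map uses is simple arithmetic: degrees + minutes/60 + seconds/3600, negated for south or west. Here’s a small Python sketch of that conversion; the function name `dms_to_decimal` is hypothetical, and it assumes you’ve already pulled the rational values out of the file.

```python
from fractions import Fraction


def dms_to_decimal(dms, ref):
    """Convert Exif GPS degrees/minutes/seconds to decimal degrees.

    dms: three (numerator, denominator) pairs, as stored in GPSLatitude
         or GPSLongitude.
    ref: "N", "S", "E", or "W", from GPSLatitudeRef or GPSLongitudeRef.
    """
    # Fractions keep the arithmetic exact until the final conversion.
    degrees, minutes, seconds = (Fraction(num, den) for num, den in dms)
    value = degrees + minutes / 60 + seconds / 3600
    # South latitudes and west longitudes are negative in decimal notation.
    if ref in ("S", "W"):
        value = -value
    return float(value)
```

For example, `dms_to_decimal(((44, 1), (58, 1), (30, 1)), "N")` returns 44.975, and `dms_to_decimal(((93, 1), (15, 1), (0, 1)), "W")` returns -93.25.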

For the most part, Exif data is read-only, but you can change two values—date/time and location—by tapping Adjust next to either data point. When editing location data, you can tap No Location to strip location data from the photo, which you may want to do before sharing certain images publicly.

Of course, if you strip the location data, you won’t be able to find the image on a map anymore. It might be better to use the shortcut I created that shares photos without location data. Sadly, Apple didn’t see fit to add an option to iOS 15 and iPadOS 15 that mimics the “Include location information for published items” checkbox in Photos for macOS.

Visual Lookup

Metadata is data that describes other data. While Exif metadata is undeniably useful, Apple saw an opportunity to extend the metadata it can associate with photos to the objects pictured in the image itself. A new feature called Visual Lookup can identify:

- Popular artworks

- Landmarks

- Plants and flowers

- Books

- Pet breeds

As with some of the more computationally intensive features of iOS 15 and iPadOS 15, Visual Lookup requires an iPhone or iPad with an A12 Bionic processor or better (see “The Real System Requirements for Apple’s 2021 Operating Systems,” 11 June 2021).

If Photos detects something that Visual Lookup can identify, the info button shows a little sparkle. When you swipe up on the image or tap the info button to reveal the Exif panel, a new Look Up entry appears. Icons also appear over the recognized objects. Tap either Look Up or an icon to see what Photos thinks it is.

There’s no way to predict precisely whether Visual Lookup will work on any given photo, but it does seem to trigger for most pictures of plants, flowers, books, and pets. And when Visual Lookup works, it works pretty well. I’ve been taking pictures of various wild plants around my house to identify them. It correctly identified broadleaf plantain, and it determined that a picture of a volunteer tomato was either a tomato or a member of the Solanum genus (which it is). It narrowed down an invasive Tree of Heaven to either a Tree of Heaven or a member of the Ailanthus genus (which, again, it is). It also correctly identified a marigold in my garden.

Visual Lookup’s AI recognition is good but not perfect. It misidentified a perfect specimen of Chinese cabbage as lettuce. Plus, as noted, Visual Lookup can recognize more than one thing at a time, putting an icon over each object it recognizes. I fed it a photo of a dog and cat together (mass hysteria!), and it placed an icon over each. However, Visual Lookup can identify only a few types of pets, ignoring pictures of rabbits and chickens.

Visual Lookup does well with popular artworks, which is welcome, since you may recognize a painting without knowing much of anything about it. What qualifies as a landmark is a little unpredictable, although the picture from the Minneapolis Sculpture Garden below counted. With books, Visual Lookup seems to need a shot of the front cover, and it works best with recent titles. It misidentified Adam’s 1994 Internet Explorer Kit for Macintosh book as the second edition of his Internet Starter Kit for Macintosh from the same year, and it completely biffed the Internet Starter Kit itself, identifying it as Gruber’s Essential Guide to Test Taking: Grades 3–5 (by Gary Gruber, not John Gruber). Oops.

How useful and accurate have you found Visual Lookup so far? Let us know in the comments.

I’m on an iPhone SE second generation (spring 2020), but don’t see any Visual Lookup clues in Photos: no plants, no landmarks, no nothin’ … And this with some pretty obvious pictures of sunflowers and Notre Dame Cathedral in Paris. Any idea what is wrong?

Nothing to do with the topic, but I bet I’m the only one who recognized Summer Shade KY, the location of the photo in the screenshot. Nice little town in Metcalfe County.

It wasn’t working for me on my iPhone 12 mini in Australia. It only seems to be enabled for those in the US, according to online discussions.

I see. Might have been worth a mention in the article. I guess that, just as is the case with Maps, this reflects the relative importance of various markets for Apple …