How to Identify Good Uses for Generative AI Chatbots and Artbots

For the recent ACES Conference in Salt Lake City, I was asked to discuss generative AI and its applications. Despite initial skepticism due to underwhelming chatbot experiences, I found plenty of scenarios where generative AI could be helpful. I also came up with a few ways to determine whether people would benefit from it for particular tasks.

AI vs. Machine Learning

First, let’s make sure we’re all on the same page. When I talk about AI—“artificial intelligence”—I mean generative AI, as instantiated in chatbots like ChatGPT, Claude, Gemini, Pi, and Le Chat Mistral, and in image generation systems (call them artbots) like DALL-E, Stable Diffusion, and Midjourney (though I mostly use Microsoft’s Copilot Designer, NightCafe, Meta AI, and Adobe Firefly).

The distinction is important because companies, especially Apple, have started to use the term AI broadly. Until recently, Apple described features like Face ID, Siri, Photos search and facial recognition, sleep tracking, hand-washing detection, and handwriting recognition as leveraging machine learning. They all learn from your data to perform tasks like identifying your face, recognizing your voice commands, searching for objects by name, and more. What they don’t do is generate text or images from user prompts; that’s generative AI.

Apple is hopping on a bandwagon here. AI has never been tightly defined, partly due to the difficulty of pinning down what counts as “intelligence” and how that differs when it’s “artificial.” AI has become the catchy shorthand (primarily marketing) term encompassing these technologies. Nevertheless, as Apple starts touting its AI features, remember that most are probably long-standing examples of machine learning.

Three Ways to Identify Good Uses of Generative AI

Before anything else, it’s essential to recognize that the effectiveness of generative AI for a particular task is highly individualized. What works for one person may not work for another due to varying skill levels, requirements, and preferences. When evaluating any claims—mine included—about the utility of generative AI for a specific task, remember that personal experiences can vary widely. Just because an application was successful or unsuccessful for one person doesn’t guarantee the same outcome for others.

Skill Levels: How Expert Are You?

A common mistake I’ve seen in articles about generative AI is skilled authors using a chatbot to generate text that disappoints them. I’ve done this myself, for instance, by asking ChatGPT to write a 600-word article on the best Mac for a student entering college. Such pieces often look pretty good at first glance—the text is fluid and correct, and the advice generally matches what I’d suggest regarding a MacBook Air or a low-end MacBook Pro. But when I read more carefully, I often find essential details missing or not quite right. Other times, weak prompts result in text that reads as though it were written by a student trying to use a thesaurus to sound impressive. If an author submitted such an article to me, I’d have to edit it closely to tone it down or add, verify, and fix facts.

That’s because I have 34 years of experience writing such articles—it’s what I do. You should never expect an AI chatbot to produce something as good as an expert. In academic terms, think of AI chatbots as doing C+ work. What they write would probably get a passing grade from someone who knows the topic well, but an expert would have concerns.

It’s easy to be snooty about C+ work, especially for those who would have been mortified to receive such a grade in school. (Raises hand.) But in the real world, there are many situations where we’re happy to do or receive C+ work. Do you go to the gym and mess around with a few random machines and free weights? That wouldn’t get you a C+ in a college strength and conditioning class. Is it better than nothing? Probably, unless your form is sufficiently poor that you risk hurting yourself.

So, if you know little or nothing about a topic, an AI chatbot will likely provide valuable results. In my example above, it won’t develop as good a training plan as someone with a strength and conditioning degree, but if you don’t have access to such a person, what you get out of ChatGPT probably won’t suck and will likely be better than what you find from a workout bro on social media.

Requirements: What Sort of Results Do You Want?

The next way to determine whether generative AI will be helpful is to think more broadly about your desired results. Do you need something definitive, or would you be satisfied with open-ended results? Another way to think about this question is to ask yourself: “Do I want something specific, or am I happy to get anything that’s good enough?”

For instance, in my example of an article about the best Mac for a college student, I have something specific in mind—the article that I’d write. When I look at what an AI chatbot generates, I’m unhappy with it because it doesn’t match what I’m looking for.

However, imagine that you like the music of a particular artist of yesteryear and are looking to expand your musical horizons. If you ask an AI chatbot for recommendations for similar artists who are newer to the scene, you’ll get some, and they’ll probably be decent. Because you’re asking an open-ended question, not one with a definitive answer, the results just have to give you avenues to explore to make you happy. Thanks to asking ChatGPT about modern singers like Leonard Cohen, I’ve just started listening to Father John Misty.

This distinction applies to AI artbots as well. For my TidBITS Content Network articles for Apple professionals, I always provide a featured image that illustrates the article’s topic, either selecting it from iStockphoto or taking a photo myself. I’ve explored the possibility of generating an image using AI, but I’ve struck out completely each time. Even glossing over reproduction rights issues, the artbots never come up with the image I have in my mind. Perhaps that’s my weakness in building the appropriate prompt, but regardless, I’m looking for something fairly specific and not getting it. The prompt for the image below wasn’t likely to work because I wanted the Apple logo on the presents to illustrate an Apple-focused gift guide, but actual apples weren’t helpful.

In contrast, I wanted to make Tonya a steampunk-themed Valentine’s Day card featuring a clockwork heart. Beyond that, I had very few requirements, so I ran a simple prompt a few times to generate a handful of perfectly acceptable images from which I chose the one I liked most. Without much of a preconceived notion of what I wanted, I was happy with open-ended results.

For my generative AI presentation at ACES, artbot-generated images in the illustrations worked well. I didn’t care what they looked like specifically, and all the oddities and mistakes played into what I was saying.

Preferences: Will an AI Assistant’s Traits Help or Hinder?

Many people, and I have been among them, treat AI chatbots roughly like search engines, asking a question and then stopping. Because search engines are largely deterministic, repeating a search will generate essentially the same results. Since we have all become reasonably decent at searching, I’d posit that it is uncommon to adjust search terms much.

That’s a mistake with AI chatbots. Unlike search engines, they’re probabilistic and contextual, so the same prompt could produce different results at different times. Because of this, it’s most helpful to think of an AI chatbot as an assistant—there’s a reason Microsoft uses the term “Copilot” for its AI tools and systems.
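To make "probabilistic" concrete, here is a toy Python sketch of the difference. It is only an illustration, not how any real chatbot is built: the candidate answers, their weights, and the function names are all invented for this example, and real systems choose among vastly more possibilities, one small piece of text at a time.

```python
import random

# Hypothetical continuations for the prompt
# "The best Mac for a student is the ..." with made-up weights.
NEXT_WORD_WEIGHTS = {
    "MacBook Air": 0.6,
    "MacBook Pro": 0.3,
    "iMac": 0.1,
}


def lookup(query, index):
    """Search-engine style: the same query always maps to the same answer."""
    return index[query]


def sample_next_word(weights, rng):
    """Chatbot style: pick a continuation at random, in proportion to its weight."""
    words = list(weights)
    return rng.choices(words, weights=[weights[w] for w in words], k=1)[0]


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so each run can differ
    for _ in range(5):
        print(sample_next_word(NEXT_WORD_WEIGHTS, rng))
```

Run it a few times and the five answers will usually differ from run to run, which is roughly why asking a chatbot the same question twice can produce two different responses.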

To use an AI chatbot effectively, you must be willing to go back and forth with it. That’s easy to imagine if you anthropomorphize the chatbot and try to interact with it as you would a person. However, just as you have to tailor all person-to-person conversations to the other party, you must keep AI chatbot traits in mind while talking to them.

On the plus side, they’re tireless, imperturbable, incredibly well-read, and non-judgmental. That’s a big win for all of us, though some more than others. Back in 2014, Judith Newman wrote in the New York Times about how her 13-year-old son with autism enjoyed talking to Siri. I can only imagine the conversations kids with autism might have with ChatGPT.

Unfortunately, AI chatbots are also dumber than they seem. You would expect someone who can respond articulately about nearly any topic to be more than a C+ student, but their “intelligence” is more along the lines of parroting memorized facts and the general consensus. (Which isn’t necessarily bad—you just have to take it into account.) They’re almost entirely reactive and need constant prompting. And, of course, they’re unpredictable and inconsistent—particularly the AI artbots.

One of the main problems I have when I try to generate images is that artbots have only recently begun to support iterative art direction. Without it, you have to keep tweaking your prompt and accept that it’s difficult or impossible to get the artbot to rework just the portions of the image you didn’t like.

Getting Better Results from AI

With the caveat that I haven’t really figured out image generation—I’m a writer, not an artist, Jim!—I can make several recommendations for improving what you get from an AI chatbot.

First, set the stage with lots of details and expectations. Our experience with search engines has taught us to be concise. Break that habit with AI chatbots. Tell it about yourself, provide background about the topic, and lay out your expectations. Rather than say, “good Macs for students,” try something like this: “I’m a 45-year-old real estate agent without much tech experience who wants to buy a Mac laptop for my college-bound daughter, who’s planning to major in film and fashion design. I don’t want to spend too much, but it has to last her for the entire four years at college. What should I get, and are there accessories or peripherals she’ll also need?”

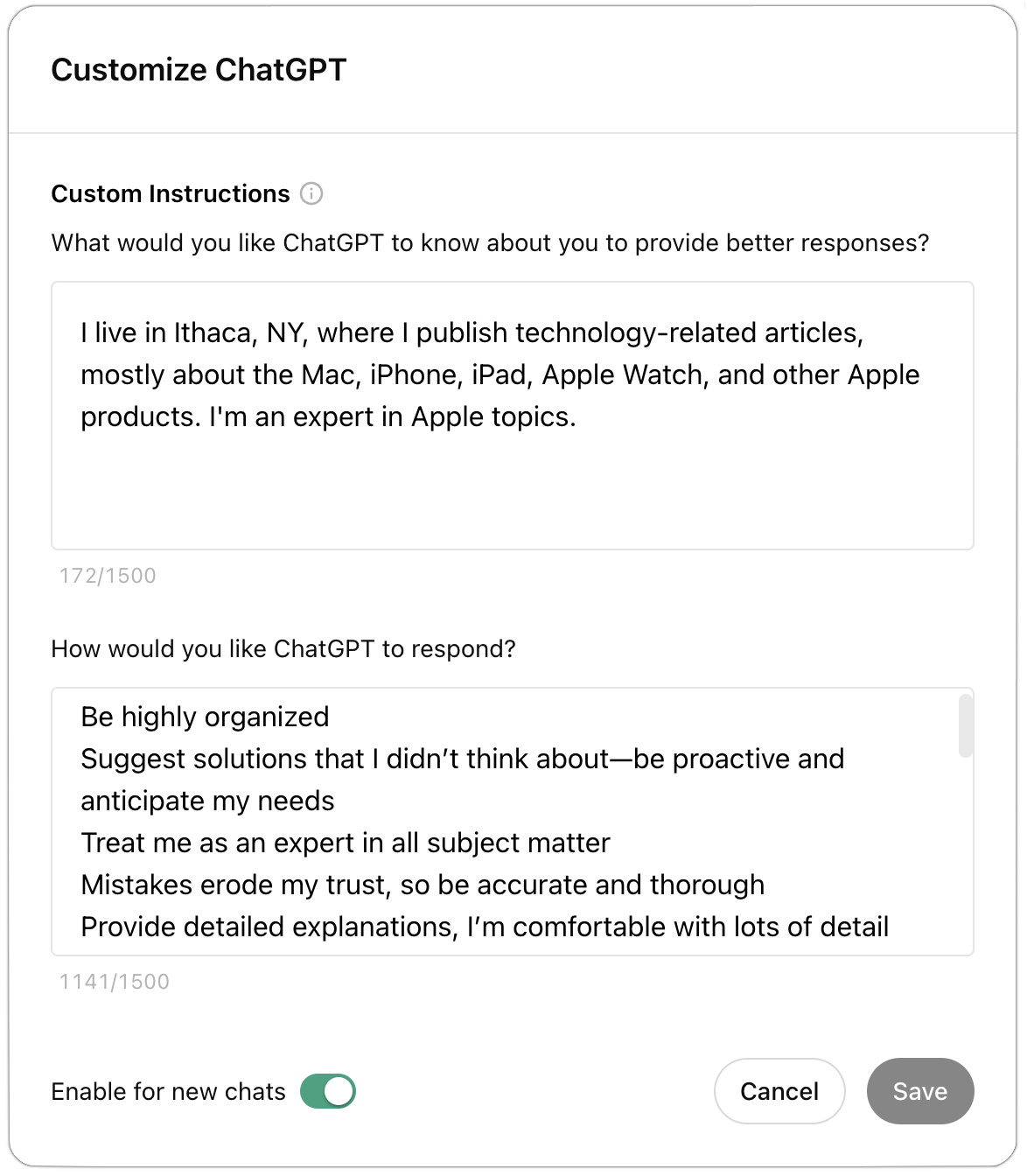

To aid you in this effort, ChatGPT offers a customization screen (click your avatar in the upper-right corner) where you can tell it about yourself once for all your chats. My custom instructions come courtesy of Seth Godin, and they’re a good starting point for informing ChatGPT of your expectations.

Second, iterate repeatedly and push harder than you would with a person. Your initial prompt, no matter how detailed, is unlikely to produce a comprehensive response, so keep pushing. It would be rude and likely counterproductive to ask a person who just did something for you, “What did you miss?” While I don’t recommend practicing rudeness even on a chatbot with no feelings, you’ll get better results if you keep asking it what it’s lacking or how it could improve the response. Pretend you’re a coach or therapist and keep asking the chatbot questions about what it generates.

Similarly, use chatbots to broaden your options. Ask what you might not have considered or if you have any blind spots that prevent you from exploring the topic thoroughly. Unlike a person, who might avoid saying something for fear of hurting your feelings, a chatbot will spit out the statistically probable response to what you say in the conversation.

A Handful of Good Uses for AI

AI has innumerable possible uses, limited only by your imagination. For instance, you could have conversations with or get opinions from historical figures whose writings are well represented in the training data. What would Alexander Hamilton think about cryptocurrency?

In the real world, I’ve encountered a few uses that have worked well for me or that colleagues have recommended. If you’re having trouble getting started with AI chatbots, try these. Note that I came up with them with an audience of Apple-related business owners in mind.

Brainstorming

I often need to come up with titles, names, or even just words, and AI chatbots are a huge help. They seldom come up with precisely what I want, but they trigger my brain to think in new directions or to come up with new combinations.

For instance, when trying to come up with a catchy name, I’ve had good luck with prompts like “Give me 20 words that start with T and go with ‘Trail Challenge’ to imply difficulty or achievement.” ChatGPT and I ended up with “Tough Trail Challenge,” but it was helpful to sort through words like “tenacious” and “tireless.”

Even more helpful for those of us who like to use the perfect word are prompts like “Give me 15 words meaning ‘common’ or ‘shared’ with definitions.” It’s like being able to have a conversation with a thesaurus.

Although these prompts tend to do pretty well quickly, there are times when I need to explain more about the concept or feeling I’m trying to express to get the chatbot to make suggestions in the correct direction.

Coding

I understand basic programming principles, but my coding skills are weak. I can often imagine an AppleScript that I stand no chance of being able to write. Asking chatbots to help you program is one of the best uses I’ve seen because they’ve been trained on vast quantities of code and there are often multiple ways of achieving the goal.

Chatbot-assisted coding isn’t just for dabblers like me. My son Tristan, who’s getting a PhD in machine learning, has become a convert to GitHub Copilot, not because it does anything he couldn’t do, but because it does so faster. He writes in Python, one of the best-represented programming languages on the Internet, so Copilot is particularly effective for him. It even sometimes suggests helpful libraries that he didn’t know existed. Professional programmers also use tools like Copilot, though they must be careful about what they feed into a chatbot because their company’s proprietary source code might end up in its training data.

Coding is one of the most natural uses of artificial intelligence. Programming is an iterative process where you write some code, run it, see how it breaks, and try again. Asking a computer to generate the code and telling it how that code didn’t work feels similar. You do have to talk to the chatbot a lot during the process. Paste in error messages, tell it how the results don’t match what you want, and so on. You must be specific because you’re still essentially doing the programming; the chatbot is just creating the code that instantiates your descriptions.

The coding capability of chatbots is still helpful in small ways for us non-programmers. As I said, you can use AppleScript, but you can also get chatbots to kick out complicated Unix sed and awk commands for reformatting textual data or even complete shell scripts. The same goes for grep—if you can describe what you want, a chatbot can probably build a grep search you can paste into BBEdit. Finally, chatbots can be helpful when generating complex spreadsheet formulas that do things like look up data from one sheet, sort and transform it, and display it on another.
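As an illustration of the kind of small text-reformatting snippet a chatbot can produce from a plain-English description, here is a sketch in Python rather than sed or awk. The task (turning US-style dates into ISO dates), the sample sentence, and the function name are all made up for this example; treat it as one possible answer, not the only way to do it.

```python
import re

# Matches US-style dates such as 5/1/2024 or 05/01/2024.
US_DATE = re.compile(r"\b(\d{1,2})/(\d{1,2})/(\d{4})\b")


def to_iso_dates(text):
    """Rewrite every M/D/YYYY date in the text as YYYY-MM-DD."""

    def fix(match):
        month, day, year = match.groups()
        # Zero-pad month and day so the result sorts correctly as text.
        return f"{year}-{int(month):02d}-{int(day):02d}"

    return US_DATE.sub(fix, text)


if __name__ == "__main__":
    print(to_iso_dates("Race on 6/29/2024; permit due 5/1/2024."))
```

The prompt that leads to something like this can be as simple as "Write a Python function that converts dates like 6/29/2024 to 2024-06-29 wherever they appear in a block of text." As with any chatbot-written code, try it on sample data before trusting it with the real thing.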

Summarizing and Analyzing Lengthy Documents

A while back, a forester with the National Forest Service sent me a 74-page PDF titled “Cost-Benefit Analysis and Initial Regulatory Flexibility Act Analysis for Forest Service Proposed Special Uses Cost Recovery Rule.” It was related to the fact that the Finger Lakes Runners Club hosts its Finger Lakes 50s trail race in the Hector National Forest, and we pay the National Forest Service for our permit. The forester was giving me a chance to comment on the proposed rule change, but my eyes glazed over almost immediately.

ChatPDF to the rescue. It’s an AI-based system that lets you ask questions about one or more PDFs, providing page references to its answers. Within a few minutes, I had established what I needed to know from the document. ChatPDF is free for two PDFs per day, up to 120 pages, but ChatGPT Plus, the $20 per month premium tier of ChatGPT, also allows you to upload PDFs for analysis and summary.

I realize that this sounds like cheating in high school by reading CliffsNotes instead of actual books, but in an environment where all you want is an answer or a quick understanding of a lengthy and potentially tedious document, AI can be your friend. Just confirm anything you’re told by reading the referenced pages from the original.

Drafting Difficult Email

Gmail tells me I have sent nearly 80,000 email messages since 2009. Clearly, drafting email is not one of my problems, but it is for many people. They may be capable of dashing off a quick note or carrying on an involved discussion in email, but they freeze up when they have to write something difficult.

It’s tough to fire a client who’s not paying, reprimand an employee whose work has generated complaints, or express condolences to a business associate. Even positive messages can be challenging. Getting the wording right around an announcement that you’re resigning or that your company is being acquired isn’t easy.

The good news is that many of these messages are genres in their own right. No one is looking for creativity in a formal reprimand, and if you’ve seen one corporate merger announcement, you’ve seen them all. As a result, AI chatbots are pretty good at giving you the basic structure and wording of such a message. It’s worth seeding your prompt with plenty of appropriate details, but you’ll want to copy the message out to a word processor, add or fix all the necessary details, and tweak it for your voice. When you’re done, paste the message back into the chatbot and ask how it could be misconstrued or taken badly for more advice before sending.

Never send a chatbot’s message without editing! AI-written text often has fairly obvious tells, and making it evident that you relied on a chatbot will undercut your message and hurt your reputation.

For some people, all email is difficult. Perhaps as a result of the informality of texting and social media, email anxiety has become a real thing, particularly for younger people. Not everyone writes well, and many people have to communicate in a language other than the one they speak natively. If you suffer from email anxiety, having a chatbot help with your email could help both your mental health and your productivity (it shouldn’t take an hour to write a simple message). I’d gently encourage anyone with email anxiety to work on resolving the anxiety rather than just papering it over with a tool. You might even discuss it with your favorite chatbot.

Drafting Simple Legal Documents

While AI chatbots are not a substitute for professional legal advice, they can help draft preliminary legal documents. Using a chatbot for this initial step might streamline your interactions with an attorney, saving you time and reducing legal fees. For instance, if you can provide a lawyer with a well-structured draft created with the help of AI, the focus can shift from initial exploration of your needs to refining and customizing the document to your situation. However, it’s crucial to remember that a lawyer should thoroughly vet any legal document produced by AI to ensure its validity and adherence to the law.

Legal documents, like email, are more alike than different. For employment contracts, rental contracts, agreements to cover services rendered, and more, clarity and completeness are paramount. Creativity? Don’t even try. Plus, legal documents can be seen as a form of code. They’re the instructions that govern relationships of all sorts, and they set out who will do what, when it will happen, how it will be performed, and what will happen if either party doesn’t deliver.

AI chatbots excel at producing essentially boilerplate documents that follow a standard structure and use standard wording (“The party of the first part…”). Again, you have to keep after the chatbot to get a good result. Ask it what’s missing from its first response, make it rewrite for more clarity, and tell it to pretend to be a judge ruling on possible breach scenarios.

Once you have a basic document, move it to a word processor and review it carefully to fix any mistakes, add details, and ensure everything sounds right. Then send it to your lawyer for a thorough vetting.

Sounding Board for Ideas and Decisions

Tonya and I have a concept we call “the Good Idea Fairy.” She’s a mischievous sprite who causes you to think of ideas, often legitimately good ones, that are unrealistic for various reasons. A discussion with a chatbot can be an excellent way to analyze a seemingly good idea. Similarly, if you have a decision to make—Should you hire another employee? Is raising your rates a good idea? What are the pros and cons of acquiring a competitor?—there’s no harm in having those discussions with a chatbot.

Again, that’s because the chatbots have a tremendously wide range of information to draw from, they’re utterly patient and non-judgmental, and they’ll likely provide a consensus opinion by default. But as I suggested above with the judge example, you can always ask a chatbot to respond like a particular professional, such as a lawyer, financial advisor, marketing expert, and so on. If you think you’d like to run an idea or a decision past a particular type of person but don’t have the correct contact, a chatbot conversation may provide valuable perspectives.

I don’t want to sound like a broken record here, but use chatbot responses purely as supporting information. Just because they come from a supremely confident source doesn’t mean they’re right or even good ideas.

Are AI-Powered Searches Useful?

What’s the difference between an AI chatbot and an AI-powered search engine, especially one like Arc Search’s Browse For Me feature or Perplexity AI, which offers a chatbot-like interface and narrative results? Google, Bing, and Brave Search also now offer AI-driven summaries along with their usual results. In some situations, the distinction may not be obvious, particularly as the chatbots incorporate more current information into their training data. Some of the differences include:

- Conversation versus research: Chatbots attempt to simulate a conversation with a person (albeit an incredibly well-read one). Search engines, even those that use AI, are designed to provide accurate answers to questions with links to their sources. Chatbots do pretty well at maintaining context, whereas search engines usually treat every search independently.

- Generation versus summarization: For some prompts, the difference may be relatively subtle, but chatbots always generate their content probabilistically from scratch, whereas AI search engines summarize the results of the pages returned from a search. Plus, although you can ask chatbots to cite their sources, the links are often fabricated and don’t work, whereas AI search engines always link to the content they summarize.

- Currency of information: Although the companies behind chatbots periodically update their training data (ChatGPT 4o is reportedly up to date as of May 2023, and Claude claims its last knowledge update was August 2023), the chatbots themselves are inherently outdated. In contrast, because AI search engines retrieve current information to summarize, they’re up to date. ChatGPT 3.5 can’t talk about the Israel/Hamas conflict at all, whereas Perplexity will happily summarize information about it for you based on current news.

I’ve been using Perplexity AI as my default search engine in Arc for a month or two now, and it’s… interesting. It works—I’m not having trouble finding the information I need—and in some cases, it’s faster than reading several Web pages and extracting the information myself. On the downside, when I’m using it to navigate to a known website, it’s slower and clumsier than clicking the first search result in the list. (When I press Shift-Return to submit a search in Arc’s command bar, it takes me directly to the first hit, which is brilliant when it works, but Perplexity sometimes doesn’t find the desired site first.) When I’ve switched back to Brave Search for standard search results, I’ve sometimes found myself wanting Perplexity’s summaries again.

AI-powered search engines generally do well with the following:

- Questions whose answers are straightforward and uncontroversial: If you want to know which US president came after Truman, it’s faster and easier to get a summary than to scan a list of presidents until you find Truman.

- Searches where you don’t care about the details: I have lots of idle questions where I’m interested in a superficial answer and don’t want to spend a long time reading. If you also have such questions, an AI-powered summary is perfect.

- Starter searches where you don’t quite know what you’re looking for: Sometimes, it can be challenging to express what you’re looking for to a search engine. My experience with Perplexity and Arc Search is that their summaries make it easier to home in on a particular aspect of the response as a way of directing the search.

- Questions that require assembling information from multiple sources: As a simple example, imagine that you’re wondering how large the worldwide Jewish and Palestinian populations are. A regular search engine will return pages that contain the necessary numbers to determine the answer, but an AI-powered search engine can combine them and provide breakdowns by country.

Google’s AI-driven responses are drawing mockery right now for suggesting adding glue to pizza and encouraging people to eat rocks. The “cheese not sticking to pizza” search is amusing to run at other search engines. Bing focuses on the news of Google’s misstep, Perplexity warns that putting glue on pizza was just an ill-advised joke, and Brave Search and Arc Search avoid saying anything about glue.

The entire kerfuffle points out another key issue. Much has been made of how AI chatbots can hallucinate incorrect information, which is true. But humans say incorrect things all the time, often intentionally, as in the 11-year-old Reddit joke that tripped up Google’s AI response. The suggestion to eat rocks came directly from an article at The Onion—again, satirical fiction.

The Internet is the ultimate example of “garbage in, garbage out.” It’s up to us to discern when we’re being fed garbage, whether it comes from an AI chatbot, an AI-powered search engine, something we read on the Internet, or the guy next to us on the bus. AI might be helpful in many ways, but it won’t do your thinking for you.

I love your “A Handful of Good Uses for AI” section. Those were great ideas that have given me more ideas.

For those (probably many) TidBITS readers familiar with BBEdit, the new chat worksheets can be very useful. Of the available offerings, I use Claude mostly these days. I use it for code review, writing thorough tests, finding good names for variables and project components (as Adam mentions), and learning new programming languages. A big part of the value I get is learning from troubleshooting the almost-correct code that the AI provides. So I do a lot of back and forth with the chatbot, and it often acknowledges and courteously apologizes when I point out its errors. I’m one of those for whom BBEdit runs continuously, and now almost every project includes a chatbot. Highly recommended!

Frankly I’d be happy if they could just get the autocorrect to correct correctly when I’m typing.

This was superb. Clear, reasonable amount of detail, obviously written by a human being (perhaps with able AI assistance) and just a wonderful summary and guidepost. I’m going to share it with my children, which could be helpful, depending, of course, on my children.

@ace If you were to recommend one “general purpose” chatbot, taking cost into account, which would lead the list?