Why AI Web Scraping (Mostly) Doesn’t Bother Me

Within the Apple pundit echo chamber, it seems like everyone is venting about how tech giants and AI startups are using Web crawlers to train their large language and image diffusion models on information sourced from the public Web. Even Applebot is coming under fire because Apple first trained its models for Apple Intelligence and then said that Web publishers could opt out.

John Voorhees and Federico Viticci of MacStories have penned an open letter to the US Congress and European Parliament that encapsulates the outrage, with links to a boatload of supporting articles. If you’re unfamiliar with the topic, you might want to read their piece for context. In short, they consider training AI models on content from the public Web without having requested prior permission to be ethically problematic, and more generally, they worry that the use of these tools will diminish creativity and consolidate “knowledge” in the hands of tech giants.

I can’t say that John and Federico are wrong. They seem to feel threatened, and I’m not one to tell someone that their feelings are invalid. Nor can I confidently say that the future they predict won’t come true. Finally, I don’t mean to pick on them specifically—I’m using their letter merely as a representative of feelings and concerns that are shared by many others in the publishing world.

Nevertheless, speaking as someone who has been publishing technical content on the Internet for over 34 years (nearly 16,000 TidBITS articles and hundreds of Take Control ebooks), I’m just not that bothered by all this. My overall opinions aren’t usually so divergent from those of my tech journalism peers, but since no one seems to be acknowledging that there are multiple sides to every issue, I want to explain why I’m largely unperturbed by AI and much of the hand-wringing that seems to permeate coverage of the field.

I publish to enrich the world, not to make money

Much of the concern seems to center around the belief that AI will prevent writers and artists from making money. I don’t believe that’s necessarily true, and even if it is, it’s not as though the Internet hasn’t caused multiple disruptions to business models in the past.

Regardless, I don’t share this concern because I publish TidBITS to help people understand and make the most of their technological surroundings, not primarily to make money. That has always been true—we published TidBITS for over two years before pioneering the first advertising on the Internet in 1992 (see “TidBITS Sponsorship Program,” 20 July 1992). While the income from the TidBITS sponsorship program was welcome, it wasn’t significant for years. It also eventually waned in importance—since 2011, an increasing percentage of our revenues have come from the TidBITS membership program (see “Support TidBITS by Becoming a TidBITS Member,” 12 December 2011), which lets those who benefit from our work support us directly. I don’t see AI chatbots changing that at all.

But TidBITS has an unusual business model. For many other companies, the bottom line is paramount, and reasonably so. I would suggest that they watch for shifts in the ecosystem and be willing to adapt—we’ve had to do that several times in response to competitive and business pressures. Generative AI may be an agent of change for some industries, just as the Internet has been since the early 1990s. Flourishing in the future has long required adaptability.

AI chatbots won’t take over original content

Many people seem to be worried that AI-generated content will “replace or diminish the source material from which it was created,” as the MacStories letter says. It’s unclear to me what would need to happen for this to be true, at least for genuinely original content. When I write about one of my tech experiences, the only place such a story can come from is my head. I fail to see how my creativity would be diminished by what others do.

It’s important to remember that AI chatbots don’t do anything on their own. They produce text only in response to a prompt from a person, so ChatGPT isn’t somehow going to start a newsletter that will compete with TidBITS. A person could do that, and they could use ChatGPT to generate text for their newsletter. But that’s not the fault of generative AI, and it’s not as though I haven’t had to deal with competition from the likes of 9to5Mac, AppleInsider, and MacRumors as they appeared over the years.

Derivative content is valuable mostly in context

Even in TidBITS, most content isn’t entirely original—scoops are few and far between for most publications. Something happens, information becomes known, and I decide if it’s a topic worth sharing. That’s derivative content. But I research everything I write by reading more about the topic and talking to people, and what I learn informs the resulting article. My eventual article may also draw from my personal experience or simply be what I consider a better way of explaining a complex topic. Such content gains value because of how I’ve chosen to relate it, but it’s adding to the discussion, not starting it.

Could someone learn about what I write from other sources? Certainly, and generative AI doesn’t change that. If what you want to learn is widely known by others, numerous websites already contain that information (at varying levels of clarity and accuracy), and searching the Internet will reveal it. Personally, I’m uninterested in playing the SEO game to try to get my version of some article to rank higher in the search results. It will or it won’t, and my experience is that nothing I do will change that in a big way. I’ve focused my business model on serving regular readers, not chasing eyeballs online.

Old content isn’t worth much

Implicit in the complaints about AI crawlers scraping websites is the concern that it will somehow hurt the market for a site’s older content. If you’re the New York Times (which is suing OpenAI), your back content may be extensive enough and historically important enough that it has at least aggregate value.

However, I’ve found that the nearly 16,000 articles in our archive are largely irrelevant from a business perspective. They might attract a few clicks, but too few to make impression-based banner advertising worthwhile. We don’t generate the millions of pageviews necessary to turn the low CPM rates on banner ads into useful amounts of money.

Web publishing requires constantly creating new content—that’s what real people want to read, and while generative AI may make it somewhat quicker to do that, it’s not drastically different from how some websites hire low-paid workers in other countries to churn out unoriginal posts.

Web scraping doesn’t usually cause operational problems

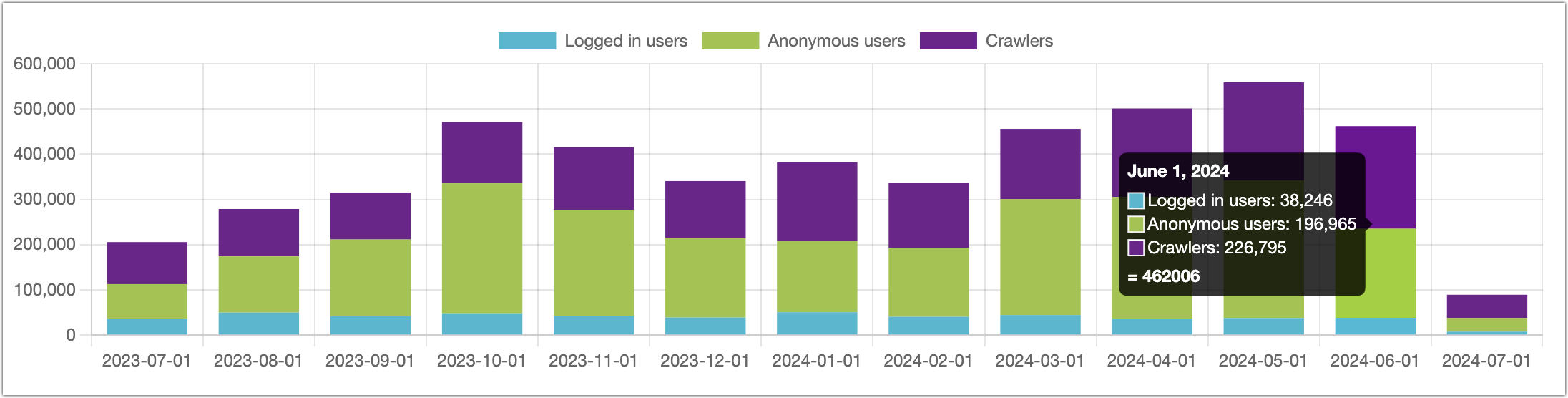

Crawlers are a fact of life in Web publishing. The Discourse software we use for article comments and TidBITS Talk shows that crawlers generate a quarter to a third of all page views. The extra traffic doesn’t cost us anything or slow down the server because our hosting plan includes sufficient CPU power and more data than we need. That’s not expensive—DigitalOcean charges just $14.40 per month for our Discourse server.

However, small groups with limited hosting plans may be more put out by excessive crawler traffic, regardless of the source. The Finger Lakes Runners Club recently incurred several monthly overage charges with its WPEngine host because of traffic spikes caused by badly behaved AI crawlers. Scraping isn’t the problem; the problem is crawlers that assume every website has infinite processing power and bandwidth. That’s one step below a denial-of-service attack. Such bots—whatever their purpose—should be blocked.
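For a site owner who wants to turn away a particular crawler or ask crawlers to slow down, the conventional first tool is robots.txt. The sketch below is a minimal illustration, assuming a hypothetical crawler named ExampleAIBot and a hypothetical site at example.com. It uses Python’s standard urllib.robotparser to show how a compliant crawler would interpret the rules. Keep in mind that robots.txt is purely advisory, so it does nothing against bots that ignore it, and Crawl-delay is a nonstandard directive that some crawlers don’t honor. Truly abusive traffic has to be blocked at the server or firewall.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: shut out one (made-up) AI crawler entirely and
# ask every other crawler to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Crawl-delay: 10
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

article = "https://example.com/articles/ai-scraping"
print(parser.can_fetch("ExampleAIBot", article))   # False: fully disallowed
print(parser.can_fetch("SomeSearchBot", article))  # True: falls under "*"
print(parser.can_fetch("SomeSearchBot", "https://example.com/admin/login"))  # False
print(parser.crawl_delay("SomeSearchBot"))          # 10
```

A well-behaved crawler checks these answers before every fetch; a badly behaved one simply never asks.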

Links and citations aren’t as helpful as we’d like

One of the main complaints about AI chatbots is that clever prompts can cause them to derive their answers heavily from specific sources or even come close to regurgitating text without credit.

I had some fun asking ChatGPT to tell me about The Verge’s coverage of WWDC 2021 because Vox Media owns The Verge, and OpenAI’s licensing agreement with Vox Media requires “brand attribution and audience referrals.” Although ChatGPT was happy to credit The Verge when asked specifically, the links it created never worked for me. When I asked for plain text URLs, I could see that everything in the URL was accurate except the necessary article ID. We’re still in the early days of chatbot attribution capabilities.

On the one hand, I do think that credit is important, and if a chatbot derives significant portions of a response from particular sources, those sources should be credited and linked, much as the AI-driven search engine Perplexity does. However, the desire to receive credit for one’s work is more about manners than business models. It was also an issue for years before chatbots came along.

We’ve published plenty of articles that have generated derivative reporting on numerous other sites. The credit has often been weak at best, with some sites giving TidBITS or the author credit and linking back to the article, but many others doing one or the other but not both, and still others failing to provide credit at all. Regardless, apart from commentary sites like Daring Fireball that encourage readers to read the original article to understand what’s being said (which is also what we do with our ExtraBITS articles), we’ve seen very little referral traffic when other publications link to our original reporting. It’s unsurprising—they’ve essentially rewritten our article, so why would anyone want to read it again?

As a result, I write for those who already read TidBITS, not hypothetical readers who might stumble across one of our articles from a link. The vast majority of such people are one-and-done readers and probably couldn’t say where they visited anyway. That’s a fact, not a criticism—beyond a few big names like Wikipedia and YouTube, I can’t remember all the sites I’ve read while researching this article.

More to the point, I wonder to what extent actual conversations with chatbots return text that should be credited. Just because a chatbot can be prompted to do something undesirable doesn’t mean it’s actually doing so in most situations. It’s legitimate to push the limits on what you can get a chatbot to say, but that’s like probing for security vulnerabilities in other systems. Just because a security researcher can break into a system doesn’t mean everyone can, and just because someone has figured out how to get a particular chatbot conversation to jump the shark doesn’t mean most other conversations stray from the straight and narrow.

It’s called the open Web for a reason

I also have trouble with the suggestion that content intentionally shared on the open Web should require prior permission to use. First, it’s not helpful to complain about something that’s patently impossible—there are 1.9 billion websites out there.

Second, the entire point of publishing on the open Web is to make the content available to everyone. If you want to restrict who can read your content or how they can use it, you put it behind a paywall.

Third, there are already numerous ways content can be repurposed on the open Web without permission, including quoting and linking. We accept those, even when we may not appreciate the quote or link, because there’s no way to say who gets to do what with your content.

Search engines are the prime example here. By scraping the open Web, Google has become one of the world’s most valuable and influential companies. We let Googlebot in because of the implicit agreement that Google will drive traffic to us in return for indexing our content. In fact, Google offers two things in exchange for content sourced from the open Web—traffic to Web publishers and the overall utility of a general-purpose search engine to the world.

But that trade isn’t quite as simple as it seems from the publisher perspective. There are other search engines and many other crawlers. I’m willing to bet that none deliver nearly as much traffic as Google—over 90% of our search engine referrals come from Google, less than 4% from each of DuckDuckGo and Bing, and 1% from Yahoo.

From the user perspective, although we’re still learning what AI chatbots and artbots are good for (see “How to Identify Good Uses for Generative AI Chatbots and Artbots,” 27 May 2024), generative AI opens up entirely new possibilities. Perplexity should be slapped for ignoring robots.txt and hiding its user agent to avoid blocking, but it can be quite helpful in ways that traditional search engines aren’t. It extracts and summarizes key information from a handful of pages that may require non-trivial time to read and parse. The combination of Perplexity and an Apple Intelligence-enhanced Siri makes the concepts in Apple’s 1987 Knowledge Navigator video seem within reach. That’s enticing.

AI crawlers don’t copy the way we think of copying

One of the subtleties of how large language models are built is that they don’t store copied content. An AI crawler has to read the content, which could be construed as copying at some level. However, what the training process actually does with the content is adjust the weights in the neural network, making certain connections between words slightly more probable and others slightly less probable. Adding more content doesn’t inherently make the model bigger. (Props to Bart Busschots for pointing out this key fact.)
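To make the point about model size concrete, here’s a deliberately tiny sketch that is nothing like a production LLM: a next-word model over a hypothetical seven-word vocabulary whose only state is a fixed grid of weights. Training nudges those weights with a standard softmax cross-entropy gradient step, so word pairs it has seen become slightly more probable, but the training sentences themselves are discarded, and the number of weights stays the same no matter how much text goes in. Real models use subword tokens, vastly larger networks, and much longer context than one previous word.

```python
import math

# Hypothetical toy vocabulary; real models use many thousands of subword tokens.
VOCAB = ["the", "cat", "sat", "on", "mat", "dog", "ran"]
INDEX = {word: i for i, word in enumerate(VOCAB)}


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ToyNextWordModel:
    """Fixed-size model: one row of scores for each possible previous word."""

    def __init__(self, vocab_size, learning_rate=0.5):
        self.learning_rate = learning_rate
        self.weights = [[0.0] * vocab_size for _ in range(vocab_size)]

    def parameter_count(self):
        return sum(len(row) for row in self.weights)

    def prob(self, prev_word, next_word):
        return softmax(self.weights[INDEX[prev_word]])[INDEX[next_word]]

    def train_on(self, text):
        # Out-of-vocabulary words are dropped (a simplification).
        ids = [INDEX[w] for w in text.lower().split() if w in INDEX]
        for prev_id, next_id in zip(ids, ids[1:]):
            row = self.weights[prev_id]
            probs = softmax(row)
            for j in range(len(row)):
                target = 1.0 if j == next_id else 0.0
                # Cross-entropy gradient w.r.t. the scores is (prob - target).
                row[j] -= self.learning_rate * (probs[j] - target)
        # Only the weights changed; the text itself is not kept anywhere.


model = ToyNextWordModel(len(VOCAB))
size_before = model.parameter_count()
p_before = model.prob("cat", "sat")
for _ in range(100):
    model.train_on("the cat sat on the mat")
    model.train_on("the dog ran")
print(model.parameter_count() == size_before)  # True: still 49 weights
print(model.prob("cat", "sat") > p_before)     # True: "cat sat" is now likelier
```

Feeding this model thousands more sentences would change the numbers in the grid, not its size.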

Despite this, it’s possible for a chatbot to regurgitate text from a particular source word for word. The statistical probabilities may work out such that the words in a particular source are more probable than others. It’s not good for a chatbot to do this, but it’s not all that far off from what authors of a lot of derivative content do when summarizing or recasting original articles.

It will be up to the courts to decide if what AI companies are doing counts as copyright infringement. I tend to think that it won’t because copyright protects expressions of ideas, not the ideas themselves. Even if the courts decide that copying is happening, what the AI companies are doing may qualify as fair use if it’s determined to be transformative and not to pose a commercial threat to the market for the work.

This may speak to my background as a writer, but my feeling is that artists have a significantly stronger leg to stand on than writers with respect to the market effect. It’s not hard to imagine someone asking an AI artbot to generate an image “in the style of” and being happy enough with the results to avoid hiring the artist. I have a harder time believing that a publisher could do that effectively with text, especially as the length and complexity of the desired output increases.

I like feeling disproportionately represented in LLMs

Ultimately, I’m slightly pleased that generative AI models have trained themselves on so much of my content. That means my authorial and editorial voice is far more heavily represented than that of most other people. It’s an unusual legacy that I never anticipated.

An excellent and nuanced take on this subject that led me to examine my reactions a little more closely.

I do think there are reasons to remain vigilant and concerned. The now infamous “eat a rock a day” and “put glue in your pizza sauce” advice should give us pause because the LLM chatbots returned results that were obviously derived from a single source, yet presented those results as though they were some kind of consensus. I think that should be concerning both to authors and consumers of content.

The advent of mass-market printing, newspapers, radio, television, blogs, and social media giants all presented both opportunities and new challenges to writers and readers alike. One of our favorite throw-away lines around my house is “and it was on the internet, so I know it’s true.”

We’ve had to adjust, over and over, to the notion that the content we consume is crafted and delivered in ways that serve the desires of many different actors, and to consider all such media critically. Someone who contents themselves with consuming solely AI-generated content will have an impoverished existence, just as would someone who did the same with commercial televised content or even published books.

A thoughtful piece! Thanks for writing it!

Excellent article, Adam! I fully agree. I long ago (back in the 1990s) concluded that any content I didn’t want in the de facto “public domain” had to remain behind a paywall. That doesn’t mean I don’t still own the copyright, but I can’t really complain if someone uses my content without credit or steals bits of it.

I do find AI scraping to be suspect if the training text is behind a paywall (like perhaps the NYT which requires a subscription). To me that shouldn’t be used for training. If AI robots are doing that, the owners should be sued and lose.

Tech blogger Manton Reece posted an interesting take on this today which expressed a lot of my own ideas and questions on training. For example, humans learn by reading, so why shouldn’t robots?

Manton Reece - Training C-3PO

And humans sometimes accidentally plagiarize. Who hasn’t written a paper in school where you discovered you’d quoted a line or two from an encyclopedia verbatim without meaning to do that?

Lots of fascinating questions.

This is another variation of asking for forgiveness rather than permission. And in almost all cases, the former is lauded as ‘easier’ or even ‘better’. I am a regular user of iNaturalist - which is a very sophisticated database of living things on the planet. It has an excellent AI that is supplemented by many human experts. I freely add data to this - and I put in quite a bit of work to do this. But that particular project is one where you give your explicit permission to participate. There are also ways to obscure sensitive content - like exact location or even the identifier - if that is what is best for you, or for the endangered organism. Wouldn’t you rather have that model?

Doesn’t it depend on the time scale and what is being done with the information, especially if it is being sold back to the author? A case in point is the current row between Facebook and Australian publishers, in which the former is refusing to pay for news stories generated by the latter but “scraped” and “sold” to Facebook subscribers. The effect of this is that Australian journalists are being laid off (30 staff from 9News threatened this week, because the company is losing the fees it normally collects).

Part of the equation seems to be the time scale - nobody minds if the news is collected to eventually end up as history in a public medium, but if its IMMEDIACY is being sold without attribution or compensation we have an issue. Another element is the reach of the law - anyone who “steals” news from a US source can easily be pursued there, but an international pursuit is expensive if not impossible.

Your last paragraph gave me a chuckle. It also made me think of the distinctions we fail to make between historical knowledge and current needs. Much of the Tidbits archive is about tech that is now historic, rather than tech for current use. History has its uses, sure, but what we need to know about our current tech takes undeniable precedence. Will AI develop into something that can install, check, and troubleshoot tech we use today in any meaningful way? Perhaps, but that still seems to be yet to come.

Meanwhile, as a detailed repository of historic value, it can serve a purpose that could help inform later decisions positively, perhaps even more so when summarized by AI, and subsequently interpreted and understood by natural intelligence. So I had to chuckle at the idea of a Tidbits chatbot telling tales of the horrors of System 7 to schoolchildren around an electric campfire in the near future!

A thing I have long found annoying is publishers who deliberately make their content available to scrapers so that it shows up in, say, search results, but then throw up a paywall when I try to access it as an end user. That’s a bait-and-switch tactic (“come to my site, here’s the information you want!” then “oh, now that you’re here, you can’t see it until you pay for it”) and wastes my time with what is basically an advertising gambit. So if paywalled sites got scraped by AI trainers because they were trying to dupe readers into paying for their content, I’ll not shed a tear if their deception opened the door for others to use their content in ways they might not want.

One aspect you don’t mention, Adam, is the wholesale theft by LLMs of content that is not publicly available without payment.

I have written three popular non-fiction books (on meeting design). Copies of these books have been made available on pirate websites for download. ChatGPT has scraped pirate copies of my books without paying me a penny. I know this because I can ask ChatGPT to summarize a chapter that has never been made publicly available and it will provide a detailed summary that could only have been made if the book had been added to their database. OpenAI refuses to share the sources of its training data, but my experience shows that the company has no problem using stolen paid content. I am just one of thousands of authors whose hard work has been appropriated by OpenAI.

I have also published 800+ blog posts over the last 14 years, and ChatGPT has those too. Like you, I have no problem with this except that ChatGPT’s reformulation of them contains so many inaccuracies and distortions that I have to conclude that anyone who is looking for reliable information on a topic, at least in my areas of expertise, is ill-served by anything that ChatGPT spits out.

This is an excellent example of why it’s naive to brush away lack of source declaration (or claim it has more to do with “manners” than business).

Imagine if an LLM provider like ChatGPT faced regulation that said a) every answer has to specify the source(s) and b) you could then require ChatGPT to scrub their models’ training data of material derived from your book since the source identifies it as illegal.

And before the usual cop-outs about how this can’t be done, let me add this. Right now on our very own campus we’re testing an experimental LLM toolkit by Google that aims at summarizing and synthesizing large bodies of scientific content. Not only will it tell me the exact sources for all its answers, it also allows removing a specific source.

I agree, but I’ll also point out that many “paywalls” are pathetic and are implemented in a way that may not affect a scraper-bot, even if it doesn’t have deliberate circumvention code.

For example, the New York Times allows a small number (3 or 5, I think) of free views before they ask you to log in. They are almost certainly using cookies to track your visits, since anything else would be useless without a login. Scraper software probably doesn’t support cookies at all, or if it does, it probably discards them between fetches, because they would just be unnecessary overhead.
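The reasoning about cookie-based meters can be illustrated with a small simulation. Everything here is hypothetical: serve_article stands in for a metered site, the three-article limit echoes the guess above rather than any publisher’s actual policy, and no real network traffic is involved. A client that keeps its cookies hits the meter; one that discards them between fetches never does.

```python
# Hypothetical meter limit, echoing the guess of "3 or 5" free views.
FREE_ARTICLES = 3


def serve_article(cookies):
    """Hypothetical metered site: returns (page, cookies to set)."""
    views = int(cookies.get("views", "0"))
    if views >= FREE_ARTICLES:
        return "PAYWALL", cookies
    return "FULL ARTICLE", {**cookies, "views": str(views + 1)}


def fetch_articles(count, keep_cookies):
    """Fetch `count` articles as a browser (keeps cookies) or a bare scraper."""
    jar = {}
    pages = []
    for _ in range(count):
        page, new_cookies = serve_article(jar)
        pages.append(page)
        if keep_cookies:
            jar = new_cookies  # a browser stores the cookie and sends it back
        # a cookie-less scraper sends an empty jar every time
    return pages


browser_pages = fetch_articles(5, keep_cookies=True)
scraper_pages = fetch_articles(5, keep_cookies=False)
print(browser_pages)
# ['FULL ARTICLE', 'FULL ARTICLE', 'FULL ARTICLE', 'PAYWALL', 'PAYWALL']
print(scraper_pages.count("FULL ARTICLE"))  # 5
```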

And I’ve seen plenty of “paywalls” where the entire article is downloaded, and the content is then covered with a giant popup asking you to pay. Putting a browser into “reader” mode or disabling scripting is all you need to do to “circumvent” this mechanism. And again, I would not expect a scraper-bot to run scripts unless strictly necessary for retrieving content, and even then, it will probably be configured to run only those absolutely necessary, simply because it would be an unnecessary waste of CPU cycles. And if the content is already downloaded, then the bot has it, whether or not a script might later cover it with a popup.
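Likewise, an overlay-style paywall only hides text that has already been delivered. In this sketch, the HTML page and its makePaywallOverlay script are hypothetical. Python’s standard html.parser reads the markup without executing any JavaScript, so the article paragraphs come out intact while the script never runs.

```python
from html.parser import HTMLParser

# Hypothetical page: the full article is in the HTML, and a script would
# cover it with a paywall overlay only if a browser ran that script.
PAGE = """<html><body>
<article>
  <p>First paragraph of the story.</p>
  <p>Second paragraph of the story.</p>
</article>
<script>document.body.appendChild(makePaywallOverlay());</script>
</body></html>"""


class ParagraphExtractor(HTMLParser):
    """Collects the text inside <p> elements; never executes scripts."""

    def __init__(self):
        super().__init__()
        self.in_paragraph = False
        self.paragraphs = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self.in_paragraph = True
            self.paragraphs.append("")

    def handle_endtag(self, tag):
        if tag == "p":
            self.in_paragraph = False

    def handle_data(self, data):
        # Script contents arrive here too, but outside <p>, so they are ignored.
        if self.in_paragraph:
            self.paragraphs[-1] += data


extractor = ParagraphExtractor()
extractor.feed(PAGE)
extractor.close()
print(extractor.paragraphs)
# ['First paragraph of the story.', 'Second paragraph of the story.']
```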

Actual paywalls, where nobody gets content (or no more than a few lines of teaser content) without a login do exist, but they are far less common than the useless window dressing scripts that I usually see when browsing.

Absolutely true. The difference is that your third-grader’s homework assignment isn’t published as an original research paper. And by the time he grows up to the point where he may be publishing, he has almost certainly been taught about the laws and ethics of plagiarism. (He might still plagiarize, but almost certainly not by mistake.)

Ow. Yes. This is another vector that hasn’t been discussed so far.

These training-scrapers may have to have code to block pirated content and not just blindly follow all links wherever they may go (as a search engine probably should do).

And not just for copyright reasons. Also because pirated content is often corrupted (e.g. software with malware installed) and in ways that a bot probably can’t detect. You really don’t want it producing malicious answers because it trained itself from malicious source material.

InvokeAI just did an interview with a copyright lawyer and it’s really relevant to this discussion.

I’m still listening to the video, but this is the most powerful statement so far regarding fair use and what I think people are actually upset about: “You’re not just producing information about these works [of art, music, and writing] you’re using these works to create a substitute for these works.” In other words, AI models are taking data that provides income or some other value to the data’s original author, and the AI models are creating good enough copies to supplant the original data and the original author no longer gets value from their creative work.

Personally, I think the issue is bigger though, bigger than AI, and that is that what people consider valuable is changing dramatically. In this video, Rick Beato lays out an argument that music is no longer valuable, and not because of AI.

The line between fact and fiction, reality and parody, was so blurred so long ago that I couldn’t get too exercised about those examples. The glue one was at least unprovoked, but when you ask how many rocks you should eat, it’s easy to see getting a snarky response back. It just keeps coming down to having to evaluate everything you read.

Fascinating take from Manton Reece, indeed!

It’s certainly one approach, but I’m not sure it’s one that makes sense outside its specific application. Text doesn’t just exist on its own in the natural world—people have to create it. And in this context, they have to make it available for others to read, which feels like giving permission to me.

I haven’t been following this closely, not being from Australia and being generally allergic to anything associated with Facebook. But based on some quick searches, it sounds like Facebook was only posting headlines with links to the original articles. I realize there were some payments from Meta to content companies, but wouldn’t that generally fall under the implied contract of “you can scrape my content if you send me traffic”?

It does feel different, but Perplexity does cite its sources and link back to them, being more of a search engine than a chatbot, and while I don’t have the exact dates on hand, the chatbots are generally a year or so out of date. So that doesn’t seem like a huge issue at the moment.

An age-old Internet issue!

That is an issue, though it would seem that the fault lies more with the pirate websites than with ChatGPT’s bot, which would have no way of distinguishing a legitimately uploaded book (by the author, or in the public domain) from one like yours that was stolen.

I actually thought about mentioning this because back when we were publishing Take Control, I fought with the pirate websites constantly. From 2010 through 2015 (mostly through 2012), I sent 287 DMCA takedown notices. They nominally worked, but it was complete whack-a-mole. Some of the sites even told me they didn’t actually have the files; they just generated a faux download link based on searches. As annoying as this was, I never had the impression that it actually cut into sales in a real way.

In our case, the content also went out of date fairly quickly, so even if those books were used for training, they wouldn’t have been revealing anything that was still for sale. It sounds like your books might be more evergreen.

The real question, though, is if the fact of your books being in the training model actually affects your sales. Do you have any evidence that people are using ChatGPT and getting your content in such a way that they don’t buy your book? (Is it even possible to know that?) I’m not asking to be annoying—these were the sort of things I tried to figure out for Take Control whenever something I didn’t like happened. In that situation, the answer was always that the effect was minimal and that we had to focus on serving our most loyal readers.

See my points about chatbot responses being C+ work… I’m curious how you prompted ChatGPT to reformulate them and got distortions and inaccuracies. I tried it with my big article on Dark Mode and found that ChatGPT conflated the article text with the comments, thus somewhat confusing the matter until I asked it to separate the two. Interestingly, Perplexity did not make that mistake. Both ChatGPT and Perplexity properly linked to the original article.

But it still feels like an artificial example. If I were an average person wondering about Dark Mode, I wouldn’t be asking about specific articles, and when I asked about Dark Mode in general, I got a pretty balanced view, and ChatGPT was happy to go into more depth on the criticisms.

I always wonder what counts as a substitute, especially one that can compete commercially. And you’re absolutely right about the issue of what people consider to be valuable.

Another pre-AI story. When Apple opened the iBookstore, we published Joe Kissell’s Take Control of iCloud there, and it was a huge hit at $14.99 for several hundred pages. 1500 copies sold in the first month, I seem to remember. Shortly afterward, however, someone published a book about iCloud that was much, much shorter, but also much cheaper—perhaps $1.99. I can’t remember (or find) all the details, but iBookstore sales for Take Control of iCloud tanked and never recovered. The problem was purely price—just as with the App Store, Apple created a marketplace that drove prices to effectively zero, and price was more important to buyers than quality that they presumably couldn’t determine in advance.

But the continued success of the book to the Take Control audience showed that the most important thing is to find the right audience. The iBookstore audience wasn’t right for us.

Not quite on topic.

And I appreciate it, especially the part that enriches me. Thank you.

Obviously, the pirate websites are the first offenders. But Open AI made the unethical choice of scraping content from them! They didn’t need to make that shameful choice. At a minimum, Open AI could have purchased a single copy of each book, as libraries do, giving them at least a (wobbly) leg to stand on.

I don’t see how I (or Open AI) would be able to determine that ChatGPT users obtained content derived from my books, directly leading to lost sales. So I’ll never know. By its actions, Open AI is essentially saying, “You can’t prove that any harm was done to you by our stealing your content, so it was OK to steal it.”

It’s easy. Just now, I asked ChatGPT: “Tell me ten interesting things about meeting design from the writings of Adrian Segar”. Two of the ten responses are nonsense. A novice won’t know that two are fantasy and eight are decent information.

When I asked ChatGPT: “Tell me ten fun facts about Adrian Segar”, one of its responses was that I was an expert juggler. Who knows how it made that up. I can’t even keep three balls in the air!

In general, if you want to see how deceptive the answers that ChatGPT provides can be, ask it for information about something you know a lot about. IME, garbage will be mixed in.

But that’s precisely the point. The masses cheering on ChatGPT et al. are people who have seen it give nice-looking responses to questions about matters they are entirely clueless about, and who are hence easily impressed by trustworthy, confident-sounding answers that come in fancy bullet lists (heck, even people on this board have started emulating that style). Those of us who are deeply knowledgeable about a certain topic, and who have seen the kind of garbage some of these tools render on that topic (just like social media before), are well aware of the misinformation, the poor SNR, and the ruse. But fat chance of some boring old librarian-type expert getting the plebs lusting for the “next big thing” to take a more critical stance. I’d be tempted to say, so let them run off that cliff. Except I’m then quickly reminded that they’re not just sealing their fate, they’re sealing mine too, just as the Jan 6 coup attempt tried to destroy the oldest democracy not just for FB drones and those who spend half their life on “social media”, but for everybody. Still, what else can you do but point out at every step that we need to take a far more critical stance than just crossing our fingers and toes and hoping we’ll reap only the good?

Ultimately, the takeaway is what I’ve been saying for years: The biggest danger from AI is the fact that people trust it.

Really liked your article, Adam, especially the section “It’s called the open Web for a reason.” If you put something on the open web, you can hope that people will use the material in a respectful way, knowing that some people won’t, and that many people’s understanding of what respectful means will differ from yours anyway. I agree: if you’re not comfortable with that arrangement, then you shouldn’t publish on the open web.

I think that, in addition to trusting the output of AI products, anthropomorphizing them is another problem. Many people tend to project human qualities (e.g., cleverness, mannerisms) onto such tools, which is understandable, especially since they mimic human responses so well; companies have played to this, labelling them as intelligent assistants, etc. While that makes AI tools more accessible, it increases the risk of fallacious analogies, exaggeration of AI tools’ capabilities and dangers, and general misunderstanding of what these tools are.

As a message board participant on TB and on other public sites (as opposed to a professional content creator), my main concerns about AI scraping are:

I’ve always assumed that anything I post on Internet message boards would be crawled by search engines and made available in searches. But for me, having entire discussion forums hoovered up, archived, and repeatedly used to train black-box AI processes goes way beyond that.

When I dealt with these sites during the Take Control days, it wasn’t easy to say they were obviously pirate sites. More generally, they were “file sharing” sites that allowed anything to be uploaded and then responded to DMCA takedowns for copyrighted content. So lots of what they held was probably legitimate, and I’m not sure there would be an easy algorithmic way to teach a crawler to avoid them. It seems obvious that most of the AI companies don’t really care either, but that’s not to suggest that filtering those sites out would have been easy or even possible.

Ah, that wasn’t what I meant, but I was making some assumptions based on Take Control that probably aren’t true for you. I agree that OpenAI can’t know anything about your sales or what users are generating based on your books. When we were doing Take Control, we had complete insight into all sales channels in real time, so we could tell when sales went up or down in response to some action or environmental change. Plus, because we had direct communication with all purchasers, we could make sure that they knew certain things. I think I can count on one hand the number of times we heard from someone who had downloaded a book illegally and was confused about something, whereas legitimate purchasers wrote in all the time. (That’s across 500K sales over 14 years.) So while I can’t prove that online file-sharing sites didn’t hurt sales, I just don’t think they did. In contrast, per my story about the iBookstore, I can prove that Apple driving book prices to near zero hurt sales badly.

Right. But a novice would stop at “Tell me ten interesting things about meeting design” because they won’t know you’re an expert in the field. I’m a novice (though one who runs regular meetings), and when I fed ChatGPT that prompt, what came back was entirely reasonable. Feeding it the prompt that mentioned you did indeed return much different stuff, but since I know nothing about your writings, I couldn’t evaluate its accuracy. I pushed it a bit, and while I learned a bit about fishbowl conversations, for example, I didn’t come away thinking that I knew enough to hold one, and in fact, if I were going to try one, I’d want to learn more about it in ways that didn’t require me to keep asking questions. And when I asked for books that would help, it did suggest one of yours.

This gets back to my point about generative AI chatbots doing C+ work. It’s usually not completely wrong, though it usually lacks in detail and nuance, some of which can be recovered by continuing the discussion. Not everyone wants, has access to, or can afford expert information on every topic at all times. If I’m looking for advice on dissuading bats from roosting in my soffit vents, the basics are fine—I don’t want a treatise, and it’s a lot easier to read a summary than try to figure out the agenda behind all the websites trying to sell me a solution.

Yes, that’s undoubtedly happening—there’s very little granularity with respect to specific permissions, and what does exist is only at the site level with robots.txt or authentication requirements. I could easily lock down this site so only people with accounts could read it, keeping all the bots out, but I believe that information should be shared.
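The robots.txt mechanism mentioned above matches rules against a crawler’s self-reported name, which is a big part of why the controls are so coarse. Here is a minimal sketch, using Python’s standard-library `urllib.robotparser`, of how a well-behaved crawler would read a hypothetical robots.txt that opts out of AI training while leaving search indexing alone. The file contents and the example.com URL are assumptions for illustration; the user-agent tokens (GPTBot, CCBot, Google-Extended, Applebot-Extended) are ones their operators have published, but that list changes over time and isn’t complete.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block a few AI-training user-agent tokens
# site-wide, and allow every other crawler (e.g., search engines).
# The token list is illustrative only and will go stale.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: CCBot
User-agent: Google-Extended
User-agent: Applebot-Extended
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def check(parser: RobotFileParser, agents: list[str], url: str) -> dict[str, bool]:
    """Report whether each user-agent token may fetch the given URL."""
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    url = "https://example.com/article/12345"  # hypothetical page
    agents = ["GPTBot", "Applebot-Extended", "Googlebot", "Applebot"]
    for agent, allowed in check(rp, agents, url).items():
        print(f"{agent:18} {'allowed' if allowed else 'blocked'}")
```

Two caveats. Google-Extended and Applebot-Extended are control tokens honored by Googlebot and Applebot rather than separate crawlers, so disallowing them opts content out of those companies’ AI model training without removing the site from search. And robots.txt is purely advisory: it asks crawlers to stay away, but nothing enforces compliance.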

Since it’s without our knowledge, it’s not something we can answer. But see above. If you’re worried about being tracked, you shouldn’t post on the open Web. The old saw about how you shouldn’t put anything in email that you don’t want to end up on the front page of the New York Times is even more true with posting on the open Web.

(Just FYI, Bard has been renamed to Gemini, and Microsoft licenses ChatGPT from OpenAI.)

It occurs to me that all this fuss about generative AI is like a talking dog. Everyone is so amazed that the dog can talk, no one is bothering to ask if the dog actually has anything valuable to say!

On the other hand, the same could be said for file-sharing networks like Gnutella and Napster, which were really just glorified search engines providing links to content hosted by millions of individuals worldwide.

But that didn’t protect them from prosecution, resulting in a shutdown of any servers (for centralized protocols) and termination of nearly all client software projects (for decentralized protocols).

Of course, the prosecution didn’t stop anything. These file-sharing networks still exist, and many protocols (like BitTorrent) are still really popular. What ended the massive free-for-all of piracy was the existence of cheap stores and streaming services, for which Apple broke the ice with the iTunes Music Store.

That’s the problem—the dog does have something to say when prompted appropriately. It may not be Mr. Peabody, but even the basic chatbots are useful right now, and generative AI overall has incredible promise. Exactly what the chatbots are useful for varies widely by person, which works against blanket statements.

There’s a blast from our apparently shared childhood past!