Behind the iPhone 7 Plus’s Portrait Mode

The soft-focus Portrait mode for the iPhone 7 Plus that Apple promised for later this year has arrived with the developer release of iOS 10.1. A public beta will follow soon. This new mode makes use of both lenses in the iPhone 7 Plus to identify objects, calculate layers of depth, and then silhouette the closest layer and render the rest out of focus. (For more on the iPhone 7 camera changes, see “iPhone 7 and 7 Plus Say ‘Hit the Road, Jack,’” 7 September 2016.)

Portrait mode appears alongside modes like Pano and Slo-Mo in the Camera app, but during the beta, the first time you select it, an explanation appears with a Try the Beta link to tap. After that, it’s just another option. (TidBITS typically doesn’t report on developer betas, but Apple allowed some publications to test and write up the Portrait feature, making it fair game.)

This soft-focus portrait approach combines something called “bokeh” (pronounced “boh-keh”), a borrowed Japanese word that describes a deeply out of focus part of an image, with a close, shallow depth of field — the portion of an image in focus. This approach mimics how our eyes process a person or object seen up close, and adds a kind of visual snap that can be beautiful or gimmicky, depending on the composition. (If you don’t own an iPhone 7 Plus, or if you do and can’t wait for Portrait mode, there are existing apps to simulate bokeh — see “FunBITS: How Out-of-Focus Photos Can Be Works of Art,” 28 February 2014.)

Bokeh for portraiture is easiest to obtain with a telephoto lens paired with a mirrorless or DSLR camera, and hardest with wide-angle fixed focal-length lenses, like those on smartphones. Apple’s simulation tries to create the palpable sense of an expensively captured image with the iPhone 7 Plus’s tiny-lensed camera system, which includes a 4mm wide-angle (28mm equivalent at 35mm) and a 6.6mm “telephoto” (56mm equivalent) lens. (Apple is technically accurate in calling the 6.6mm lens a telephoto, but most photographers consider lenses 70mm or longer to be telephoto.)

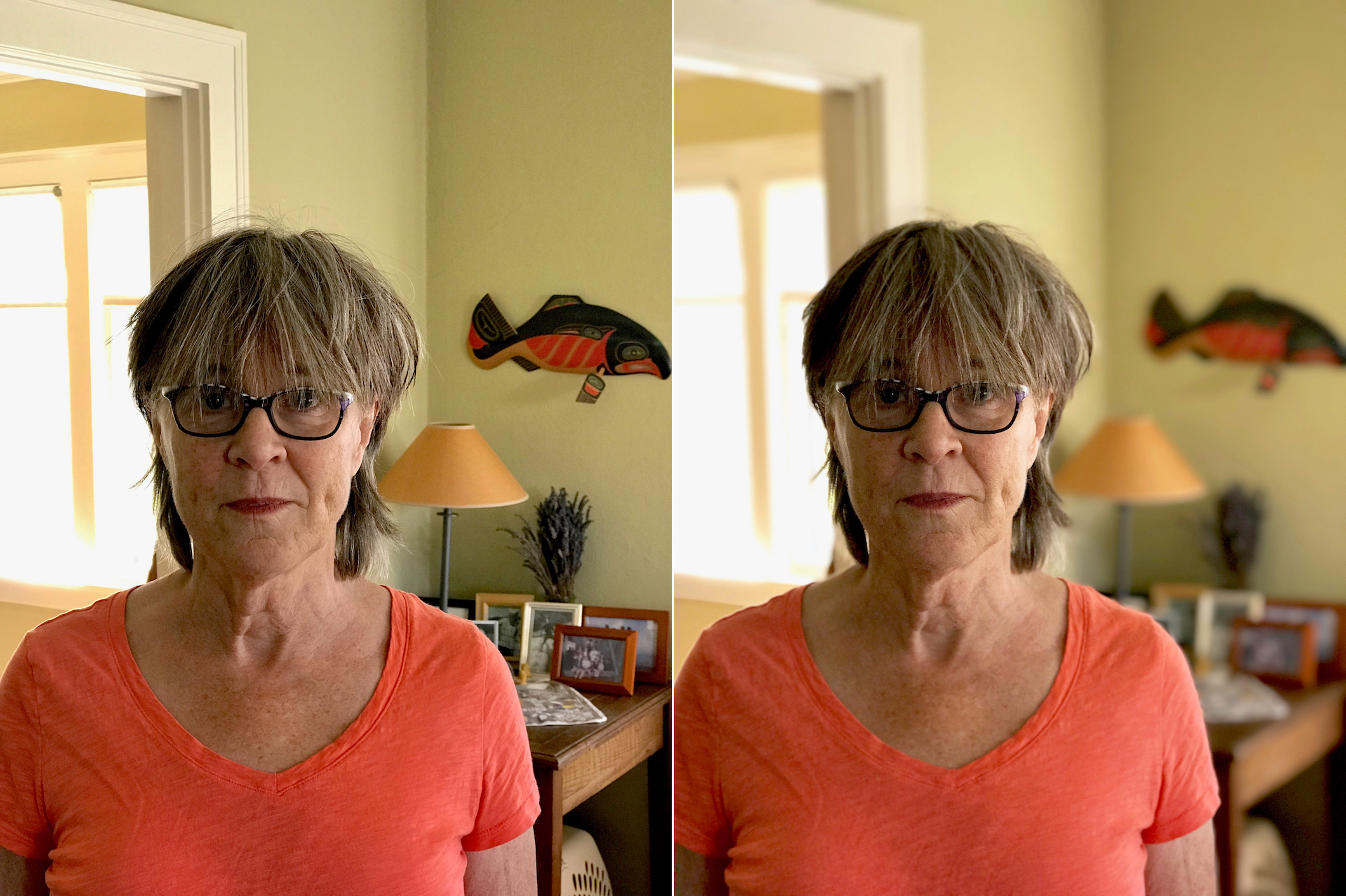

Apple’s new Portrait mode is pretty good, even in beta. In my brief testing, and in looking at photos others have posted, it works better with people and animals than objects. That makes sense, because objects inside the images have to be recognized, and Apple clearly optimized the mode for what it put on the label: portraits.

The Camera app provides useful cues when you’re setting up the shot. If you’re too close (within 1 foot) or too far away (more than 8 feet), an onscreen label tells you to move. It also warns you if there’s not enough light for the shot, as the telephoto lens is just ƒ/2.8, a relatively small aperture for a lens that tiny, while the wide-angle lens is ƒ/1.8. Like high-dynamic range (HDR) shots, Portrait mode saves both the unaltered image and the computed one into the Photos app, so you don’t lose a shot if the soft-focus effect fails.
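To put those apertures in perspective for background blur, a common rule of thumb multiplies the ƒ-number by the crop factor (the ratio of a lens’s 35mm-equivalent focal length to its actual focal length) to estimate the full-frame aperture that would give similar depth of field. The Python sketch below applies that rule to the focal lengths and apertures quoted in this article. The function names are illustrative, and the rule is an approximation, not anything Apple has published.

```python
def crop_factor(actual_focal_mm, equivalent_focal_mm):
    """Ratio of the 35mm-equivalent focal length to the real focal length."""
    return equivalent_focal_mm / actual_focal_mm


def equivalent_f_number(f_number, crop):
    """Approximate full-frame f-number with similar depth of field.

    Rule-of-thumb assumption: depth of field scales with the crop factor.
    """
    return f_number * crop


# Figures quoted in the article for the iPhone 7 Plus.
lenses = {
    "wide-angle": {"focal_mm": 4.0, "equiv_mm": 28.0, "f_number": 1.8},
    "telephoto": {"focal_mm": 6.6, "equiv_mm": 56.0, "f_number": 2.8},
}

for name, lens in lenses.items():
    crop = crop_factor(lens["focal_mm"], lens["equiv_mm"])
    equiv = equivalent_f_number(lens["f_number"], crop)
    print(f"{name}: crop factor {crop:.1f}, roughly f/{equiv:.1f} equivalent")
```

By this estimate, the wide-angle lens behaves roughly like ƒ/12.6 on a full-frame camera and the telephoto like about ƒ/24, which helps explain why optical background blur is so hard to come by on a smartphone.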

The math behind Portrait mode is cool. You don’t need two identical lenses to calculate depth. You just need lenses where the software knows the precise characteristics of each, such as their angle relative to each other and the kind of lens distortions.

The depth-finding software compares photos from each lens and identifies common features across the image using Apple’s increasingly deep machine-learning capabilities. It uses those common features to compare points between the two images, adjusted for what it knows about the cameras, enabling it to approximate distance. Because the depth measurements aren’t exact, the stereoscopic software divides features into a series of planes, rather than placing each feature at a precise distance. These planes contain the outlined edges of every element in the scene. (For more, consult this very readable technical summary of the approach from 2012.)
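The core geometry can be sketched in a few lines of Python. This is a minimal illustration, not Apple’s implementation. It assumes the two images have already been rectified to a common scale (the iPhone’s two lenses have different focal lengths, so real software must correct for that first), and the focal length in pixels, the lens spacing, and the plane boundaries are all made-up values.

```python
import bisect


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Classic stereo relation: depth = focal length * baseline / disparity.

    disparity_px is how far a matched feature shifts between the two
    rectified images; nearer objects shift more.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


def assign_plane(depth_m, plane_edges_m):
    """Return the index of the depth plane a feature falls into.

    plane_edges_m is a sorted list of boundaries in meters; index 0 is
    the nearest plane, and len(plane_edges_m) is the farthest.
    """
    return bisect.bisect_right(plane_edges_m, depth_m)


# Hypothetical camera parameters and plane boundaries.
FOCAL_PX = 3000.0      # focal length expressed in pixels (assumed)
BASELINE_M = 0.01      # spacing between the two lenses (assumed)
PLANE_EDGES_M = [0.5, 1.0, 2.0, 4.0]

for disparity in (80.0, 20.0, 5.0):
    depth = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity)
    plane = assign_plane(depth, PLANE_EDGES_M)
    print(f"disparity {disparity:5.1f} px: about {depth:.2f} m, plane {plane}")
```

Grouping features into a handful of planes, rather than trusting each computed distance, keeps small disparity errors from producing visibly different amounts of blur on neighboring parts of the same object.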

A two-camera bokeh feature has appeared previously in Android smartphones, so this isn’t brand-new technology. But images from the earlier two-camera smartphones show much more variability than what I see from my testing and early published examples. A more advanced state of machine object recognition and the combination of the iPhone 7 Plus’s image signal processor and super-fast A10 processor enable it to preview the effect accurately and then capture it instantly.

Even outside of Portrait mode, the iPhone 7 Plus already performs some tricks in combining shots between its wide-angle and so-called telephoto lenses to produce a single image. This fused image is synthetic, but not artificial: it doesn’t add detail, but it combines aspects of each separately, simultaneously captured image. I’ve found that the iPhone uses this approach primarily in good lighting conditions, where the telephoto captures the scene and the wide-angle lens adds luminance information. The larger aperture of the wide-angle lens lets it capture detail better in darker areas and reduce the speckling caused by the telephoto lens’s image sensor not receiving enough light. (This is the same reason you’re warned about insufficient light when composing a Portrait photo.)

These combinations and the Portrait mode all fall into an evolving field known as computational photography. HDR images are the best-known example, combining multiple successive shots at different exposures into one image with a sometimes supernatural-looking tonal range. The Light L16 camera is an extreme example of what’s possible: when it ships, it will have sixteen lenses across three focal lengths to create huge, high-quality, high-resolution images. You may also have heard of Lytro, which made consumer and pro cameras that used image sensors to calculate the angle of incoming light rays to allow refocusing an image after a picture was taken. That approach was a little too limited and wacky, and the company discontinued these cameras. (It’s now focused on virtual reality hardware.)
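For a feel of how HDR-style merging can work, here is a deliberately simplified Python sketch of exposure fusion. It is one of several ways to combine bracketed shots, not a description of what the iPhone does. It treats each image as a flat list of grayscale values between 0 and 1, and it weights each pixel by how close it is to mid-gray. The function names and the `sigma` value are assumptions chosen for illustration.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Weight in (0, 1]: highest at mid-gray (0.5), lowest near 0 or 1."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(exposures):
    """Blend equally sized grayscale images, pixel by pixel.

    Each image is a list of floats in [0, 1]. Pixels that are neither
    crushed to black nor blown out to white contribute the most.
    """
    fused = []
    for pixels in zip(*exposures):
        weights = [well_exposedness(p) for p in pixels]
        total = sum(weights)  # always positive because exp() > 0
        fused.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return fused


# Two tiny made-up "photos" of the same scene: one dark, one bright.
underexposed = [0.05, 0.50]
overexposed = [0.50, 0.95]
print(fuse_exposures([underexposed, overexposed]))
```

In each position, the fused value lands close to whichever shot captured that pixel with a usable mid-tone, which is the basic idea behind pulling shadow and highlight detail into a single image.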

For the moment, Apple isn’t making this depth-finding output available to third-party developers, who also can’t access both cameras at once as separate streams to do their own processing. Third-party apps can grab the image sensor data as a raw Digital Negative format file, and several have already been updated for this. But raw files can be captured from only one lens — if a developer wants to use both, Apple provides a fused JPEG. (Raw image support is available only on the iPhone 6s, 6s Plus, SE, 7, and 7 Plus, and the 9.7-inch iPad Pro.)

Apple’s Portrait mode is just the first computational method I expect we’ll see with the iPhone 7 Plus, since there is so much more you can do with the ability to compute depth in real time, from using the iPhone as an input for motion capture or gaming control to 3D scanning of objects. And, if developers are lucky, Apple will open up some dual-stream or dual-image capture options that could result in a blossoming of even more new ideas.

A clear (←no pun intended) explanation. Thanks!

(Demolishes his 400-year-old camera obscura out of sheer envy…)

a few clarifications:

Bokeh refers to the out of focus areas in an image, not the concept of shallow depth of field (DOF). There's good and bad (nisen) bokeh, and it all comes down to the optical characteristics of the lens in question. This fake bokeh is not really bokeh per se, but just blurring based on an algorithm (you can easily do the same thing in Photoshop).

Generating images with visual bokeh usually involves a longer than normal focal length and relatively wide aperture to limit DOF. Telephoto / SLR / mirrorless doesn't really have an effect per se, except that it's easier to get shallow DOF on larger sensors. (You should see an f/2.8 lens on a 4x5" camera... ;-)

Although the iPhone lenses 'appear' to be wide aperture (e.g. 'fast' - 1.8 - f/2.8) they are not wide aperture at all, compared to their focal length and the sensor size. Their 'effective' aperture (for purposes of bokeh) is probably more like f/256 or something, when sensor size is taken into account.

Good eye (no pun intended). I elided two different things, and we’ve updated the article. In common usage, bokeh is often used to refer to the whole photo that contains the effect, but that’s less crisp than defining the term.

“It’s easier to get shallow DOF…”: Precisely my point!

And, yes, Apple is being accurate, but it’s a weird thing to call the apertures large when they’re really small, but there’s no better way to do apples-to-apples (Apples-to-Apples?) comparisons.

also, what's being described here is not a 'soft focus' effect. It's fake bokeh. Soft focus describes the optical properties of a lens that has deliberate aberrations to render both sharp and soft focus images at the same time. E.g. some older lenses were designed this way, for example: the Imagon series, various large-format lenses like Verito, Cooke Knucklers, certain Petzval lenses (though not the modern reproductions), etc.

Additionally, re: telephoto - people are confusing the difference between a longer-than-normal focal length lens, and what 'telephoto' actually means. Telephoto actually means that the physical length of the optical path is shorter than the focal length of the lens. It's done optically, to shorten the lens so a 300mm lens doesn't necessarily have to be 12" long physically. Any lens can be telephoto - it's the optical design principle that determines that, not the focal length itself. Normally, most longer lenses these days are a telephoto design,...

(telephoto continued)... but they don't have to be - most large format lenses, regardless of focal length, are rarely telephoto designs (for various reasons), even though they can be 1200mm or longer in focal length.

So, the iPhone 56mm is probably a tele design (to save space = shorter physical length), even though it's very close to a 'normal' focal length in angle-of-view. (probably about a 45-50 degree angle of view, I'd guess)

re: 70mm+ being considered telephoto - what you really mean is 70+mm is usually what people consider when they think of a longer-than-normal focal length lens. (e.g. a portrait lens).