Macs Make the Move to ARM with Apple Silicon

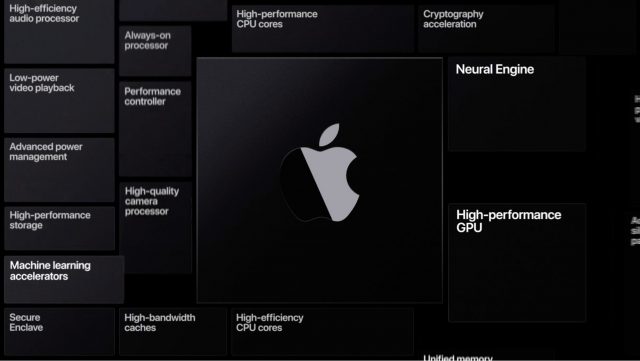

In the WWDC 2020 keynote, Apple finally put the rumors of a Mac processor transition to bed with the announcement that indeed, Apple is designing ARM-based chips expressly for future Macs. As expected, Apple explained the move as a way to get the highest performance per watt, support Apple’s custom technologies, and have a common architecture across all Apple products.

The company has been designing its own custom chips since the initial release of the iPhone, and in 2010, launched the A series with the A4 in the iPhone 4 and original iPad. With the release of the third-generation iPad, Apple introduced the first iPad-specific chip, the A5X, to pair with the iPhone 4S’s A5. Since then, Apple has put the A#X chips in iPad models designed for high-performance tasks.

It’s possible that Apple telegraphed the transition to the Mac on nomenclature alone, given that the A12 Bionic that debuted with the iPhone XS was then bumped to the A12X for 2018’s iPad Pro models, and later superseded by the A12Z Bionic for 2020’s iPad Pro models. Apple is now using that A12Z chip in the Mac mini-based Developer Transition Kit (DTK) hardware that developers can use to develop and test their apps, so it clearly has sufficient performance to run macOS and demanding apps.

Compatibility

The primary stress of a processor transition revolves around compatibility. Developers don’t want to have to write and distribute two completely different apps, and users want to keep using their existing apps to the extent possible. Unsurprisingly, Apple attempted to set those worries to rest, although the devil is always in the details, which won’t be known until developers dig in on the DTK hardware.

First, Apple took pains to note that all its macOS apps have been rewritten to be native to Apple silicon. That includes pro apps like Final Cut Pro and Logic Pro. App performance during the keynote demos was entirely fine, and the company only acknowledged during the final segment of the keynote that the earlier parts had been running on a Mac using Apple silicon, likely the A12Z-powered DTK hardware.

WWDC is, of course, a developer conference, and Apple said that its Xcode development environment contained everything developers would need to recompile their apps for Apple silicon. The specifics will vary by app, but Apple claimed that many developers could get their apps running in a few days. Testing may take quite a bit longer, though, since it will have to happen on both platforms. As happened in previous processor transitions, Apple seeded some key developers ahead of time and was thus able to show the Microsoft Office apps (Word, Excel, and PowerPoint) and Adobe Photoshop and Lightroom running as native apps.

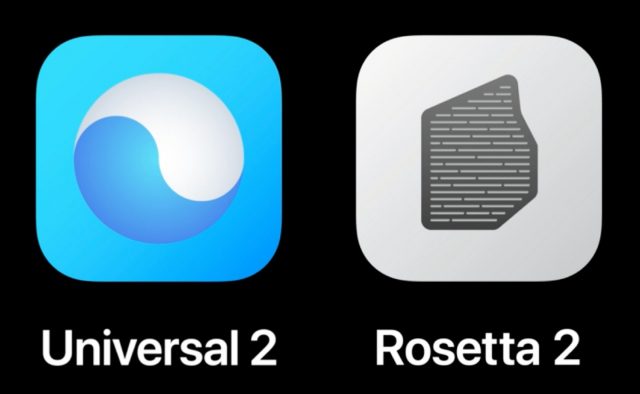

Separate apps for Intel and Apple processors make no sense, so Apple has come up with Universal 2, a way of combining both sets of code for an app into a single file. It was a little surprising that Apple reused the terminology from the Intel transition, when a “universal” app contained both PowerPC and Intel code. Jim Rea, developer of Panorama X, tells us that size is unlikely to be an issue with universal apps since the machine code that differs between platforms is usually a minor contributor to the overall size of a modern app, particularly when compared to images, menus, help documents, and so on. Nonetheless, we suspect you won’t be able to switch a boot drive between an Intel-based Mac and a Mac using Apple silicon seamlessly, or perhaps at all.
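
To make the “single file” idea concrete, here is a minimal sketch in Python (not anything Apple showed) that reads the header of a universal, or “fat,” Mach-O file and lists the architectures bundled inside. The function name `list_architectures` is made up for illustration, and the sketch assumes the classic 32-bit fat header layout: a big-endian magic number and slice count, followed by one 20-byte record per architecture, using the CPU type constants for x86_64 and arm64 from Apple’s Mach-O headers.

```python
import struct

# Assumed constants from Apple's Mach-O headers (32-bit fat header variant).
FAT_MAGIC = 0xCAFEBABE
CPU_NAMES = {
    0x01000007: "x86_64",  # Intel, 64-bit
    0x0100000C: "arm64",   # Apple silicon
}
HEADER_SIZE = 8    # magic + number of slices, both big-endian uint32
RECORD_SIZE = 20   # cputype, cpusubtype, offset, size, align


def list_architectures(data: bytes) -> list:
    """Return the architecture names found in a fat header.

    Returns an empty list when the bytes do not start with FAT_MAGIC,
    for example a single-architecture (thin) binary. Caveat: Java class
    files share the same magic number, so a real tool needs extra checks.
    """
    if len(data) < HEADER_SIZE:
        return []
    magic, count = struct.unpack(">II", data[:HEADER_SIZE])
    if magic != FAT_MAGIC:
        return []

    names = []
    for index in range(count):
        start = HEADER_SIZE + index * RECORD_SIZE
        record = data[start:start + RECORD_SIZE]
        if len(record) < RECORD_SIZE:
            break  # truncated header; stop rather than guess
        cputype, _subtype, _offset, _size, _align = struct.unpack(">IIIII", record)
        names.append(CPU_NAMES.get(cputype, hex(cputype)))
    return names


if __name__ == "__main__":
    # Synthetic header describing two slices, one per architecture.
    sample = struct.pack(">II", FAT_MAGIC, 2)
    sample += struct.pack(">IIIII", 0x01000007, 3, 16384, 1000, 14)
    sample += struct.pack(">IIIII", 0x0100000C, 0, 32768, 2000, 14)
    print(list_architectures(sample))
```

On a Mac with the developer tools installed, `lipo -info` reports the same information. The sketch is only meant to show that a universal app is ordinary per-architecture code sitting behind a small table of contents, which is consistent with Rea’s point that the duplicated machine code adds relatively little.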

What about existing apps? In the Intel transition, those were supported by the Rosetta translation environment, which translated the PowerPC code in older apps to run on Intel-based Macs. It shipped with the first Intel-based Macs in Mac OS X 10.4 Tiger in early 2006 and remained part of the operating system until 10.7 Lion shipped in mid-2011, over five years.

In the same vein as Universal 2, Apple said that Rosetta 2, shipping with macOS Big Sur later this year, would enable existing Intel-based apps to run on Macs with Apple silicon. Rosetta 2 automatically translates existing apps when you install them so you’re not taking a performance hit on every launch. Some apps rely on just-in-time code, though, and for those, Rosetta 2 does dynamic translation on the fly. Apple said that Rosetta 2 would be completely transparent to users—you shouldn’t notice it running at all.
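
For developers who want to check whether Rosetta 2 really is that transparent, a process can ask the system whether it is being translated. The sketch below, in Python, assumes the `sysctl.proc_translated` key that Apple documents for Rosetta (1 for a translated process, 0 for a native one, and an error on systems where the key doesn’t exist). The function names and the injectable `run` parameter are illustrative and not part of any Apple API.

```python
import subprocess


def parse_proc_translated(raw: str) -> str:
    """Interpret the text printed by `sysctl -n sysctl.proc_translated`."""
    value = raw.strip()
    if value == "1":
        return "translated"  # running under Rosetta 2
    if value == "0":
        return "native"      # running natively
    return "unknown"         # unexpected or empty output


def rosetta_status(run=subprocess.run) -> str:
    """Ask the system whether the current process tree is translated.

    `run` is injectable so the logic can be exercised off a Mac. Any
    failure (missing tool, missing key, non-zero exit) maps to "unknown".
    """
    try:
        result = run(
            ["sysctl", "-n", "sysctl.proc_translated"],
            capture_output=True,
            text=True,
        )
    except OSError:
        return "unknown"
    if result.returncode != 0:
        return "unknown"
    return parse_proc_translated(result.stdout)


if __name__ == "__main__":
    print(rosetta_status())
```

An “unknown” result is deliberately not treated as “native,” since older versions of macOS and other operating systems have no such key.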

We hope that’s true. Translation environments always come with two tradeoffs: compatibility and performance. With regard to compatibility, although Apple implies that Rosetta will support all existing apps, that’s undoubtedly only 64-bit apps that already run in macOS 10.15 Catalina. Lower-level software, like kernel extensions and drivers, is less likely to be supported, but we won’t know until people with the DTK hardware start reporting back.

Performance, Apple said, was “amazing.” You’d expect Apple executives to say nothing else, and the demos of Autodesk’s 3D modeling app Maya and the Shadow of the Tomb Raider game both ran well enough, though it was hard to be sure given the limitations of streaming. Will that carry over to all apps? And how will that performance compare to running on an equivalent Intel-based Mac? For those answers, we’ll have to wait a bit.

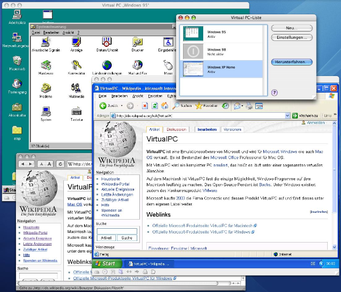

What about virtualization? Apple’s executives made sure to say that Macs with Apple silicon would support virtualization and went so far as to show Parallels Desktop running Linux in a window. Even though they mentioned Linux a couple of times, often in the context of developers (it is WWDC, after all), they said absolutely nothing about Boot Camp or virtualizing Microsoft Windows. There is a version of Windows for ARM, and we fully expect Parallels and VMware to support it, but it comes with a long list of restrictions.

But wait, there’s one more thing, and I mean that in the full Steve Jobs sense of the phrase. The beauty of that A12Z chip in the DTK hardware is that it’s exactly the same chip that powers the current iPad Pro models. And thus, all iPhone and iPad apps will be able to run natively on a Mac with Apple silicon. That was a quick comment during the keynote, but I think it could be a huge selling point. Lots of iOS and iPadOS apps don’t make sense on the Mac, but plenty do. Apple showed them each in their own window, just like little Mac apps, but I could imagine a utility that would let you put them in the Mac’s menu bar, accessible with a single click. Hmm…

Timeline

When can you expect to buy a Mac with Apple silicon and see how it runs your apps? Apple said that the first such Mac would ship by the end of 2020 but gave no details as to where in the product line it would fit.

Speculation has suggested it would be a lower-end laptop, but some of the things Apple was demoing on the A12Z-based DTK hardware during the keynote (and on a 6K Pro Display XDR) were more on the pro side of the equation. So it’s possible that Apple would roll out the DTK Mac mini as the official Mac mini with Apple silicon. Might Apple take the opportunity to come up with some new names? We’ll see, likely in mid-December, if Apple’s past promises of “by the end of the year” hold true again.

The overall transition, Apple said, would take about two years. By that, we suspect the company means, “the point at which all current Macs in the lineup are based on Apple silicon.” During the keynote, Apple executives took pains to emphasize that the company will continue to support and release new versions of macOS for Intel-based Macs “for years to come.” Plus, Apple has “exciting new Intel-based Macs in development.” So there’s no reason to avoid buying an Intel-based Mac now if it’s likely to meet your needs for the next 3–5 years.

That timeframe is a bit longer than the switch from PowerPC to Intel. Steve Jobs announced that at WWDC in 2005 (see “Apple to Transition to Intel Processors,” 6 June 2005) and he introduced a new iMac and MacBook Pro with Intel processors at Macworld Expo the following year (see “Intel-Based iMac and MacBook Pro Ship Earlier than Expected,” 16 January 2006). If I remember correctly, all new Macs in Apple’s lineup were using Intel processors by the end of 2006.

The Mac Pro and iMac Pro may take longer to transition to Apple silicon because they sell in small quantities and because it’s more important for Apple to transition the core of the product line first.

A Good Move?

Is this a good move on Apple’s part? Absolutely. It undoubtedly won’t be as seamless a transition as Apple implied during the keynote, and I’m sure the people who complain about everything Apple does won’t be happy because they never are.

But technology never stands still, and as David Shayer outlined recently in “The Case for ARM-Based Macs” (9 June 2020), transitioning the Mac to Apple silicon gives the company higher margins and more control over its supply chain. Simultaneously, it should give us users Macs with higher performance and significantly more capabilities, thanks to the support for all iPhone and iPad apps.

Wow. Lot of cool stuff coming down the pike. This looks pretty cool.

I am of course disappointed that there was not so much as a speed bump for the 2019 27-inch iMac. As discussed in another post, I need to push my late-2012 iMac off to the side to gain much-needed rendering power and speed for Final Cut Pro and Compressor. It would have been great to see one of those interim Intel iMacs announced for this keynote, but it wasn’t to be.

So: I’ll order a 2019 iMac with the best GPU, add my own memory kit, and be happy. The old iMac is going to end up on my spouse’s desk, still sitting on the network. It will power everything from a Cricut cutting machine to taking on distributed Compressor jobs when I really really need the power.

And when the A-series chips and the Rosetta 2 stuff get sorted out, I’ll be just about done with my current job and ready to retire! (That last part: not really.) But I plan to do with this one what I’ve always done with Macs: work them way harder than they were ever intended to, and get the absolute best performance and creative output from them. I did that with my first Macintosh SE, which turned out to be a very capable page-setting system once I added a Mobius full-page display and card to it, along with a 30 MB SCSI drive. Oh, and a LaserWriter Plus that I bought used for something like $5,000 in 1989, and eventually replaced its motherboard with an accelerated one that also bumped the resolution up to 300x600 dpi. It could turn out a complicated PostScript page in less than a minute!

Thanks for the rundown, @ace!

There were no hardware announcements at all, but at the end Tim did say that several new Intel-based machines would be announced (by the end of the year?) and that Intel support would continue for some time. Since the laptop line was recently refreshed, that may mean new Intel iMacs are on the way.

My personal practice is to skip buying the first generation of devices with significant new hardware. There are usually significant changes to the 2nd generation that fix the shortcomings that become apparent after more intense use in the field. So, in your position, if replacement isn’t absolutely critical, I would wait a few months to see if there is a last iMac Intel upgrade or if the 2019 one is it. I would jump on whichever one is the last one (and I may take my own advice to replace my 2015 iMac so that I’m port-consistent between my laptop and desktop).

These will probably be the last Intel machines as the first Apple Silicon (AS) Macintosh computers are supposed to be released by year’s end. For the Keynote, they were using a Mac Pro that had an A12Z chip in it, so probably the first AS Macs will be Mac Pro & iMac Pro.

Rumours about announcements of hardware at WWDC happen every year. They are almost never true unless Apple wants to talk about software for unreleased products, like the ARM-based Macs. Something may be happening with iMacs, though: delivery for me is 2 weeks for a standard iMac, up from the usual 3-4 days.

My only hope is that they will allow Rosetta and the Universal 2 binaries to be used for a longer time than they did for the last transition from PowerPC to Intel, especially since Apple said it would continue to make Intel-based Macs for some time to come. I just finished purchasing a lot of new software in the last few months to replace those that were not 64-bit compatible so I could move to Catalina on a brand new MacBook Pro - and I really don’t relish having to buy all new software again for this transition! If I can go 3-5 years with everything I have now fully updated on this new laptop, I’ll be happy.

They said they would support Intel Macs for a long time. With the first AS Macs to be released by the end of this year and a fixed 2 year transition period, I expect that any Intel Macs released in 2021 & 2022 will just be “speed bump” machines.

Rosetta lasted just over 5 years. According to Wikipedia, it was first enabled in Mac OS X 10.4, released in January 2006, and died with the release of Mac OS X 10.7 in July 2011. I believe that Rosetta partially depended on non-Apple-owned intellectual property, and the inability to renegotiate those licenses helped dictate its termination. I’d be curious if Apple has complete control over the IP for Rosetta 2 so that those problems wouldn’t arise here; then the decision to stop supporting it and the applications depending on it would reside solely with Apple.

You write in the article, “So there’s no reason to avoid buying an Intel-based Mac now if it’s likely to meet your needs for the next 3–5 years.” But isn’t there? The Intel-based Macs won’t run all the iOS/iPadOS apps will they?

It sounds like the current Macs are going to be obsolete quicker than usual.

I’ve been wondering if I should upgrade my MacBook Pro late 2013 and decided to wait and see what was announced at the WWDC. Now I feel like waiting more. Am I wrong?

Maybe I’m missing something, but am I the only person who has no desire or need to run iOS apps on my Mac? I can see why developers need to do this - but the average user? Not even games would I want to run in that small iPhone window on my Mac!

I wonder what the Macs are going to cost, since they will continue to make some with Intel chips?

I just recently replaced my 2013 13" MBP with a 2020 13" MBP pretty much maxed out. To tell you the truth, I’m feeling pretty good about that right now. The design is sufficiently mature (thanks to going back to the old KB). I would prefer no TouchBar, but whadyagonnado? This thing will hold me over until at the very least the 2nd gen AS MBP. And if indeed the new AS MBP allows for 15 hr battery life, far superior performance over my quad-core i7, and is rock stable, I’ll just put this puppy on eBay and enjoy transitioning to the brave new world early. :)

I’m in the same boat. I just went over all my iPhone apps. I didn’t find a single one I’d like on my Mac instead of what I’m using there. In fact, I’d probably prefer Mac apps on my iPhone (apart from too small screen and no KB/mouse). Notes and Maps would have been obvious candidates but we already had those on Mac and with iCloud sync long before. Wallet maybe? Well TBH, I guess I do prefer iPhone’s TV app over Catalina’s.

The statement that there would be Intel support for some time doesn’t necessarily mean they will still make Intel Macs.

I think it’s more the point that a developer can issue a binary that can run on the Mac or the iPhone. Rosetta2 and Universal2 should mean that an appropriately compiled iPhone app can run on your Intel Mac too.

I’m a very happy owner of a 2019 iMac and I foresee a good five years of life in it yet. But if I was looking at an upgrade from my old 2013 MBPro I’d be waiting to see how quickly the new ARM series Macs arrive and what form factor they take.

Then again my iPad Air 3 and the Combo Touch have meant that MBPro has been left on the side for quite some time now. My next laptop may well be just a better iPad. It really looked yesterday like the day we had wondered about actually arrived, macOS and iOS looking like variants rather than alternatives.

I have quite a few iOS apps that I’d love to run on my Mac. They’re mostly things that are accessed via the browser on a Mac, but have an app for iOS (Flightradar24, Marine Traffic, etc), or just aren’t coming to the Mac (games, mostly).

I’ll be taking the wait and see approach this time around. I have some significant software costs in order to simply get off of High Sierra so I’m simply going to stay where I am until I can no longer. At which point I’ll probably just change my current systems to Windows 10. While not as nice, there is a zero cost to doing that so barring a hardware failure I’ll wait and see from there.

I don’t see the point of spending now just to get Catalina/Big Sur software compatibility, only to have to do it again for an ARM-based system a short time later. Sure, there’s always the subscription route, but why spend on that purely for software compatibility? Meanwhile, it can take a number of years to get fully ARM-native apps. While ARM processors may offer huge gains in and of themselves, it’s highly possible those gains won’t show up immediately in actual performance.

So I’m going to see what Apple ends up with rather than holding their hands through it, funding both them and developers, as in the previous 3 architecture changes. I’m happy to pay for products that realise the benefits… not for products that merely promise them.

I also have a few iOS apps that would be great on Mac. Overcast is one (though I don’t really listen to podcasts on Mac, that might be because there is no Mac app for Overcast.) Also DarkSky, which I like more than the web site, and the Twitterrific app for iOS is better than their Mac app. Others are the reader apps for The New Yorker and for the Boston Globe. I’m fine using those on the iPad, but it would be good to have Mac versions.

Of course the developers could do that with Catalyst for Intel Macs as well.

If I remember correctly, Rosetta was licensed from Transitive Corporation. When IBM bought Transitive, Apple was unable to continue licensing it for new Mac OS X releases.

Hopefully, Apple learned its lesson and will make sure to own their tech this time, either by developing it in-house, by acquisition, or with a source-code license that allows them to continue development independently.

This is my concern as well. I’m currently using some pretty old equipment (2011-era Macs running Sierra). I had planned to upgrade them this fall. If I get a last-generation Intel box, I know I can get compatible versions of my critical apps (FileMaker, MS Office, Photoshop Elements, SilverFast, a few others), but that computer is probably going to end up being unsupported much sooner than is typical for Apple hardware.

If I get a first-generation ARM box, there will probably be good hardware support, but I doubt any of my critical apps will have ARM versions available at that time. I’m going to have to wait and see how many are compatible with Rosetta 2.

Or (and maybe this is best) I could just stick with what I’ve got for another year or two. My only concern here is that the Mac mini has hard drives in it and they’re getting old, but I could install a 2TB SSD without spending a lot of money.

Apple just started selling a $6000 Mac Pro without a monitor and I find it hard to believe that Apple will stop providing it with updates within a decent lifetime cycle. Plus, of course, Apple is planning new Intel Macs over the coming years. They said they’ll support Intel Macs for a long time; I guess we’ll see what happens.

There’s a couple iPhone apps for which on the Mac side I actually prefer using the browser like FlightRadar and DarkSky. The former is so painfully naggy about in-app purchases, I’ve started to use the browser even on iOS. The latter has a perfectly nice website. And chances are once their data becomes the engine behind Apple’s weather app I won’t be needing it anymore anyway.

That said, I’m usually no fan at all of using a browser instead of a proper app. To my disbelief many of my colleagues actually use mail.google.com instead of a real email client. But for simple tasks like checking brief data (airliners, weather) a browser totally does it for me.

IMHO this is a no-brainer. An SSD will make the whole system feel like a new computer. It’s rare that such an inexpensive upgrade has so drastic consequences. You won’t be spending much and if the mini holds over fine, that way you’d be good to wait for 2nd gen AS Macs. If you still want to migrate early, that mini can continue to serve well (and be useful, unlike a HD version) or if you really don’t want it anymore, just remove that SSD and use it as a nice fast external storage. SATA-to-USB-C adapters/cases are inexpensive and work just like any other external SSD.

I think there is some confusion over this after yesterday’s presentation.

What I took home was that Apple’s first AS Mac will come out by end of 2020. And the last Mac to transition to AS would be within two years, so by end of 2022. Apple has stated they will support Intel Macs for many more years (5? 10?), but that doesn’t mean they’ll be producing Intel Macs for nearly as long. In fact, quite a few publications have interpreted Apple’s statements as essentially saying no Intel Macs will come out from 2023 onward. Support means macOS runs on them and maintenance, not new hardware releases.

Anybody with a good source please correct me if that’s wrong.

Apple said two things: the Apple silicon transition would be complete in about two years, and that they still have Intel Macs in the pipeline (which I interpret to be short-term, maybe through this time next year).

See https://www.apple.com/newsroom/2020/06/apple-announces-mac-transition-to-apple-silicon/

I would agree if the computer was still supported, but it is a vintage model (nearing “obsolete”). The most recent macOS that can run on it is High Sierra (which I won’t upgrade to because I consider it unstable). It’s probably not a good idea to keep using this computer regardless of what I do with its storage.

I wouldn’t be too concerned about buying an Intel Mac right now if it suits you and your anticipated needs. Apple will easily support the thing for 3+ years.

I’m pretty happy having just bought what was probably the last Intel 13" MBP. This thing will easily get me to the 2nd gen AS MBP which is probably prudent considering Apple’s gen-1 record. And if indeed the AS MBPs are so unbelievably awesome I want to upgrade immediately I’ll just do so and put up this Mac on eBay. Sure its resale value might not be great, but that will easily be outweighed if these new AS Macs are so great. And if not, well this thing will hold over just fine until they’re up to snuff (hopefully).

My guess is that we won’t see any new Intel versions of MacBook Airs or MacBook Pros, as they were just significantly upgraded this year. The current Mac Pro was introduced in December 2019, so I think that it’s a long shot for another Intel version. The most probable machines to get a final Intel upgrade are the Minis and the iMac family, including the iMac Pro.

After that, it’s Apple silicon all the way. As I mentioned before, I’d be leery of making a long term investment in a first generation Apple silicon machine. The history of major transitions has shown that the first generation usually has some significant flaw that is usually corrected quite quickly in the 2nd or 3rd generation.

From a screenshot during the keynote, it seems that the demo machine was most likely an Apple Developer Transition Kit machine, which means a Mac mini with the A12Z chip in it. You may have been fooled (I was too, briefly) by the use of the Pro Display XDR, but a Mac mini can run it just fine.

True, and while I fall into the category of people who would probably have a couple of iPhone apps I’d run on my Mac, my guess is that’s mostly just a small win for most TidBITS readers. And if you see a big need for an iPhone or iPad app, then wait a little.

I agree. My personal sweet spot is the second iteration, and if there are three base configurations (which Apple almost always does), the middle ground. That has always worked out well for my needs.

In my case, replacement is critical. I’m producing a 30 minute program every week, and most of the material comes on Friday and Saturday. I have to have everything uploaded by Saturday midnight. I can’t imagine how brutally inadequate my late-2012 iMac (2.9 GHz core i5, 24 GB RAM, NVIDIA GTX 660M) would be if I hadn’t transplanted an SSD into it almost 4 years ago, or hadn’t recently added a 6TB Thunderbay RAID back in March when this new reality began.

As it is, and as it has always been, transcoding is the giant constraint. But I also endure abrupt crashes, usually just FCP but sometimes the entire system, which I think is the software outgrowing the hardware.

So the plan is to have this machine available for legacy software like Adobe CS6, which runs fine on 10.13, and for distributed transcoding using Compressor. But I’m unexpectedly back in a position where my needs are production needs, and in this case time = sleep.

Thanks @aforkosh!

I had heard this has as much to do with supply constraints because of the COVID-19 situation as anything else. Respecting the marketing need to avoid cannibalizing current sales in anticipation of an impending release…it’s still very frustrating to be in the dark about imminent sales plans.

To go ahead with my plan, I’ll have to make the emotional choice to ignore any subsequent announcements from Apple. And that’s true even though they will use their legendary persuasive skills to make the machines they’re replacing sound like absolute junk and yesterday’s news—even though today they’re the hottest and fastest things going.

Every day I wait means another day of working with a 7 year old iMac that does many things perfectly well but can’t keep up with my current requirements.

I wonder if that really matters. I can’t think of a single xOS app that I’d want to run on a Mac. I’m sure for some readers they may have one or two. But I’ve always used my Mac to create and feed content to my iPad or my iPhone, as Steve Jobs intended.

Yep, you’re probably correct, Adam. Since the DTK uses a Mac mini, maybe the first AS Macs coming out by the end of 2020 will be Mac minis. I didn’t know the Pro Display XDR could be run by a Mac mini.

I’ll bet they originally had at least one ARM Mac planned to debut at the WWDC. Apple’s whole hardware supply chain has been thrown up in the air for over six months. Sourcing, manufacturing, delivery and sales channels are going to be screwed up for some time. This probably upended all their plans for delivery of new hardware, and probably the best they could do was to get enough of the ARM Mac Minis into the hands of developers. And they need developers to build new software and optimize existing apps to run on ARM chips.

But Apple does need to get some new Macs out the door in the next few months so their sales results don’t look too awful. So they might have to throw an Intel Mac in there, but I doubt it.

It would also not augur well for the newly released Mac Pro line if they transitioned to 100% ARM in the next 2-3 years. I think it’s one of the reasons they made such a big deal about everything Intel will work beautifully on ARMs going forward.

BTW, I wonder if the new Minis will be rolled out to the general public in the next few months. My guess is this might have been in their original plans.

This is 100% true, and my suspicion about the new Mac Pro is that the previous model was so long in the tooth that Apple needed to get something out to a highly specialized and competitive market before Windows hardware manufacturers started making headway with Apple’s highest-end Mac customers. Consider the ultra-high-end graphics software developers, who all charge their customers per seat per year: Flame ($4,425), Smoke ($1,620), AutoCAD ($1,690), Revit ($2,425), NukeX ($9,289), Katana ($9,458), Hiero ($3,038), Mari ($2,198). With developers like these, Apple is playing in a different league than the WWDC market, one that won’t be willing or able to rewrite its software so quickly.

The WWDC talk on Apple Silicon confirmed that Rosetta 2 won’t run virtualization software, so presumably an ARM Mac can’t run Intel VMware for Intel Windows. So I can’t switch until Windows Quicken runs on ARM Windows, or Mac Quicken finally gets its act together, neither of which seems imminent. No great loss in my case, as my Macs are rarely CPU-bound, and the only iOS app I’d really like to run on a Mac (the talking Shorter Oxford English Dictionary) is 32-bit.

I often use my laptop computer …wait for it… on my lap! It is disruptive and even a little dangerous to dig the phone out of my pants pocket while using my computer. It was a big advance for me to be able to view and respond to text messages without needing the phone. There remain a number of IoT apps (for my car, security cameras, solar energy/backup battery system, landscape watering control) that would be much more conveniently accessed from my laptop.

I’ve been able to view and respond to text messages directly on my Mac for some time with the Mac’s own Messages app…!

You can also accept calls on your Mac. In the phone, turn on Cellular -> Calls on Other Devices. Once this is done, any device signed in to your iCloud account will ring when your phone rings (and is nearby on the same Wi-Fi network), allowing you to accept the call via the FaceTime app.

I’m afraid this was to be expected. We’ll need actual emulation instead of just virtualization to do x86 Windows on Macs. Linux too if it’s for x86. I suppose the Debian Apple demo’ed was already compiled for ARM and that’s why it ran in a VM.

Remember the glory days of VirtualPC? Yeah, welcome back. ;)

I think it is bizarre that Apple should promise new Intel based models to be released later this year when it has already effectively declared that they are on an architecture which it is leaving behind. I feel for people who spend a large amount of money buying a machine that is obsolete before it is even put on sale.

It will be interesting to see how long Intel support lasts. On the switch away from PowerPC, developers fairly quickly started to deliver “Intel only” applications, which meant that they didn’t need to support two versions. So it isn’t just down to how long Apple has OS support for the Intel architecture.

My other worry is that, while Apple may well feel it can produce faster silicon than Intel right now, AMD and Intel push each other forward. In 5 years time will Apple still be faster? And on the graphics front, can Apple beat both Nvidia and AMD?

All that said, it will be interesting to see Apple’s pricing on the new machines relative to performance. Will they just swallow the Intel mark-up and keep prices at the same level (I’m suspecting they will)? Will they be able to consistently move forward the high-end processors on the Apple Silicon architecture when it is a very small market?

I feel that macOS laptops may increasingly struggle to differentiate themselves from the iPad Pro given the new Magic Keyboard cases. And to what extent will developers make the extra effort to produce Catalyst versions of their apps which are then still only semi-macOS native when they can just tell ARM Mac owners to run the iPad app on their desktop?

I get the excitement over the move, but there are still a lot of unanswered questions.

I share that sentiment even though I have no worries about the Intel MBP I just purchased — it will easily last me long enough to await the 2nd gen AS MBP.

Apple could have simply spec bumped the Mac mini and iMacs around the same time it did so for the 13" MBP. The new MP is recent enough that a refresh could have waited until well into 2021 (assuming AS on the MP level would be ready by then). That way they would have only shipped new designs based on AS after the transition announcement.

It will be interesting to see how many Intel iMacs and Mac minis they actually sell now that the cat is out of the bag. Apple of course hides individual sales figures but there are always industry estimates. If Intel sales indeed tank, we’ll hear about it. The silver lining there would be that such a slump would certainly light a fire under Apple. Now that they’ve loudmouthed to the entire world about how great their silicon is and how slow Intel progress was, they have plenty to deliver on.

One wrinkle that surprised me a bit is the hardware specs of the box that developers can “borrow” for $500 for a few months. It fits in a Mini case, but there are differences besides the processor. The elephant in THAT room is the expansion ports. The analogous currently shipping Mini has 4 TB3 ports and 2 USB-A (USB 3) ports. The “preview” box has 2 USB-C ports and NO Thunderbolt 3 ports. I don’t get that.

TB support is delivered through Intel’s chipset. Most likely AS support is just not quite ready yet. Keep in mind Axx CPUs previously interfaced to USB-C (iPad Pro) but never before to TB. I have little doubt it will be there by commercial release. Also, USB4 is around the corner and that is effectively a rebranded TB3 so maybe AS Macs will simply ship with that. The connector is USB-C and it is backwards compatible.

A very good point on USB4. Apple will need to replace more than just processors!

I’m also fully in agreement on avoiding the first versions of new Apple kit. I remember all too well the fate of the Mac Pro 1,1 which turned out not to be fully 64-bit and therefore was dropped like a stone by Apple well before its successors. The upside of having invested in Intel kit is that it might tide us through until any initial bumps are ironed out. And we do still have the ability to run Boot Camp and virtualised versions of Windows, which it seems may not be possible on the ARM machines (or, if done at all, may be more akin to the old VirtualPC in performance terms than what Parallels offers on an Intel machine).

That’s because it’s not a preview of anything. It’s a development kit designed to get app developers up and running as soon as possible. Just like the developer transition kit Apple loaned out in 2005 to support the transition from PowerPC to Intel.

The 2005 DTK was nothing like any Mac that ever shipped. It was, for all intents and purposes, a PC motherboard bolted into a PowerMac case, running a hacked-up version of the operating system that couldn’t run on anything else.

The kit Apple is loaning out today is almost certainly the same deal. Something that can host the pre-release software development/test environment, but otherwise completely unrelated to anything that will ship commercially.

Intel supports TB in the CPU only for the latest (10th generation) processors. For everybody else, the interface chips are PCIe devices.

While Apple might integrate TB3 into the Axxx SoC package, it is equally likely that they will ship a SoC with some number of PCIe lanes to drive on-board devices (like Thunderbolt ports, NVMe storage, etc.) On the Mac Pro, they will also connect to expansion slots.

In addition to simplifying the SoC’s design somewhat, it also allows them to leverage existing motherboard designs which use PCIe lanes for all kinds of on-board devices.

I absolutely agree with that. The point I was trying to make is that while Apple had already interfaced Axx CPUs to USB-C, they have not so far done that with TB. And as I already said, I don’t doubt they’ll get it ready by release of the first AS Macs. For now, there’s really no rush because the DTK didn’t need it for its mission.

Ugh. If Apple wants to do both developers and users a favor, why not FREEZE the MacOS for Intel processors where it is? Get all the bugs out and let developers catch up, then stop changing the OS every six months! Developers won’t have to maintain two versions, and a lot of users will be happy to keep using the apps that are working well on their Intel machines (without worrying about what happens next). When it’s really time to upgrade they can get a new Mac with Apple silicon, or jump ship.

Ok, now let’s see if Apple will release a similar Mac mini with the 16GB RAM and 512GB SSD for $500.00 like they are doing for the developers.

That’s a rental machine—you have to give it back at the end of the program. So don’t assume that $500 has any connection with the price of the hardware.

Apparently Microsoft will have to change its licensing for Windows 10 for ARM for people to be able to use it for virtualization. We’ll see what happens.

The initial Mac mini was $499 (in 2005, today that would be $674) so it’s not that absurd. That said, you’re entirely correct, Adam. The DTK is a rental.

For comparison, a Core i3 mini with 16/512 is $1200 or $1500 if you choose a six-core i7. Makes this rental sound rather expensive actually, but of course as a dev box that’s beside the point.

This got me thinking about 2FA. If we’ll be able to use App Store apps on our Macs, won’t that at least to some degree defeat the purpose of apps like Google’s Authenticator?

Will app developers be able to choose to not allow their app to run on Macs at all? I realize they can already select not to compile a binary for macOS, but that’s not quite the same thing.

The Pentium 4 PPC to Intel dev kit was a $999 rental. That makes this one look inexpensive.

Oh, that’s another iPhone/iPad app I’d love on my Mac. Why does it matter where the time based one time code app is run? I log in to apps and sites on my iPhone (and iPad) from the same device all of the time (though sometimes I use my watch). The resulting code is the same no matter the platform.

Only if you forget that it came as the Intel equivalent to a $3k PPC Power Mac. The ratios are similar though. Each rental box costs about a third of the retail price of the shipping Mac counterpart. Rather consistent of Apple. And I’m sure not a coincidence.

My work requires two separate devices be used for 2FA. It’s always either cell phone app for computer or web browser on computer for cell phone (VPN). The idea is that you require two separate hardware verifications to gain access. If an authenticator app were to run on my Mac, I could no longer use it to generate codes that I use to do 2FA on that same Mac. It’s not about codes being unique, it’s about codes being generated and verified on different devices. The idea is of course that while one device could be stolen or misappropriated, there’s much less chance that will happen on two separate devices simultaneously.

What do you mean by “defeat the purpose of apps like Google’s Authenticator”? Google Authenticator just generates TOTPs (time-based one-time passwords). If you use something that uses TOTP for 2FA, you’ll need something that can generate the codes, that won’t change on an ARM based Mac. And as there are a number of iOS and macOS apps that do so already (1Password for example), I don’t see ARM based Macs affecting this use much.

It doesn’t, at all. The code generated is based on the key you enter when you set it up (either by typing the key or scanning a QR code), and the number of 30-second intervals since midnight UTC on January 1, 1970 (or other values the server and client agree on; those are the defaults). The machine/device the code is generated on doesn’t come into play.
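For anyone curious, here is a minimal Python sketch of that calculation, assuming the common defaults from RFC 6238 (HMAC-SHA1, 30-second steps). The function name `totp` and the demo secret are just for illustration; real authenticator apps usually store the key Base32-encoded and default to 6 digits.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """Derive a time-based one-time code from a shared secret and a timestamp."""
    # Number of whole 30-second intervals since the Unix epoch (Jan 1, 1970 UTC).
    counter = int(unix_time) // step
    # HMAC-SHA1 over the counter encoded as an 8-byte big-endian integer.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte window.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    # Keep the last `digits` decimal digits, left-padded with zeros.
    return str(value % 10 ** digits).zfill(digits)


# Published RFC 6238 test secret; 8 digits to match the RFC's test table.
demo_secret = b"12345678901234567890"
print(totp(demo_secret, 59, digits=8))  # RFC 6238 test vector: 94287082
```

Nothing in the inputs identifies the device: any phone, Mac, or watch holding the same key and a reasonably accurate clock produces the same code.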

An important point that was revealed in the SOTU Developer video hours after the Keynote announcements: Apple is designing an entire line of ARM-based SoCs that will specifically target each Mac’s unique needs and constraints. Those would be physical space, power/energy consumption, and cooling solutions.

The A12Z in the Developer Kit (custom Mac mini) is NOT the production Apple Silicon SoC that will be going into future Macs. In fact, these Developer Kit Mac minis with the A12Z SoC are in limited supply; you have to apply and qualify for them, and you eventually need to return the device to Apple when the program finishes. The A12Z is still a mobile device processor, and while it performs remarkably well, there will likely be a large difference in the Mac SoC designs. Yes, they will inherit all the same technology, but they will be designed for significantly more energy use and performance while still delivering massive gains in performance per watt for a laptop/desktop processor.

The demos seen during the Keynote and the SOTU were ALL running on the A12Z, which is the same SoC as in the 2020 iPad Pro but fitted into a custom Mac mini case. It alone is impressive, but just wait till you see the real deal in the first few Macs. This is a very exciting time.

If you see benchmarks published from this A12Z, take them with a grain of salt, because it’s not what will be shipping in Macs. It’s merely to give developers something to test with to become familiar with the new way of things.

Actually, benchmarking may be difficult at first. Most of the benchmarks are designed for x86-64. Apple is working with Blender.org directly, and Blender is used in benchmarking tests of CPU cores and GPUs.

Yes, since Apple said that developers can opt-out of having their iPhone and iPad apps included in the Mac App Store. I doubt most will, but it’s possible.

As others have said, it doesn’t in general, though some systems may require it. I use Authy, because Google Authenticator loses all its codes in system upgrades, and Authy Desktop lets me access my codes on the Mac so I don’t have to pull out my iPhone and type them in. It’s still a little fussy, but easier. And I’m not too worried about the security of my Mac due to its physical location.

And then you got a “free” 17” Intel iMac at the end of the DTK programme. Mine is still in use elsewhere in the family, I think it is the longest lasting Mac that I ever got.

Looking at prices and specs, surely it’ll only be a few years before users ask why they can’t plug their USB-C iPhone/iPads into a screen, and use it as a low end Mac mini.

Already exists. Samsung DeX. It probably sucks, and Apple’s implementation will hopefully be better.

Knowing Apple, they’ll remove the iPhone lightning port to avoid such considerations.

That will definitely be a concern a few years after the last Intel-based Mac ships. I think these days Apple’s Xcode is much more dominant for developing software for the Mac and through it Apple can at least encourage companies to keep their binaries “fat.” Another point of influence is Apple could require all Mac App Store applications be ARM/Intel; the store could also deliver to each Mac just the code compiled for that hardware.

No, and I doubt they’d try. Their competition will be Intel graphics, which they can probably already beat. For whatever reason, Apple and Nvidia have parted ways so they’ll need to work with AMD to make Radeon cards work on non-Intel Macs to satisfy the businesses that buy Mac Pros for GPU work.

The problems with Nvidia were very serious, and Apple was dealing with repairs for MacBooks for years. And for some time Nvidia refused to admit their cards were at fault:

https://support.apple.com/en-us/HT203254

In addition to faulty processors, there were supply chain issues that had big impact on Apple’s product delivery:

I wonder if sometime in the future Apple will start developing their own graphics cards?

I agree. Nothing indicates so far they’re trying to replace dedicated desktop-class GPUs similar to what Nvidia or AMD produce.

Considering the competitive advantage Nvidia has been displaying for the last couple of GPU generations, it’s high time for Apple to get real here and put petty vendettas behind it. Nvidia offers something nobody else can match. It’s time Apple worked to make that accessible to their professional customers who don’t care about bygones. Restricting yourself to a single GPU supplier is silly. It essentially puts them in the same boat with AMD that they just got out of with Intel.

A petty vendetta? Apple gets hit with a very, very major class action lawsuit because Nvidia refused to own up to the fact that Nvidia chips were bricking MacBook Pros? This literally cost Apple many years of ongoing bad publicity:

Apple had to take Nvidia to court to force them to admit to the flaws and help Apple pay for repairs. Nvidia had to fork over $148.6 million for repairs that stretched over about 6-7 years:

And Apple missed the announced delivery date for its brand new 30 inch Cinema Displays by many months because Nvidia couldn’t deliver the chips on schedule. I linked to this in a previous post.

Then Nvidia yanked Apple into a patent lawsuit with Intel, which Apple had no intention of participating in:

Clearly, this is not “a petty vendetta.” It’s been a smart business move.

LOL. Good one. The only people who can afford that opinion are those who professionally haven’t had to rely on high-performance frameworks such as CUDA. Or those who can afford to wait twice as long for their GPU to return results.

I think anyone who needs CUDA has already gone PC.

The vast majority of Mac graphics/video/animation users are happy with the alternatives, the overall ecosystem is more important to them.

I’m afraid that’s exactly the point, Tommy. Some Apple fans tend to have this myopic view that GPUs are about FCP. Scientific computing has zero interest in FCP, but it has been heavily involved with CUDA. Apple has lost likely around $200k from my department alone just because of their AMD single-sourcing folly. Apple used to care about scientific computing, now they seem to believe they can do well without. Maybe, maybe not. Regardless, tying yourself to a sole GPU supplier just makes no sense, even if Apple believes the only pros that matter are video studios.

It’s not my field but I thought the performance advantages of CUDA over OpenCL had been overstated. The remaining problem being CUDA having a robust ecosystem of software libraries so you don’t have to write nearly so much yourself.

Or the cloud.

That’s been my experience, Curtis. You could attempt to write OpenCL code yourself and if you were really interested you might be able to make it perform almost as well.

People in the field I work in are for the most part not coders. We have scientific problems we are attempting to solve and our interest lies in the results, not the tools to get there. So we tend to use what’s already available, especially if that stuff is really good. If there are highly efficient CUDA libraries around or if we have ML tools nicely tailored to a small farm of Teslas that’s what we’ll want to use.

Could it be done in OpenCL or on AMD? Probably. Will people go the extra mile? No. Even before, we did lots of computation on clusters, but there was always a fair amount of ‘local’ work, primarily testing and benchmarking. I’d say about half of my colleagues have now migrated to doing all of that on Linux boxes simply because Apple has been being dicky about GPU support. Especially those doing lots of ML. My corner of UC Berkeley is becoming quite a Google shop these days so common tools like Word or Keynote have effectively been replaced by Google Docs anyway and they run in the same Chrome browser on any platform. These people have less and less reason to stay with Macs. Personally, I really like Linux and spend a lot of time using Linux tools, but I would definitely prefer I could do it all on my Mac rather than having to run several boxes. That’s probably niche. But of course so are those mixing rap music videos on $40,000 workstations that require $1000 wheel kits. ;)

I mentioned this once before, my cousin is an astrophysicist who has been working with NASA for decades and is also a professor at a major university. He loves Macs and Apple products as much as I do, and he’s always said how much they love Macs at NASA, and how the Curiosity Mars Rover was basically a G3 9600 Mac:

A radiologist friend of the family runs most of his practice on Macs, uses this software that he says is popular around the world:

An Optometrist neighbor uses this, and the company makes Mac software for other medical specialties:

A speech pathologist friend who is involved in research always talks about how important iPads and Macs are in the field.

Apple’s Research Kit and Care Kit for iOS and Macs are making headway in the medical and healthcare studies, as well as in communication between providers and patients:

There are many scientific fields that depend on visualization, rapid rendering and communication, as well as other fields that do not use CUDA.

Honestly thought CUDA was an old switch/button on the Mac motherboard.

That, too!

As could be expected, first benchmarks of the DTK have come out. It appears Rosetta only exposes 4 ARM cores (the performance cores?). Geekbench says on average 811 and 2,871 and that’s emulated x86!

Here’s a list of updated benchmarks.

My iMac has nothing to fear…

I too hope the support for Intel-based Macs lasts at least five years. I just replaced my 2013 MacBook Pro with a new 2019 MacBook Pro. I would like to get at least five years out of my recent purchase.

I’ve talked with developers, and they are all saying that running Intel based software on the A12Z chip with Rosetta 2 software runs almost as fast as a new MacBook Air. You must consider this:

So, running in emulation on a non-optimized emulator, on a chip that was not designed for a Mac and is a generation behind the current chip, is almost as fast as running on actual Intel hardware. That’s really impressive. Imagine the speed when running software compiled for ARM on a chip that’s designed to be used on a desktop machine.

When I first heard about Apple running Macs on this chip, it didn’t sound right. I figured that Apple was more likely making a desktop based iPad that would be less locked down than iPadOS.

When Apple went from the PowerPC to the Intel chip, the Mac platform was just renewed with OS X. The PowerPC chip couldn’t keep up with Intel and was at a dead end. Motorola wasn’t going to make a new generation of the PowerPC chip. Plus, running on an Intel chip would allow Windows and Linux virtualization — something extremely important in this world. It was a matter of survival for Apple to move from the PowerPC to Intel.

It is very different today. macOS is now over two decades old. Apple has a second generation platform in iOS/iPadOS running on ARM that is slowly moving into the desktop space. Intel’s x86 isn’t at a dead end. Intel is still working on the future generations of their chip, and even without the Mac, 90% of all computers will still use it. And moving the Mac to ARM may mean that developers who have used a Mac because they like the hardware, but need Windows emulation on an x86 chip, will no longer be able to use new Macs. That’s a significant chunk of the Mac market.

There is only one reason for Apple to go through this trouble and tumult: Apple expects the new ARM Macs to blow every other desktop computer out of the water. Apple expects these new machines to be so much faster and so much more efficient, and the developer beta machine is barely hinting at what we can expect.

Not exactly. Rather, it’s ARM software running on an ARM chip. It’s translated in advance. That means there’s no emulation overhead, but there may be an overhead anyway from inefficiencies in the translation. Plus there are certain instruction sequences that just won’t run if apps are using them directly (though if they use them indirectly via Apple’s frameworks they’ll work, and in any case the programs that use these are the most likely to be updated).

The rest of this is true, though, and I echo your reasoning: I expect the products that Apple ships will be much faster than the DTK.

I’m wondering if Windows VMs in Parallels will continue to work via Intel emulation.

But what I REALLY would love to see is MacOS running on iPad Pro HW, with the new Magic Keyboard & trackpad, and the Apple Pencil. That way I could travel with just an iPad.

Apple says no. In fact that is one of only two exceptions Apple points out (the other being kexts).

In principle that might be possible since there will be a common instruction set. But Apple would have to allow for it which I highly doubt. Mac apps (including the entire OS) will have been designed with far superior performance in mind than what an iPad usually offers. Case in point: the current DTK is based on roughly current iPad Pro performance level, yet nobody believes that retail arm64 Macs will perform at this level. Far from it actually, at least according to those championing this transition from x86 to arm64.

It might be possible in theory, but very very unlikely, and for perfectly good technical reasons.

Rosetta works by “compiling” x86 code into ARM code. It is designed to translate all of the instructions that are likely to be used by applications. But it doesn’t translate all of them. Apple has, for example, said that not all of Intel’s latest vector math instructions will be supported.
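To make the "translate once, then run natively" idea concrete, here is a toy Python sketch. It is not how Rosetta is implemented; the two-instruction "guest" language, the `translate` and `run` helpers, and the `UnsupportedInstruction` error are all invented for illustration. The point is only that translation happens up front, and that an instruction the translator doesn't know about fails at that stage.

```python
from typing import Callable, Dict, List, Optional, Tuple

Regs = Dict[str, int]
HostOp = Callable[[Regs], None]
Instr = Tuple[str, ...]  # hypothetical guest instruction, e.g. ("addi", "r0", "5")


class UnsupportedInstruction(Exception):
    """Raised when the toy translator has no host equivalent for an instruction."""


def _addi(reg: str, imm: int) -> HostOp:
    def op(regs: Regs) -> None:
        regs[reg] = regs.get(reg, 0) + imm
    return op


def _mul(dst: str, src: str) -> HostOp:
    def op(regs: Regs) -> None:
        regs[dst] = regs.get(dst, 0) * regs.get(src, 0)
    return op


# Table of guest instructions this toy translator knows how to convert.
TRANSLATORS: Dict[str, Callable[[List[str]], HostOp]] = {
    "addi": lambda args: _addi(args[0], int(args[1])),
    "mul": lambda args: _mul(args[0], args[1]),
}


def translate(program: List[Instr]) -> List[HostOp]:
    """Convert the whole guest program to host operations before running it."""
    host_ops = []
    for name, *args in program:
        if name not in TRANSLATORS:
            raise UnsupportedInstruction(name)
        host_ops.append(TRANSLATORS[name](args))
    return host_ops


def run(host_ops: List[HostOp], regs: Optional[Regs] = None) -> Regs:
    """Execute already-translated code; no per-instruction decoding happens here."""
    state = dict(regs or {})
    for op in host_ops:
        op(state)
    return state


program = [("addi", "r0", "2"), ("addi", "r1", "3"), ("mul", "r0", "r1")]
translated = translate(program)   # done once, ahead of time
print(run(translated))            # {'r0': 6, 'r1': 3}
```

Feeding it something outside the table, say a made-up `("vfancy", "r0")`, raises `UnsupportedInstruction` during translation, which is loosely the situation with the newer vector instructions mentioned above.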

It is also highly unlikely that they will translate privileged instructions and registers that are only used by operating system kernels. For example, those used to manage virtual memory page tables and direct access to PCIe buses.

All hypervisors work by intercepting access to privileged instructions and registers, redirecting them to manipulate virtualized versions of the corresponding hardware.
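A toy Python sketch of that trap-and-redirect pattern follows. Everything in it (the `ToyHypervisor` class, the instruction names, the single "page table base" register) is made up for illustration; a real hypervisor relies on CPU hardware to raise the trap rather than a lookup in a set.

```python
from typing import Dict, Optional


class ToyHypervisor:
    """Toy trap-and-emulate loop; the instruction names are hypothetical."""

    PRIVILEGED = {"write_page_table_base", "read_page_table_base"}

    def __init__(self) -> None:
        # Each guest gets its own virtual copy of the privileged register.
        self.virtual_regs: Dict[str, int] = {}
        self.trap_count = 0

    def execute(self, guest: str, instr: str, operand: Optional[int] = None) -> Optional[int]:
        if instr in self.PRIVILEGED:
            return self._trap(guest, instr, operand)
        # Unprivileged instructions would run directly on the CPU; nothing to emulate.
        return None

    def _trap(self, guest: str, instr: str, operand: Optional[int]) -> Optional[int]:
        self.trap_count += 1
        if instr == "write_page_table_base":
            if operand is None:
                raise ValueError("write needs an operand")
            # Redirect the write to the guest's virtual register, not the real one.
            self.virtual_regs[guest] = operand
            return None
        # Reads see only the guest's own virtual value (0 if never written).
        return self.virtual_regs.get(guest, 0)


hv = ToyHypervisor()
hv.execute("guest-a", "write_page_table_base", 0x1000)
hv.execute("guest-b", "write_page_table_base", 0x2000)
hv.execute("guest-a", "add")  # unprivileged, no trap
print(hv.execute("guest-a", "read_page_table_base"))  # 4096
```

Each guest sees only its own value, and the real hardware state is never touched by guest code.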

In a Rosetta environment, those instructions will have to be compiled into the ARM-equivalent privileged instructions/registers. Then the hypervisor’s code to trap the illegal accesses must be compiled into ARM code that can trap the translated privileged instructions. That’s a pretty ugly bit of code. It could certainly be implemented, but that’s a big engineering effort for something that is literally only useful for implementing x86-based VMs.

Any project for running a non-Mac x86 OS software will probably involve emulating the x86 platform (e.g. via QEMU). Fortunately, it should be possible to develop such a package (e.g. porting QEMU) without Apple providing explicit support for it.

I wonder if there will be a port of DosBox for ARM-based Macs. I saw a forum thread from 2006 where someone was working on developing an x86-ARM compiler module for portability to tablets and phones, so I’m hopeful that there are people with the skills and desire to develop this port.

But those issues pertain to running x86 apps through Rosetta 2.

If however you just take vanilla Big Sur along with arm64-compiled Mac apps all that should in principle run on an iPad. My point was that just because it can be done does not necessarily mean Apple would want it to be done, especially not if performance is bad and hence the entire user experience suffers.

I’m sorry, I got a bit turned around. There are two interlacing threads here - one about running Windows VMs (which I was talking about) and the other being ARM macOS on iOS devices.

The latter, as you point out, is theoretically possible, but it would require the installation of a VM or container environment on the iOS device. This kind of software has never been permitted on the iOS platforms in the past, and I wouldn’t expect it today.

Indeed. These are important caveats, and they were the same in the transition from PowerPC to Intel (although there we were going from VirtualPC to real PC!)

My day job involves a lot of JIT (Java compilation and execution) and running, testing and debugging Linux x86 execution environments under Docker and VirtualBox.

Realistically, today, there’s no way we can avoid x86 (Java code does not run in a vacuum, and some of our third party tooling is currently x86 specific. While that may change, and AWS have just started offering ARM servers, Linux x86-64 servers seem destined to be with us a while yet)

Mac will remain my own personal choice but I shall greatly miss being able to work in a MacOS environment in my day job, as I’ve enjoyed for the last 10 years, once the x86-64 Apple hardware is obsolete.

Intel has announced it won’t be able to produce 7nm chips until 2021 or 2022.

Apple’s move to its own silicon is seeming more and more prescient. Unless Apple knew ahead of time that this was coming.

I doubt that they “knew”, but I’m sure they suspected.

Apple silicon (fabbed by TSMC) started using 7nm in the A12 (September 2018) and 10nm in the A10X (June 2017). The fact that Intel hasn’t been able to keep up so far is enough to make one wonder about their abilities going forward.

And as a close Intel partner and good customer (so far at least) Apple was certainly privy to more information than the general public, and definitely at an earlier stage.

Now we just need to see first Apple Silicon perform thrice as well at half the wattage in shipping Macs and we should be good to go for the next few years.

Intel seems to be trying to correct the problem using the stereotypical corporate solution: Reorganization.

The next few months should show if this was a good solution or just another Dilbertism.

Interesting.

I do feel compelled to quote this little gem of corporate newspeak.

I’d be tempted to ask a friendly fellow poster to translate that to common English, but I’m afraid we all know where that would end up.

In a few years, there may be almost no difference between a Mac and an iPad. Both will run the same OS and the difference (whether multi-window and menus Mac or whole screen and touch iPad) will be based on the size of your screen and your input devices.

I can see an iPad Pro where attaching a second large screen, a keyboard, and mouse will make the OS look like it was on a Mac.

Here’s another reason for Apple to move to its own custom chips—more control over security. This isn’t to say that Intel necessarily did anything horribly wrong, just that Mac users may be impacted by the Intel breach.

An interesting new plot twist to this story: