FAQ about Apple’s Expanded Protections for Children

Two privacy changes that Apple has designed to reduce harm to children will roll out to iCloud Photos and Messages in the iOS 15, iPadOS 15, and macOS 12 Monterey releases in the United States.

The first relates to preventing the transmission and possession of photos depicting the sexual abuse of minor children, formally known by the term Child Sexual Abuse Material (CSAM) and more commonly called “child pornography.” (Since children cannot consent, pornography is an inappropriate term to apply, except in certain legal contexts.) Before photos are synced to iCloud Photos from an iPhone or iPad, Apple will compare them against a local cryptographically obscured database of known CSAM.

The second gives parents the option to enable on-device machine-learning-based analysis of all incoming and outgoing images in Messages to identify those that appear sexual in nature. It requires Family Sharing and applies to children under 18. If enabled, kids receive a notification warning of the nature of the image, and they have to tap or click to see or send the image. Parents of children under 13 can additionally choose to get an alert if their child proceeds to send or receive “sensitive” images.

Apple will also update Siri and Search to recognize unsafe situations, provide contextual information, and intervene if users search for CSAM-related topics.

As is always the case with privacy and Apple, these changes are complicated and nuanced. Over the past few years, Apple has emphasized that our private information should remain securely under our control, whether that means messages, photos, or other data. Strong on-device encryption and strong end-to-end encryption for sending and receiving data have prevented both large-scale privacy breaches and the more casual intrusions into what we do, say, and see for advertising purposes.

Apple’s announcement headlined these changes as “Expanded Protections for Children.” That may be true, but it could easily be argued that Apple’s move jeopardizes its overall privacy position, despite the company’s past efforts to build in safeguards, provide age-appropriate insight for parents about younger children, and rebuff governments that have wanted Apple to break its end-to-end encryption and make iCloud less private to track down criminals (see “FBI Cracks Pensacola Shooter’s iPhone, Still Mad at Apple,” 19 May 2020).

You may have a lot of questions. We know we did. Based on our experience and the information Apple has made public, here are answers to some of what we think will be the most common ones. After a firestorm of confusion and complaints, Apple also released its own Expanded Protections for Children FAQ, which largely confirms our analysis and speculation.

Question: Why is Apple announcing these technologies now?

Answer: That’s the big question. Even though this deployment is only in the United States, our best guess is that the company has been under pressure from governments and law enforcement worldwide to participate more in government-led efforts to protect children.

Word has it that Apple, far from being the first company to implement such measures, is one of the last of the big tech firms to do so. Other large companies keep more data in the cloud, where it’s protected only by the company’s encryption keys, making it more readily accessible to analysis and warrants. Also, the engineering effort behind these technologies undoubtedly took years and cost many millions of dollars, so Apple’s motivation must have been significant.

The problem is that exploitation of children is a highly asymmetric problem in two different ways. First, a relatively small number of people engage in a fairly massive amount of CSAM trading and direct online predation. The FBI notes in a summary of CSAM abuses that several hundred thousand participants were identified across the best known peer-to-peer trading networks. That’s only part of the total, but it’s still a significant number. The University of New Hampshire’s Crimes Against Children Research Center found in its research that “1 in 25 youth in one year received an online sexual solicitation where the solicitor tried to make offline contact.” The Internet has been a boon for predators.

The other form of asymmetry is adult recognition of the problem. Most adults are aware that exploitation happens—both through distribution of images and direct contact—but few have personal experience or exposure themselves or through their children or family. That leads some to view the situation somewhat abstractly and academically. Those who are closer to the problem—personally or professionally—may see it as a horror that must be stamped out, no matter the means. Where any person comes down on how far tech companies can and should go to prevent exploitation of children likely depends on where they are on that spectrum of experience.

CSAM Detection

Q: How will Apple recognize CSAM in iCloud Photos?

A: Obviously, you can’t build a database of CSAM and distribute it to check against because that database would leak and re-victimize the children in it. Instead, CSAM-checking systems rely on abstracted fingerprints of images that have been vetted and assembled by the National Center for Missing and Exploited Children (NCMEC). The NCMEC is a non-profit organization with a quasi-governmental role that allows the group to work with material that is otherwise illegal to possess. It’s involved in tracking and identifying newly created CSAM, finding victims depicted in it, and eliminating the trading of existing images. (The technology applies only to images, not videos.)

Apple describes the CSAM recognition process in a white paper. Its method allows the company to take the NCMEC database of cryptographically generated fingerprints—called hashes—and store that on every iPhone and iPad. (Apple hasn’t said how large the database is; we hope it doesn’t take up a significant percentage of a device’s storage.) Apple generates hashes for images a user’s device wants to sync to iCloud Photos via a machine-learning algorithm called NeuralHash that extracts a complicated set of features from an image. This approach allows a fuzzy match against the NCMEC fingerprints instead of an exact pixel-by-pixel match—an exact match could be fooled by changes to an image’s format, size, or color. Howard Oakley has a more technical explanation of how this works.
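To make the difference between a fuzzy fingerprint and an exact one more concrete, here is a minimal Python sketch. It is not NeuralHash, whose internals aren't covered here. Instead, it uses a far simpler and well-known technique called an average hash, and the tiny 4×4 grayscale "image" and all function names are made up for illustration. It shows only the general idea: a small change to an image alters an exact cryptographic hash while leaving a perceptual fingerprint untouched.

```python
import hashlib

def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the image mean."""
    flat = [v for row in pixels for v in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if v > mean else "0" for v in flat)

def sha256_hex(pixels):
    """Exact cryptographic hash of the raw pixel bytes."""
    return hashlib.sha256(bytes(v for row in pixels for v in row)).hexdigest()

def hamming(a, b):
    """Number of positions at which two equal-length bit strings differ."""
    return sum(x != y for x, y in zip(a, b))

# Hypothetical 4x4 grayscale image: dark left half, bright right half.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [18, 28, 208, 218],
]

# A slightly brightened copy, standing in for a color or format change.
brightened = [[min(255, v + 5) for v in row] for row in original]

# A copy with a single pixel nudged by one brightness level.
tweaked = [row[:] for row in original]
tweaked[0][0] += 1

for name, variant in (("brightened", brightened), ("tweaked", tweaked)):
    same_exact = sha256_hex(variant) == sha256_hex(original)
    distance = hamming(average_hash(variant), average_hash(original))
    print(f"{name}: exact hash identical = {same_exact}, perceptual distance = {distance}")
```

A real perceptual hash, NeuralHash included, works on far richer features than per-pixel brightness, so this sketch says nothing about how robust Apple's system is in practice.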

Apple passes the hashes through yet another series of cryptographic transformations that finish with a blinding secret that stays stored on Apple’s servers. This makes it effectively impossible to learn anything about the hashes of images in the database that will be stored on our devices.

Q: How is CSAM Detection related to iCloud Photos?

A: You would be forgiven if you wondered how this system is actually related to iCloud Photos. It isn’t—not exactly. Apple says it will only scan and check for CSAM matches on your iPhone and iPad for images that are queued for iCloud Photos syncing. A second part of the operation happens in the cloud based on what’s uploaded, as described next.

Images already stored in your iCloud accounts that were previously synced to iCloud Photos won’t be scanned. However, nothing in the system design would prevent all images on a device from being scanned. Nor is Apple somehow prohibited from later building a cloud-scanning image checker. As Ben Thompson of Stratechery pointed out, this is the difference between capability (Apple can’t scan) and policy (Apple won’t scan).

Apple may already be scanning photos in the cloud. Inc. magazine tech columnist Jason Aten pointed out that Apple’s global privacy director Jane Horvath said in a 2020 CES panel that Apple “was working on the ability.” MacRumors also reported her comments from the same panel: “Horvath also confirmed that Apple scans for child sexual abuse content uploaded to iCloud. ‘We are utilizing some technologies to help screen for child sexual abuse material,’ she said.” These efforts aren’t disclosed on Apple’s site, weren’t discussed this week, and haven’t been called out by electronic privacy advocates.

However, in its 2020 list of reports submitted by electronic service providers, NCMEC says that Apple submitted only 265 reports to its CyberTipline system (up from 205 in 2019), compared with 20,307,216 for Facebook, 546,704 for Google, and 96,776 for Microsoft. Apple is legally required to submit reports, so if it were scanning iCloud Photos, the number of its reports would certainly be much higher.

Q: How does Apple match images while ostensibly preserving privacy?

A: All images slated for upload to iCloud Photos are scanned, but matching occurs in a one-way process called private set intersection. As a result, the owner of a device never knows that a match occurred against a given image, and Apple can’t determine until a later stage if an image matched—and then only if there were multiple matches. This method also prevents someone from using an iPhone or iPad to test whether or not an image matches the database.

After scanning, the system generates a safety voucher that contains the hash produced for an image, along with a low-resolution version of the image. A voucher is uploaded for every image, preventing any party (the user, Apple, a government agency, a hacker, etc.) from using the presence or absence of a voucher as an indication of matches. Apple further seeds these uploads with a number of generated false positive matches to ensure that even it can’t create an accurate running tally of matches.

Apple says it can’t decrypt these safety vouchers unless the iCloud Photos account crosses a certain threshold for the quantity of CSAM items. This threshold secret sharing technology is supposed to reassure users that their images remain private unless they are actually actively trafficking in CSAM.

Apple encodes two layers of encryption into safety vouchers. The outer layer derives cryptographic information from the NeuralHash of the image generated on a user’s device. For the inner layer, Apple effectively breaks an on-device encryption key into a number of pieces. Each voucher contains a fragment. For Apple to decode safety vouchers, an undisclosed number of images must match CSAM fingerprints. For example, you might need 10 out of 1000 pieces of the key to decrypt the vouchers. (Technically, we should use the term secret instead of key, but it’s a bit easier to think of it as a key.)
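The "10 out of 1000 pieces" idea can be illustrated with textbook Shamir secret sharing. The Python sketch below is an assumption-laden illustration, not Apple's implementation: Apple hasn't disclosed its threshold, the 10 and 1000 come from the example above, and the prime, the secret value, and the function names are invented for the demonstration. The secret is hidden as the constant term of a random polynomial, each share is one point on that polynomial, and any 10 points are enough to recover it while 9 are not.

```python
import random

PRIME = 2**127 - 1  # a Mersenne prime; all arithmetic is done modulo this value

def make_shares(secret, threshold, count, rng):
    """Split `secret` into `count` shares, any `threshold` of which can rebuild it."""
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule evaluation at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares

def recover(shares):
    """Lagrange interpolation at x = 0 over the given shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

# Seeded generator so the demo is repeatable; a real system would need a
# cryptographically secure source of randomness instead.
rng = random.Random(0)
secret = 123456789  # hypothetical stand-in for the on-device secret
shares = make_shares(secret, threshold=10, count=1000, rng=rng)

any_ten = rng.sample(shares, 10)
print("10 shares recover the secret:", recover(any_ten) == secret)
print("9 shares recover the secret: ", recover(any_ten[:9]) == secret)
```

With fewer shares than the threshold, interpolation produces an unrelated number, which is the property that is supposed to keep vouchers unreadable until enough matches accumulate.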

This two-layer approach lets Apple check only vouchers that have matches without being able to examine the images within the vouchers. Only once Apple’s servers determine a threshold of vouchers with matching images has been crossed can the secret be reassembled and the matching low-resolution previews extracted. (The threshold is set in the system’s design. While Apple could change it later, that would require recomputing all images according to the new threshold.)

Using a threshold of a certain number of images reduces the chance of a single false positive match resulting in serious consequences. Even if the false positive rate were, say, as high as 0.01%, requiring a match of 10 images would nearly eliminate the chance of an overall false positive result. Apple writes in its white paper, “The threshold is selected to provide an extremely low (1 in 1 trillion) probability of incorrectly flagging a given account.” There are additional human-based checks after an account is flagged, too.
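For a rough sense of the arithmetic, the Python sketch below computes the chance that an innocent account produces at least 10 false matches. The 0.01% rate and the threshold of 10 are the hypothetical figures from the paragraph above. The library size of 10,000 photos is an assumption added here, as is the simplification that every photo is an independent trial. Apple's real rate and threshold may be quite different.

```python
from math import exp, lgamma, log, log1p

def log_pmf(i, n, p):
    """Log of the binomial probability of exactly i matches in n photos."""
    return (lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1)
            + i * log(p) + (n - i) * log1p(-p))

def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p), summed in log space to avoid overflow."""
    return sum(exp(log_pmf(i, n, p)) for i in range(k, n + 1))

FALSE_POSITIVE_RATE = 0.0001  # 0.01%, the hypothetical figure from the text
LIBRARY_SIZE = 10_000         # assumed number of photos in one account

single = prob_at_least(1, LIBRARY_SIZE, FALSE_POSITIVE_RATE)
threshold = prob_at_least(10, LIBRARY_SIZE, FALSE_POSITIVE_RATE)

print(f"Chance of at least 1 false match:   {single:.3f}")
print(f"Chance of at least 10 false matches: {threshold:.2e}")
```

Under these assumptions, a single false match somewhere in the library is more likely than not, while reaching 10 false matches has a probability on the order of one in ten million. The real figure depends on the true error rate, the threshold, and the library size, none of which Apple has published in detail.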

Our devices also send device-generated false matches. Since those false matches use a fake key, Apple can decrypt the outer envelope but not the inner one. This approach means Apple never has an accurate count of matches until the keys all line up and it can decrypt the inner envelopes.

Q: Will Apple allow outside testing that its system does what it says?

A: Apple controls this system entirely and appears unlikely to provide an outside audit or more transparency about how it works. This stance would be in line with not allowing ne’er-do-wells more insight into how to beat the system, but also means that only Apple can provide assurances.

In its Web page covering the child-protection initiatives, Apple linked to white papers by three researchers it briefed in advance (see “More Information” at the bottom of the page). Notably, two of the researchers don’t mention if they had any access to source code or more than a description of the system as provided in Apple’s white paper.

A third, David Forsyth, wrote in his white paper, “Apple has shown me a body of experimental and conceptual material relating to the practical performance of this system and has described the system to me in detail.” That’s not the kind of outside rigor that such a cryptographic and privacy system deserves.

In the end, as much as we’d like to see otherwise, Apple has rarely, if ever, offered even the most private looks at any of its systems to outside auditors or experts. We shouldn’t expect anything different here.

Q: How will CSAM scanning of iCloud Photos affect my privacy?

A: Again, Apple says it won’t scan images that are already stored in iCloud Photos using this technology, and it appears that the company hasn’t already been scanning those. Rather, this announcement says the company will perform on-device image checking against photos that will be synced to iCloud Photos. Apple says that it will not be informed of specific matches until a certain number of matches occurs across all uploaded images by the account. Only when that threshold is crossed can Apple gain access to the matched images and review them. If the images are indeed identical to matched CSAM, Apple will suspend the user’s account and report them to NCMEC, which coordinates with law enforcement for the next steps.

It’s worth noting that iCloud Photos online storage operates at a lower level of security than Messages. Where Messages employs end-to-end encryption and the necessary encryption keys are available only to your devices and withheld from Apple, iCloud Photos are synced over secure connections but are stored in such a way that Apple can view and analyze them. This design means that law enforcement could legally compel Apple to share images, which has happened in the past. Apple pledges to keep iCloud data, including photos and videos, private but can’t technically prevent access as it can with Messages.

Q: When will Apple report users to the NCMEC?

A: Apple says its matching process requires multiple images to match before the cryptographic threshold is crossed that allows it to reconstruct matches and images and produce an internal alert. Human beings then review matches—Apple describes this as “manually”—before reporting them to the NCMEC.

There’s also a process for an appeal, though Apple says only, “If a user feels their account has been mistakenly flagged they can file an appeal to have their account reinstated.” Losing access to iCloud is the least of the worries of someone who has been reported to NCMEC and thus law enforcement.

(Spare some sympathy for the poor sods who perform the “manual” job of looking over potential CSAM. It’s horrible work, and many companies outsource the work to contractors, who have few protections and may develop PTSD, among other problems. We hope Apple will do better. Setting a high threshold, as Apple says it’s doing, should dramatically reduce the need for human review of false positives.)

Q: Couldn’t Apple change criteria and scan a lot more than CSAM?

A: Absolutely. Whether the company would is a different question. The Electronic Frontier Foundation states the problem bluntly:

…it’s impossible to build a client-side scanning system that can only be used for sexually explicit images sent or received by children. As a consequence, even a well-intentioned effort to build such a system will break key promises of the messenger’s encryption itself and open the door to broader abuses.

There’s no transparency anywhere in this entire system. That’s by design, in order to protect already-exploited children from being further victimized. Politicians and children’s advocates tend to brush off any concerns about how efforts to detect CSAM and identify those receiving or distributing it may have large-scale privacy implications.

Apple’s head of privacy, Erik Neuenschwander, told the New York Times, “If you’re storing a collection of C.S.A.M. material, yes, this is bad for you. But for the rest of you, this is no different.”

Given that only a very small number of people engage in downloading or sending CSAM (and only the really stupid ones would use a cloud-based service; most use peer-to-peer networks or the so-called “dark web”), this is a specious remark, akin to saying, “If you’re not guilty of possessing stolen goods, you should welcome an Apple camera in your home that lets us prove you own everything.” Weighing privacy and civil rights against protecting children from further exploitation is a balancing act. All-or-nothing statements like Neuenschwander’s are designed to overcome objections instead of acknowledging their legitimacy.

In its FAQ, Apple says that it will refuse any demands to add non-CSAM images to the database and that its system is designed to prevent non-CSAM images from being injected into the system.

Q: Why does this system concern civil rights and privacy advocates?

A: Apple created this system of scanning users’ photos on their devices using advanced technologies to protect the privacy of the innocent, but Apple is still scanning users’ photos on their devices without consent (and the act of installing iOS 15 doesn’t count as true consent).

It’s laudable to find and prosecute those who possess and distribute known CSAM. But Apple will, without question, experience tremendous pressure from governments to expand the scope of on-device scanning. This is a genuine concern since Apple has already been forced to compromise its privacy stance by oppressive regimes, and even US law enforcement continues to press for backdoor access to iPhones. Apple’s FAQ addresses this question directly, saying:

We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future. Let us be clear, this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government’s request to expand it.

On the other hand, this targeted scanning could reduce law-enforcement and regulatory pressure for full-encryption backdoors. We don’t know how much negotiation behind the scenes with US authorities took place for Apple to develop this solution, and no current government officials are quoted in any of Apple’s materials—only previous ones, like former U.S. Attorney General Eric Holder. Apple has opened a door of possibility, and no one can know for sure how it will play out over time.

Security researcher Matthew Green, a frequent critic of Apple’s lack of transparency and outside auditing of its encryption technology, told the New York Times:

They’ve been selling privacy to the world and making people trust their devices. But now they’re basically capitulating to the worst possible demands of every government. I don’t see how they’re going to say no from here on out.

Image Scanning in Messages

Q: How will Apple enable parental oversight of children sending and receiving images of a sexual nature?

A: Apple says it will build a “communication safety” option into Messages across all its platforms. It will be available only for children under 18 who are part of a Family Sharing group. (We wonder if Apple may be changing the name of Family Sharing because its announcement calls it “accounts set up as families in iCloud.”) When this feature is enabled, device-based scanning of all incoming and outgoing images will take place on all devices logged into a kid’s account in Family Sharing.

Apple says it won’t have access to images, and machine-learning systems will identify the potentially sexually explicit images. Note that this is an entirely separate system from the CSAM detection. It’s designed to identify arbitrary images, not match against a known database of CSAM.

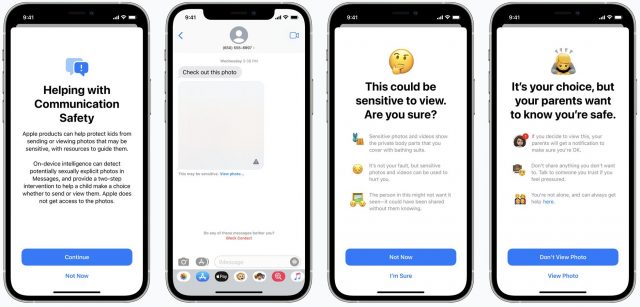

Q: What happens when a “sensitive image” is received?

A: Messages blurs the incoming image. The child sees an overlaid warning sign and a warning below the image that notes, “This may be sensitive. View photo…” Following that link displays a full-screen explanation headed, “This could be sensitive to view. Are you sure?” The child has to tap “I’m Sure” to proceed.

For children under 13, parents can additionally require that their kids’ devices notify them if they follow that link. In that case, the child is alerted that their parents will be told. They must then tap “View Photo” to proceed. If they tap “Don’t View Photo,” parents aren’t notified, no matter the setting.

Q: What happens when children try to send “sensitive images”?

A: Similarly, Messages warns them about sending such images and, if they are under 13 and the option is enabled, alerts them that their parents will be notified. If they don’t send the images, parents are not notified.

Siri and Search

Q: How is Apple expanding child protection in Siri and Search?

A: Just as information about resources for those experiencing suicidal ideation or knowing people in that state now appears in relevant news articles and is offered by home voice assistants, Apple is expanding Siri and Search to acknowledge CSAM. The company says it will provide two kinds of interventions:

- If someone asks about CSAM or reporting child exploitation, they will receive information about “where and how to file a report.”

- If someone asks or searches for exploitative material related to children, Apple says, “These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue.”

Has Apple Opened Pandora’s Box?

Apple will be incredibly challenged to keep this on-device access limited to a single use case. Not only are there no technical obstacles limiting the expansion of the CSAM system into additional forms of content, but primitive versions of the technology are used by many organizations and codified into most major industry security standards. For instance, a technology called Data Loss Prevention that also scans hashes of text, images, and files is already widely used in enterprise technology to identify a wide range of arbitrarily defined material.

If Apple holds its line and limits the use of client-side scanning to identify only CSAM and protect children from abuse, this move will likely be a footnote in the company’s history. But Apple will come under massive pressure from governments around the world to apply this on-device scanning technology to other content. Some of those governments are oppressive regimes in countries where Apple has already adjusted its typical privacy practices to be allowed to continue doing business. If Apple ever capitulates to any of those demands, this announcement will mark the end of Apple as a champion of privacy.

It would have been a lot better received by the user base and privacy folk like EFF and others if the hashing was done only on images uploaded to their servers and not on the user’s device…that’s the first step to what FBI/LEO want which is a real government back door which we know is an oxymoron…and it’s a very strange step for a privacy centric company to take. The DB that they hash against will be required by China for instance to contain other images that the Chinese government considers subversive.

Then there’s the issue that they’re only going to find kiddie porn that has already been discovered and hashed for the DB…it will do nothing to find the snapshot the pervert took of the little girl down the street this morning and sent to his buddies…since that one has never been adjudicated as kiddie porn and hashed into the DB.

I suppose it might be true that they see the handwriting on the wall re privacy and decided to give a little now in hopes of preventing more intrusive government requirements down the road…but doing the hashing on only uploaded photos and doing it on their servers rather than user devices would accomplish the same purpose while still allowing them to say “we have no access to the iPhone’s contents”…because now clearly they will have access and will get pressured by FBI/etc to give them more…and China will just pass a law saying they have to add images to the DB that China says to add and since Apple obeys local country laws they’ll do so and the dissidents will be oppressed even more.

What’s worse…once this gets out all a user has to do to prevent detection is to open the image on their phone and change a single pixel…which means that a different hash gets generated that is completely different since that’s the way hashes work…and thus the image will pass the DB hash test.

Offline encryption is pretty easy anyway…and while this particular special set of circumstances seems good…how long will it be until they’re pressured to modify again for another ‘critical national need’ like anti terrorism. Although to tell you the truth…any halfway smart terrorist is probably already off lining if they are any good…PGP and the like work just fine and the age old book method of selecting words is pretty much unbreakable…as long as the members of whatever group it is use the same edition and printing and don’t pass that info around then it’s just a 123-45 for 45th word on page 123 and a whole series of numbers like that. Essentially a one time pad and especially secure if some obscure book is used…even the NSA doesn’t have enough computers to run it through every possible book that was printed.

I don’t like it…

It’s possible Apple is already doing that. If so, why this?

I don’t understand that at all – server-side would be far more invasive in terms of privacy. Apple has gone to tremendous lengths to do this client-side specifically to preserve our privacy as this way even Apple can’t see the images.

Many think this is the first step toward Apple encrypting iCloud backups – which wouldn’t work if Apple was going to implement a client-side scan. Encrypting iCloud backups would be awesome for user privacy and way outweigh this minor “invasion” of CSAM flagging.

I also don’t see how this is a “slippery slope.” The system Apple has built is so complicated and so specific to CSAM data, how could this be exploited by governments or others? For instance, there is only this one database of image fingerprints – it’s not like the system is set up to have databases for different topics. Which means adding in other fingerprints would get them mixed in with CSAM data, confusing the end results. Say Country A demands Apple include some fingerprints for dissident images, the threshold system still applies, so X infringing images have to be found – there’s no way for the system to know how many of those are CSAM and how many are other types.

Sure, as Gruber says, Apple could change the way the system works – but I think we can trust Apple enough to know they wouldn’t do that. (They’re the same company that refused to build a compromised OS for the FBI even though technically, they have the ability to do so. This would be the same thing: “Yes, we could compromise our security, but we won’t.”)

(Some argue that Apple has already compromised by using servers in China for Chinese users as the law there requires, but that’s vastly different from Apple writing software to spy on its users. In that case China is doing any spying, not Apple.)

That’s not how this image fingerprinting works. The hash created is apparently the same regardless of the image size (resolution), color, and even cropping. I have no idea how that works, but it’s apparently not easy to modify the image to avoid the same fingerprint.

Isn’t the theory that Apple may eventually turn on encrypted backups, in which case server-side scanning couldn’t happen?

That’s the only scenario that makes sense of this to me. Apple is infamous for long-term planning, so it wouldn’t surprise me if this is part of a long process to go fully encrypted.

Backups wouldn’t affect iCloud Photos. Apple would have to disable web-based access to iCloud Photos in order to enable E2EE as they do for the face-matching characteristics shared among devices for Photos’ People album, iCloud Keychain, and a variety of other miscellaneous elements.

Anything accessible via iCloud.com through a simple account login lacks E2EE. I’m not sure Apple is at the point where they would want to disable calendar, contacts, notes, and photos at iCloud.com—or build reliable in-browser device-based encryption, which is doable but would need to be locked to Safari on Apple devices, in which case, why not use a native app?

Related, email will never be E2EE until a substantial number of email clients embed and enable automatic person-to-person encrypted messages. People and companies have been working on that for decades.

It’s true that the actual matching and computation as to whether thresholds are met is done on the client Mac. However, it is only done on photos that are candidates to be uploaded (i.e. being imported into iCloud Library). If the process was carried out on the server, then Apple would see the scores for EVERY photo uploaded. So, by having the computation happen on the client, Apple only gets involved when the threshold is met, rather than being involved in the upload of every photo.

Speak for yourself. I don’t trust them to do that. Perhaps here in the US, but I certainly wouldn’t trust them with that kind of power in China.

There’s a world of difference between saying we can’t build something from scratch vs. we can’t change this parameter from 20 to 2 or we can’t add another 1000 hashes to the 20 million we’re already checking against.

They wouldn’t have had to “build something from scratch” – all the FBI wanted was for Apple to turn off the escalating time limits for wrong passcodes. That could probably be easily done by turning off a few flags in the OS and recompiling a special less secure version of the OS.

And modifying this new CSAM checking isn’t just adding some new hashes – the whole system would have to be redesigned to work a completely different way for it to do other kinds of searching. That’s not trivial and would require much engineering and research.

As Rich put it (I’m not sure it’s in the article), Apple is distributing the computational task across all users. But 100% of images slated for upload are scanned and every uploaded image has a safety voucher attached. A voucher marks that scanning occurred, but reveals nothing without extra steps about whether a match was made.

Not the way the system is described. Apple could add hashes and tag them for different countries or purposes, fracture keys across matches in different ways, etc. They can do anything at this point and not tell us. (They could have done so in the past, but likely would have violated various U.S. laws without providing any disclosure of it.)

The entire system is based on using an arbitrary set of image hashes without knowing what is in the pictures. The same kind of hashes could be created from other images.

Apple’s argument in that case was as Simon says: the feds wanted them to create FedOS that would have different rules and help the FBI install this replacement OS without deleting the data in Secure Enclave or elsewhere. FedOS would have allowed far easier passcode cracking.

That’s a good point. The only other reason I can think of Apple adding this then, is that doing it this way (client-side) is more private (Apple never sees the images unless the system flags them and they have to be verified by a human).

In theory Apple could do it the same way server-side, but that’s a slipperier slope, as Apple could change the behavior with no warning to the user, and Apple is essentially scanning every image with no verification that it’s done privately (like it would be on-device).

Most companies would be going out of their way to do it server-side – it’s far easier – but Apple is going to extraordinary lengths to do this client-side to preserve our privacy. Yet the outcry is that Apple is suddenly evil for doing this.

Yes, but wouldn’t those all be flagged as CSAM? Is the system really built so they can flag different kinds of content? From my (limited) reading it seemed that there is no setting for the type of content. It seemed very binary, either CSAM or not. Mixing in political or other “bad” content hashes would trigger the same way (once it exceeds the threshold) and it would take extra processing (or a human) to figure out what is what.

Speaking of the human side, what good would adding other trigger content do to whatever organization wanted it? This info isn’t automatically passed on to the authorities – it goes to a human processor first. So would an Apple employee really be in charge of reporting a dissident image to China, for instance? That sounds like a scenario Apple would go to great lengths to avoid.

Creating a special OS build for the Feds alone and putting it onto an existing iOS device remotely without tampering with any user data on it in the process is easy?

OMG “completely redesigned”. Now that’s just patently false.

If you read all the white papers Apple published, the system is only described at a high level. The three researchers who published papers Apple quoted only describe it at a high level. We have no idea of how the implementation works.

The CSAM hashes are a database that Apple creates from data provided by NCMEC (not sure if NCMEC runs the algorithm Apple gives them against images or what). That database can certainly have additional fields. One field can be “country to apply.” Another can be “kind of image type”: CSAM, human rights activist faces, etc.
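
To make the “additional fields” point concrete, here is a purely hypothetical sketch of what such a record could look like. None of these names (`HashEntry`, `category`, `country`, `entries_for`) come from Apple’s documentation; they only illustrate the speculation above and say nothing about how the real database is laid out.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical record layout: these field names are assumptions made up for
# illustration, not anything Apple has described.
@dataclass(frozen=True)
class HashEntry:
    image_hash: bytes              # opaque fingerprint of a known image
    category: str = "CSAM"         # assumed extra field: kind of content
    country: Optional[str] = None  # assumed extra field: None means "everywhere"


def entries_for(database, country):
    """Return the entries that would apply to a device in `country`."""
    return [e for e in database if e.country is None or e.country == country]


# Two made-up entries: one global, one tagged for a placeholder country "XX".
database = [
    HashEntry(b"\x01" * 4),
    HashEntry(b"\x02" * 4, category="other", country="XX"),
]

us_entries = entries_for(database, "US")  # only the global entry applies
xx_entries = entries_for(database, "XX")  # global entry plus the tagged one
```

Whether anything like this exists is exactly what we can’t tell from the outside; the sketch only shows that nothing about a hash list by itself rules it out.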

That’s a structure Apple describes, not a, say, legal process nor is there any transparency.

What you’re describing is a large array of assumptions, sorry! These include:

Most likely, China would provide a series of hashes to Apple and would require Apple forward matching images and account information to Chinese censors to review.

Until China passes a law requiring companies to include image hashes that the Chinese government wants…and Apple will obey the local country laws.

Interesting title.

The Hungarian government also just introduced a law with the exact same phrasing: For the expanded protection for children. Among other things, it also outlaws the showing of homosexuality on TV-shows, as it is not according to the Hungarian tradition or whatever, and in their point of view just as dangerous for children to see on-screen.

I wonder if they could even show an interview with Tim Cook on Hungarian TV given this - and now they will ask Apple to scan for photos in breach of that law too. Perhaps Tucker Carlson can find out doing his gig in Budapest this week…

The road to hell is still paved with good intentions - and Apple is killing the main reason to be a loyal customer: privacy.

PS. Years ago, I had a support case with a Windows user whose anti-virus app - which was using hashes similar to the way this new system is supposed to work to find viruses - stopped them from working on a GIS-data set, that apparently had the same hash as a virus - but was definitely not. This will happen too in this case.

Nonsense…if the same images are scanned/hashed on the server the privacy implications re those images are the same…and if on the server then our devices haven’t been compromised with a capability that can easily be abused by requiring changes to the DB.

I really don’t understand why you’re in favor of server-side scanning. That to me is 100x worse. Apple can change the scanning algorithms at any time and none of us would know. They could add on scans for anything, even facial recognition looking for known criminals or whatever. Client-side is a lot more limited and that’s better from a privacy perspective.

I’m not saying they can’t change the on-device method, but that’s at least a lot more complicated and requires an OS update, etc. That seems a lot safer from a user-perspective.

This is speculation. While I agree we haven’t seen Apple’s code and can only read the high-level white papers describing their system, from what I’ve read I don’t see a way for this type of information to be included and/or passed on to the server.

For example, Apple’s white paper shows that the way an image is reported as a positive hit is by successful decryption. In other words, the image’s status (positive or negative as matching the database) is used in the encryption key (so to speak) so when the server tries to decrypt it, only positive hits are successful. There’s no flag or tag on the file that tells it is CSAM or something else. It’s just binary – successful or not.

Because of the second layer of encryption, nothing more can be deduced about the image until the threshold quantity is exceeded. So until then, there is no real information. Even if additional information about the image type – “CSAM”, “terrorist”, “activist”, “jaywalker” – is included, it would have to be included within the voucher which is inside the second layer of encryption which can only be seen once the threshold is exceeded.
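
For anyone wondering how “nothing can be deduced until the threshold is exceeded” can work at all: Apple’s technical summary refers to threshold secret sharing. Below is a minimal sketch of the textbook Shamir scheme, not Apple’s implementation. The field size, the function names `make_shares` and `recover`, and the example numbers are all my own assumptions.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; field size chosen only for illustration


def make_shares(secret, threshold, count):
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def poly(x):
        # Evaluate the polynomial at x using Horner's rule.
        acc = 0
        for c in reversed(coeffs):
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, poly(x)) for x in range(1, count + 1)]


def recover(shares):
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


key = 123456789  # stand-in for a decryption key
shares = make_shares(key, threshold=3, count=5)
enough = recover(shares[:3])   # three shares reconstruct the key
too_few = recover(shares[:2])  # two shares yield an unrelated value
```

With fewer shares than the threshold, interpolation produces a value that is statistically unrelated to the key, which is the property the post above relies on. Note that the threshold is just a parameter chosen when the shares are made.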

That to me seems like a very poor system for finding terrorists or dissidents or others, as everything is still lumped together into one “exceeded the threshold” group of images.

Then you get into the issue of accuracy. Presumably Apple included the threshold system to prevent false positives and only flag egregious users (i.e. an account with a lot of CSAM). We don’t know what that threshold level is (Apple isn’t saying), but clearly it must be a certain amount in order for Apple to calculate their “1 in a trillion” odds of false positives.

Now if the system is modified to start watching for additional content (terrorists and jaywalkers), and those results are mixed into the group that exceeded the threshold, wouldn’t that interfere with Apple’s “1 in a trillion” calculation?

For example, say 10 is the threshold. Someone has to have at least 11 CSAM images to be reported. Apple has decided that the odds of someone with 9 images is lower than “1 in a trillion” and too risky (as some of those might be false positives), so they set the threshold higher.

But if the threshold is exceeded and there are 3 terrorist hits, 3 activists, 2 jaywalkers, and 3 CSAM, none of those categories have enough hits to ensure an accurate report (you need at least 11 of each to ensure the 1 in a trillion odds). Yes, a human could look at the images and decide, but you’ve basically ruined the whole threshold approach by mixing in multiple types of content reporting into one system.
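
The threshold arithmetic above can be sketched with a binomial tail. Every number here is an assumption for illustration: Apple has not published a per-image false match rate, the library size of 10,000 photos is made up, and treating matches as independent is a simplification. The point is only the shape of the curve, not the values.

```python
import math


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p), with each term computed in log space."""
    if k <= 0:
        return 1.0
    total = 0.0
    for i in range(k, n + 1):
        log_term = (math.lgamma(n + 1) - math.lgamma(i + 1)
                    - math.lgamma(n - i + 1)
                    + i * math.log(p) + (n - i) * math.log1p(-p))
        term = math.exp(log_term)
        total += term
        if term < total * 1e-18:  # remaining terms are negligible
            break
    return total


PHOTOS = 10_000          # assumed library size
FALSE_MATCH_RATE = 1e-6  # assumed chance that one innocent photo matches

one_hit = prob_at_least(1, PHOTOS, FALSE_MATCH_RATE)
three_hits = prob_at_least(3, PHOTOS, FALSE_MATCH_RATE)
eleven_hits = prob_at_least(11, PHOTOS, FALSE_MATCH_RATE)
```

Under these assumptions, the chance of 11 accidental matches is many orders of magnitude smaller than the chance of 3, which is why a category with only 3 hits would not carry the same assurance as one that cleared the full threshold by itself.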

Now some countries wouldn’t care about threshold, of course, and would be willing to crack down on an individual just based on suspicion, but this seems like a weird system for Apple to create to let it be abused like that. From the description, it seems Apple only wants this to apply to the most egregious users, and the way that it’s designed that would apply to whatever content was being searched for (CSAM or something else).

If Apple just wanted to include a back door, there would be much easier ways of doing it. This system seems to me to be deliberately designed to be extremely limited – and any of the doomsday scenarios panicky people describe seem really unlikely. There are probably 100 other places we’re trusting Apple to do what they say that are more vulnerable than this supposed “backdoor.”

Correct. We don’t know anything except they’re building a system that they entirely control, offer no transparency into, and will not allow outside audits of.

The threshold could be set to 1. The sharding of the encryption happens on device. This can be modified. It likely will be.

The best way for totalitarian governments to implement surveillance is on the back of systems that people all agree are necessary.

The most dangerous phrase in the world is “trust me.”

Thank you, Glenn.

The most dangerous phrase in the world is “trust me.”

I agree. I have to admit that with Apple’s move I have lost trust in this company. This makes me sad.

In my view Apple crosses several red lines, foremost in respect to its reputation as a company that values the privacy of its users above anything else, independently of whether this reputation is justified or not.

Specifically, Apple crosses red lines concerning the functionality of its apps:

Detecting child pornography or any other type of misbehaviour has no place in an operating system.

N. E. Fuchs

The CSAM image fingerprinting sounds like it is based on the PhotoDNA algorithms, which detect specific known images (despite transformations on those images), and are not image “recognition” techniques per se (they don’t recognise a known face in an arbitrary image). There’s some extra techniques described in there to prevent it being used to “test” images locally, and to not reveal information until a threshold number of matching images has been reached.
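
To illustrate the difference between this kind of fingerprint and an ordinary file hash, here is a toy “average hash” in Python. It is neither PhotoDNA nor NeuralHash, just the simplest perceptual hash I know of, and the 4x4 “image” is made up. It shows the general idea: a small transformation leaves the fingerprint alone while a cryptographic hash changes completely.

```python
import hashlib


def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set when the pixel is above the mean."""
    flat = [v for row in pixels for v in row]
    mean = sum(flat) / len(flat)
    return [1 if v > mean else 0 for v in flat]


def hamming(a, b):
    """Number of positions where two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def sha256_hex(pixels):
    """Cryptographic hash of the raw pixel bytes, for contrast."""
    return hashlib.sha256(bytes(v for row in pixels for v in row)).hexdigest()


# A made-up 4x4 grayscale "image", a slightly brightened copy, and a negative.
original = [[10, 200, 30, 220],
            [15, 210, 25, 230],
            [12, 190, 35, 225],
            [18, 205, 28, 215]]
brightened = [[min(255, v + 5) for v in row] for row in original]
inverted = [[255 - v for v in row] for row in original]

same_picture = hamming(average_hash(original), average_hash(brightened))
different_picture = hamming(average_hash(original), average_hash(inverted))
file_hashes_differ = sha256_hex(original) != sha256_hex(brightened)
```

The brightened copy keeps the same fingerprint even though its SHA-256 is different, while the negative differs in every bit. Real systems are far more robust than this, but the principle of matching a known picture rather than recognising its subject is the same.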

Quite clever stuff.

I don’t see how it could be repurposed for recognising “persons of interest” in any picture, or for detecting arbitrary documents or information - the fingerprint technique would be completely unsuitable, and another would have to be implemented (“that’s not what we’ve built”).

It clearly could be used to detect other commonly shared images, such as those of Tiananmen Square in 1989.

I think the question is absolutely about what future service development is facilitated, if Apple are already checking uploaded iCloud pictures against the database (which I understand they are already required to do, by US law).

One could also ask why they should only be required to obey US laws, whilst doing business across the world. Indeed Apple already do follow local laws where required to do so, if they want to continue doing business. I think Tim Cook himself did say of the FBI request, if that’s what the US Congress wants then they can pass laws to require it.

Tim Cook:

I think that applies here. It will change behavior. These devices follow us, monitoring everything we do (even our cars have these abilities). Even worrying about such things opens us up to, “Why are you worried about such things? Are you hiding something?”

And some people worry about a national ID card?

I’m not particularly…just not in favor of scanning on my device unless I can choose to turn it off…and disabling iCloud Photos requires losing a major function of iCloud. In addition…scanning on device is introducing a back door although it might be just a back window if one wants to debate semantics…and opening that access for on device scanning of any sort opens the door to the government demanding more access…and they can no longer say ‘we can’t do that’.

In addition…once all the court cases are decided…I believe that the courts will hold that anything uploaded to any cloud has no expectation of privacy…I don’t expect any today already so anything that gets uploaded that I want to remain private falls into Steve Gibson’s TNO (trust no one) and PIE (pre internet encryption) rule.

My preferred solution is that iCloud and iPhone provide universal encryption for which Apple doesn’t hold the key and for the courts to hold that providing your face or fingerprint is protected just like providing the password already is.

And therein lie two potential problems.

If you believe that the database will have more than a small fraction of the world’s kiddie porn images…there’s some swampland in NJ I can sell you where Jimmy Hoffa and JFK are living like hermits. Yes…there are some identified and traded images…but most of them are home grown.

Second…since this is some sort of hash and image recognition (DNA) combination…the hash portion will be completely different if you change the image…and the recognition potential can and will be degraded by altering the image. The paper I read talked about ‘minor cropping’ being still recognized…but that leaves major cropping, expanding the canvas with Photoshop content aware fill, changing other image parameters…eventually the DNA match will fail and it will still be kiddie porn, just a so far not well known image. Which gets us back to the ‘this will only affect a small percentage of kiddie porn images on the internet’.

It isn’t kiddie porn…but just for example I just did a search on a well known porn site with wife as the search term…and got 200,000 results…the round number tells me that the buffer just quit, not that it ran out of images matching the search.

This is only a minor bandaid solution anyway…so why is Apple sacrificing a long standing company policy that user privacy is their number one concern.

We also really don’t know why they did it…I’m sure the idea and decision were hashed out over hundreds of hours of internal discussion unless Tim Cook made a unilateral decision…and I also know that unless Apple monitors places like Tidbits Talk, Twitter, Mac oriented sites and the like and decides to change a decision based on what they see…and I highly doubt they do or will…there isn’t a darned thing we can do about it so it probably isn’t worth all the effort we have collectively put into these threads.

I think I will still be using iCloud Photos.

My problem is that Apple is putting code on my devices that uses resources, but which cannot benefit me directly. Recently they started the Find My network, but that is optional, and can benefit me if my iPhone is offline. The recent COVID-19 Exposure Notifications function was optional. In-App Ratings & Reviews is optional.

But CSAM-scanning is, to me, like software DRM, including all the means that Apple takes to avoid jailbreaking: in that it really benefits someone else more than me, directly.

What I have not seen mentioned is how much disk space the hashes from NCMEC images take up. And how much power/battery NeuralHash and Private Set Intersection take up. Are these hashes added with system updates, or sent to devices in the background? And if the number of NCMEC images increases, do the hashes ever decrease in size? As in: it’s 2030. Therefore all iOS devices have 2GB less storage because of ever-increasing image hashes, and your battery life is now 1 hour shorter.
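
The storage question is easy to bound with back-of-envelope arithmetic, as long as the inputs are treated as guesses. In this sketch the entry size (32 bytes) and the entry count (one million) are placeholders I picked; I have not seen Apple publish either figure, so plug in your own.

```python
def database_size_mb(entry_count, bytes_per_entry):
    """Storage for `entry_count` fixed-size entries, in megabytes (10**6 bytes)."""
    return entry_count * bytes_per_entry / 1_000_000


def entries_in(budget_bytes, bytes_per_entry):
    """How many fixed-size entries fit in a storage budget."""
    return budget_bytes // bytes_per_entry


ASSUMED_ENTRY_BYTES = 32       # placeholder, not a published figure
ASSUMED_ENTRY_COUNT = 1_000_000  # placeholder, not a published figure

size_mb = database_size_mb(ASSUMED_ENTRY_COUNT, ASSUMED_ENTRY_BYTES)
# Entries needed to fill the 2GB from the 2030 scenario, at the assumed size.
entries_for_2gb = entries_in(2_000_000_000, ASSUMED_ENTRY_BYTES)
```

At these made-up sizes, the database would have to grow to tens of millions of entries before it approached 2GB. Battery cost is a separate question that this arithmetic says nothing about.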

For some time now, Apple has been criticized for not having an anti child porn policy when Facebook, etc. have had very active ones. It hasn’t seemed to matter that their policies haven’t seemed to be very effective over the long term at all. They still regularly get mostly very positive press for continuing to try:

Apple’s PR department seems to have done an exceedingly crappy job handling the press with this initiative. They need to respond better, and fast; Apple has been eating dog poop over this since it was announced. If I were Tim Cook I’d be kicking some PR butt.

The description Apple provides of the technology makes it clear that it’s designed to find close matches while also dealing with issues of orientation, obscuring, etc., designed to fool pixel-by-pixel matches.

This is definitely distinct from the “find all cows” or “find all pictures of Enemy of the State XYZ.” However, the difference isn’t extreme? We know they already have a “find all cows” ML algorithm for Photos that runs locally. Those matches are not performed in the cloud. So a coarse “category” matching already exists in all its operating systems with Photos. A tight matching algorithm will now be added for CSAM.

First, I’d make the polite suggestion that the term “kiddie porn” is inappropriate given the nature of the material. I think the term emerged because people didn’t want to deal with the reality of what CSAM is—it’s not pornography, it’s violent criminal activity.

Second, the NCMEC database is 100% about preventing re-victimization, yes. While an important goal, it’s not a way to remove children who are in actively dangerous situations, because NCMEC-validated and fingerprinted images are ones that have gone through a rigorous screening process.

Third, the database is certainly only some subset of CSAM circulating. It’s not prospective in finding new material (hence not protecting actively harmed children).

There’s a reasonable amount of attention that should be focused on keeping this material from circulating. The abrogation of civil rights and privacy of everyone using devices seems pretty high without any real discussion. Cloud-stored images seem like a different category (and peer-to-peer networks entirely differently) because they pass over public and private systems and have a lower expectation of privacy than stuff stored on devices in our possession. Since the point of this exercise is to prevent circulation of images, checking device uploads seems like the wrong focus, too, particularly if Apple already scans iCloud, which it may be doing? (Likely is doing?)

I agree that the redistribution of known images is bad…and I’m all for Apple as well as Google, FB, LEO and the DA to go after them…just don’t go after them on my device…last time I checked a search required a warrant and while Apple isn’t the cops…if they turn users over to the group and they turn them in to the cops then Apple is acting like law enforcement and the search requirements apply. I’m just fine with them scanning, hashing, reviewing, and turning images on their computer over to the cops…but implementing what is essentially a back door in the OS that…right now for this specific good purpose…is all it will be used for…but we know that China will require Apple to use the same PhotoDNA techniques on the images that China wants to know about (or the Chinese will roll their own photoDNA and tell Apple to use it…whatever)…and we know that FBI, et al. will try and use the back door to get Apple to take care of some other good purposes…white supremacy, black supremacy, the Moors, terrorists, what have you…and Apple can no longer say “No, we won’t put a back door in our system because the user’s right to privacy is paramount.”

That’s my biggest gripe…that Apple…who says trust us, we believe in user privacy…is deliberately introducing the capability to conduct warrantless searches on our iPhones. Yes…today it is only photos that get uploaded to Apple’s iCloud server…but next month they can easily scan all of our photos sans warrant and narc us out to the cops just because Tim Cook decides that’s the thing to do.

My second biggest gripe is that…just like the security theater at the airport and the meaningless python roundup in the Everglades that happened recently and caught 230 or so snakes…out of many 10s of thousands that are there…this is essentially a meaningless feel good gesture that won’t accomplish anything…because the vast majority of that material on the net or in iPhones is self generated and has never been legally ruled as illegal and photoDNA’ed to put it into the database. The material is only illegal if the child is under 18…and for vast quantities of material out there…it is simply not feasible to determine illegality or not…having worked on a case of this back in my working days the forensic gynecologist told me that determining age based on a photo of a teen female was at best +/- 2 years and in most cases it was 3.5 years based on physical appearance. So…this might catch a bad person or even a dozen or more…but that’s an insignificant impact on the problem and mostly lets them say we are doing something about it. Maybe spending R&D money to eliminate zero day flaws that allow things like Pegasus (or whatever the correct name is if that’s not it)…is more productive in device security although maybe not as good

Solving this issue…unless we go to a police state…is an impossible task…which isn’t to say that LEO shouldn’t try…but Apple ain’t LEO and meaningless PR stunts aren’t going to help.

They’ve taken a significant step away from their ‘privacy is in our DNA’ and user-privacy-first history.

A couple of Twitter threads that I found interesting and thought this group might as well:

Me too…sorry if my last reply caused you to think I was uncivil…but in my view I was just telling it like it is. No worries though…I’m actually surprised that Adam hasn’t squashed this thread already even though I thought the debates were civil and reasonable, although I recognized them as pretty much pointless as Apple doesn’t really care what we users think IMO.

I’ve been offline all weekend while spending time with visiting friends and family, and I’m disappointed that this topic devolved as it did.

If you think you’re posting something I’m going to take exception to, don’t post it. Saves everyone trouble. I’ve removed all the back-and-forth sniping, and I will continue to cut hard on anything that doesn’t directly relate to discussion of what Apple’s doing here. If it continues to get out of hand, I’ll shut the thread down entirely.

As the Discourse FAQ says:

Howard Oakley has posted on this subject:

Apple has now posted its own FAQ. We’re pondering how to integrate it into our coverage.

I don’t agree. Apple has responded (often in significant ways) to pushback on policies. But the reason to have a discussion here isn’t to get Apple’s attention. It’s to understand what’s going on and hash out opinions about what we think.

I strongly suspect that coverage like ours and discussions like this prompted Apple’s FAQ this morning. If they’d been planning to release it all along, it would likely have come with the original materials.

So yes, Apple does pay attention. Not necessarily to anything specific or any particular complaint, but to the general tenor of reactions and discussions. I wouldn’t be surprised if Apple’s FAQ expands as well, as the people in charge feel the need to respond in more detail or to other concerns.

I’m reminded of the performer who is trying to keep multiple plates spinning atop poles. As he dashes from pole to pole to adjust the spin, our eyes are drawn to the plates most in danger of falling, not the ones successfully spinning.

That seems to be our nature – looking for the worst to happen.

And yes, the worst does sometimes happen, but if we resist trying to make something better because our actions might have consequences we did not intend, then we will never get better.

I applaud Apple for making the attempt, and have confidence that they will do what they can to minimize unintended consequences. Will it be enough? Only time will tell.

Ben Thompson’s Stratechery column hits some of the same issues we’re talking about here, the difference between capability and policy. In the past, Apple said there was no capability to compromise the privacy of your device; now it’s saying that it has no policy to do so.

https://stratechery.com/2021/apples-mistake/

Absolutely. I guarantee you that Tim Cook gets regular briefings on the general tenor of press coverage, forum discussions, social media, complete with quotes.*

*multiple family members in PR and PR adjacent industries as reference.

It’s disturbing. I think this paragraph from the article gets to the heart of the problem:

Child sexual exploitation IS a current problem.

The “slippery slope” issue is a potential problem.

It comes down to balancing the rights of privacy, with the rights of the victims of unspeakable crimes. In this case I agree with Apple’s decision to do what it can to protect children.

Folks, I agree with everything stated in this comment section. Both points of view and we should help to protect kids who can’t really protect themselves. BUT…

In my belief, Apple doing what it has proposed is tantamount to making everyone who owns an Apple device, “guilty until proven innocent.” In my estimation this effectively is akin to an “unreasonable” search. Warrantless. The perverts that trade or engage in such heinous activities should be dealt with forcefully but that doesn’t give anyone the right to invade my privacy, papers, property, things. No matter how well intentioned the process.

Whatever happened to, “being secure, in your person, papers, things…”?

I’m viewing the iCloud Photos NeuralHash feature less as keeping individuals safe, or persecuting baddies, and rather as an attempt to keep such material off iCloud Photo servers.

Framed as a “if you want to pay to copy your files onto our server, then it’ll be NeuralHashed first”, it seems… almost acceptable-ish.

And over time, if the hashes are on the iOS devices, I would not be surprised if Safari and other programs that can upload data also NeuralHash data before uploading. Not saying that I am ok with that.

That applies only to the government. With a private company like Apple, you’d be agreeing to the system by virtue of the legal agreements you enter into when using the device.

Of course in this case it’s a bit more nuanced because Apple is implementing technology and company procedures that amount to an extended arm of government (LE & prosecution).

Several people have already pointed out that Apple could well be implementing this in order to preempt possible government action. If that is indeed the case, this IMHO moves us even closer to 4th Amendment territory.

Here’s another interesting take on the situation:

https://www.hackerfactor.com/blog/index.php?/archives/929-One-Bad-Apple.html

That doesn’t make it right.

“Right” is a different question. The point is that the Fourth Amendment explicitly refers to the government. @Simon is suggesting there may be more of a connection because NCMEC has special status within the government, and that may be true, but that’s an issue for experts in Constitutional law to hash out. And we aren’t.

Yes, private companies are not bound by the Bill of Rights.

Unless they are acting on behalf of or at the request of a government agency.

This is one of the big issues in the news today regarding social media companies censoring what their customers post. They have been saying that as a private company, they can block or allow anything they want for any reason they want. But when news recently broke implying that government agencies are working with them in order to determine what “misinformation” should be blocked, that changes the game.

It’s no longer a clear-cut case of a private company doing what it wants on its own systems, but is now a case of the government ordering (strongly suggesting?) that they do so. Which means the Constitution now applies. The government can’t dodge its Constitutional obligations by telling a private corporation to do what it isn’t allowed to do.

With a warrantless government search, you are helpless (with protection only from the court system, you hope.). With this action by a private company, you still have choices; don’t use iCloud Photos; or use another mobile device that doesn’t search all of your photos; or, don’t use a connected device at all. And, of course, use your voice to make your displeasure known.

Then Apple should just have said so. As I said before…I’m quite fine with them hashing anything on their servers and even turning them over to the cops…as long as they tell users that they’re going to do that and either grandfather in images uploaded before they made the announcement or offered users an opportunity to delete them from iCloud photos before the hashing started…either of those would protect the privacy the user thought they had before the new hashing stuff.

Then they should say that this is part of a long range plan…or at least leak it in some not to be attributed fashion like they probably do a lot already…I know that they don’t talk about unannounced products…but informing users of something coming down the pike would be nice even if they couched it in a ’this is our current plan, no firm date and no firm commitment because it might not work out’ vein…

And therein lies the problem…policies can be changed a whole bunch easier than capabilities.

Adam’s absolutely right about the Fourth Amendment applying to government entities not private ones. That said, that doesn’t mean that Apple searching your phone without your permission (or car or house) wouldn’t be illegal. What constitutes “your permission” is an interesting question.

You may be right there…I’m sure folks like you are much better informed about all things Apple than us mere mortals are. Now whether they actually change the way that this is supposed to work because of what they see here or elsewhere…or whether they just send out FAQs to try and silence the rumbling…doesn’t really change what they’re doing and why. Just like all the complaints I see on MacStories and other places about the horrible things being done to iOS and iPadOS Safari…yes, the thing keeps changing in beta; but will/does Apple really go back to the old way of how Safari works on iOS/iPadOS or will they just do what they’re going to do anyway? There might be a little of the former…but by and large Apple’s going to do what Apple is going to do and mostly isn’t going to change course because a few users complain. They’ve always been about a consistent UI and not allowing things to change…even though many users would prefer a different thing than Home Screens and default apps…but Apple in its wisdom ignores that (mostly) and does what they feel like doing. Given that history…are they really going to change how this works because a few users complain about it? I’m thinking the odds on that are pretty darned low.

Protecting children is good…but as I said in another message on the thread this will only affect a small percentage of the relevant material around. Anything that was originated by an individual user…or that was shared by another user to him/her and hasn’t been through the official declaration as illegal and got the classifying/hashing voodoo done to it…will not be discovered by this system…and while I’m not an expert on the subject I would guess the home grown variety quantity of material far exceeds the known/classified/hashed quantity…so this seems much more like a feel good PR move so that Apple can say “we’re doing something about it”…and it may even be more secure and less privacy invasive than what FB/Twitter/google/whatever is doing…but it’s still a big step back from user privacy first which has heretofore always been the top priority. I just can’t see how something that (a) won’t affect most of the material in question and (b) is thus mostly a feel good PR thing is worth it for taking that big step back.

Maybe your filter is different from mine…but I haven’t noticed any incivility or personal attacks…just passionately expressed ideas and explanations of their opinions. It ain’t my circus here though…it’s Adam’s so he and whoever he allows get to make the rules and enforce them…and the rest of us get to live by those rules even if we don’t particularly like some of his decisions regarding what gets posted…but then his filter might be as different from mine as Glenn’s is.

Just sayin’

Technically…you’re correct. However…if Apple is turning this over to the cops…or to an organization that will turn them over to the cops…then IMO they’re almost acting as a government agent and are at least violating the spirit of the constitutional right if not the actual letter of the law and we would have to see what the courts said about that.

If Apple was officially acting as a government agent for this…then I believe that the courts have held that the constitutional right still applies. If Apple is not acting as an agent…and happens to come across it accidentally and turns it into the cops…then the evidence is admissible in court.

However…if Apple is doing this as a result of sort of a wink wink nudge nudge conversation in the men’s room that was along the lines of “it would be nice if you could do this but we’re not officially asking”…then IMO they are acting as a de facto government agent and the right applies.

Useful TechCrunch interview with Apple’s head of privacy.

Since 9/11 we’ve all been asked, “if you see something, say something.” This can be thought of as Apple saying something. If the authorities take action after being informed by Apple, they will be acting only after obtaining a warrant.

Another way to think of this is that Apple is taking action to prevent collections of CSAM from being uploaded to their iCloud servers. If they scan after the upload, that means that they were hosting these collections prior to the scan.

Only dumb or careless people will be caught. I don’t think that this is really about catching child pornographers, etc. - I think it is about Apple not wanting collections of this material on their property.

IMHO that’s a false equivalency.

Apple isn’t just saying something after they happen to “see” something. They’re building the Hubble Space Telescope and then plan to report on observing alleged occurrences in the outer galaxy.

In essence, they are now trying to sell to us that their telescope can only be used to detect criminal behavior at the far outer edges of the galaxy and that if we’re all good citizens of the universe, we have nothing to fear from their peeking.

Yep! Which has led to countless people of color getting the cops called on them by overaggressive observers, so I’m not sure we should invoke that as a model for Apple.

The app requires parents to opt in to run the app on the iPhones of their kids; they need to make the choice to activate it. It works by scanning Messages and iCloud images, not everything that lives on anyone’s iPhone. The app does not directly scan the photos on the phones. It uses hash case matching instead, and Apple’s live monitors will check the images to verify them. They won’t look at anything that isn’t a match with the database. If the match is verified, children and parents will be warned. Children will also be prevented from sending images that match the database. They are not randomly scanning photos on anyone or everyone’s iPhones, as the CEO of Facebook’s WhatsApp and many others are claiming:

“By the way, do you know which messaging service isn’t encrypted? Facebook’s. That’s why Facebook is able to detect and report more than 20 million CSAM images every year sent on its services. Obviously, it doesn’t detect any messages sent with WhatsApp because those messages are encrypted, unless users report them. Apple doesn’t detect CSAM within Messages either.”

https://www.inc.com/jason-aten/the-ceo-of-whatsapp-attacked-apple-over-privacy-he-seems-to-have-forgotten-he-works-for-facebook.html

You’re still conflating two separate parts of the program, MM. You need to stop doing that.

IMHO, two halves = one whole.

OK, first off, @silbey is right. The Communications Safety in Messages and the CSAM Detection for iCloud Photos uploads are completely different systems that work in completely different ways. The only sense in which they’re related is that they’re both designed to protect children. So let’s not continue that branch of the discussion.

Second, Alex Stamos of Stanford has an extremely good thread on Twitter about this.

As I said before…I’m happy for Apple to see something say something…but they should scan on their end and not my end. That’s better…much better…for my privacy on my iPhone and also according to a link Adam (I think) posted earlier today Apple might be in the wrong legally if they scan on the device.

Transmission of an illegal image to anybody but the NCEC (or whatever its name is) is expressly prohibited by federal law…the article referenced above has the cite…so if Apple is scanning on the device and then transmitting the ‘highly suspected as illegal’ image to themselves instead then technically they’re in violation of federal law. Checking on their end…they did no transmission to themselves…but saw something on an image that was uploaded (exactly as google, FB, et al do) and then tell the appropriate authorities.

For Apple to scan on our devices…and you know that most likely eventually they’ll change this to all photos on the device period…is essentially saying (as another reply suggested) that all iPhone users are subject to a warrantless search because of a few bad apples. And while Apple doesn’t need a warrant…they’re getting quite close to acting at the behest of the government here and in that case the warrant requirement applies as well as privacy and unreasonable search rights.

I applaud them for doing…something…even though I personally think it’s a feel good PR thing and not an actual help with the problem…but they’re giving up a long held “user privacy is our primary goal, it’s in our DNA” position for essentially little or no gain that I can see.

My personal guess is that either Tim Cook…or somebody that has his ear…has a mission in life to help eliminate this illegal material, perhaps because of a family member it happened to or whatever…and this whole thing started out from that mission. I’m against illegal material myself…but an effective solution that doesn’t treat all users as guilty until proven innocent seems like a much better “privacy first” company approach. I don’t know what exactly that approach should be…but this doesn’t seem like the right one…especially as we absolutely know that the Chinese will require Apple to scan for their ‘we don’t like these images’ hashes as well…China will pass a law saying they have to do so and Apple obeys local country laws so they’ll give in just like they did with other privacy related stuff in China.

Another article that may be useful here. The writer here points out why what Apple is doing is legal, but what we’re afraid of is not.

Of course, if you believe that Apple will violate the law and their own policies despite their statements to the contrary, this won’t do a thing to change your mind.

And here is Jason Snell’s view from 10,000 feet.

A good analysis, but he seems to miss one key point (that everybody else seems to have missed as well).

The CSAM database is a set of hashes of specific, already identified photos.

If someone takes a different picture of the same subject, and that photo’s hash isn’t in the CSAM database (e.g. because it hasn’t been circulated), then Apple’s scanner won’t detect anything.
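To make the lookup idea concrete, here is a minimal Python sketch. It assumes an ordinary SHA-256 digest as a stand-in for whatever hash the real system uses, and the byte strings and the names `known_hashes` and `is_known` are made up for illustration. It is not Apple's implementation, only a picture of "match against a list of known items".

```python
import hashlib


def digest(data: bytes) -> str:
    """Return a hex digest of the raw bytes (stand-in for the real hash)."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical stand-ins for image files.
known_photo = b"bytes of a photo that is already in the database"
new_photo = b"bytes of a different photo of the same subject"

# The database holds only hashes of already-identified photos.
known_hashes = {digest(known_photo)}


def is_known(data: bytes) -> bool:
    """True only if this exact item's hash is already in the database."""
    return digest(data) in known_hashes


print(is_known(known_photo))  # True
print(is_known(new_photo))    # False
```

The second photo shows the same subject, but because its hash was never added to the set, the lookup has nothing to match it against.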

This is what makes it less than useful for governments trying to use it to crack down on subversive people. Sure, they can use the tech to detect, block and report specific images, but they can’t use it to detect all similar images.

In other words, while China may be able to use this tech to block well-known photos and videos of Tank Man, they wouldn’t be able to block the generic category of all content referring to the Tiananmen Square massacre, unless they somehow managed to collect a copy of every picture, video clip and audio recording taken. And even then, they still wouldn’t be able to block new and original content (e.g. artwork, re-enactments, discussion and analysis, etc.)

It’s the difference between (for example) looking for a pirated copy of a song and looking for any person or band’s cover of that song or for every audio recording of people talking about the song. One is pretty easy (once you have the database of hashes) and the other requires a massive amount of computing horsepower.

And this is why on-device scanning is actually better than in-cloud scanning.

The amount of processing needed to accurately identify the subject of a piece of media (photo, video, audio clip, etc.) is more than can be done practically on a phone. A phone might be able to do the scan if it has enough memory and a big enough neural processor, but it would consume all of its CPU power while scanning (making it get very hot and drain the battery) and the AI model necessary to accurately perform the identification would be huge - enough that people would easily notice the amount of storage consumed by it. Especially when you consider that, to be useful, the model would have to be updated rather frequently (to accommodate new subjects to detect), meaning lots of very large downloads and re-scans on a regular basis.

In other words, although it might be possible, it would have a massive impact on user experience, and there is no way they could do it in secret. People would immediately notice the massive and constant drain on all system resources, investigations would happen, and we’d all find out very soon afterward.

On the other hand, that kind of surveillance/analysis is nothing unusual for a cloud-based service, and it happens all the time. While we certainly know about much of it (e.g. YouTube content matching), I’m certain there’s a lot more analysis going on that we never find out about until some whistleblower creates a scandal.

In short, Apple’s decision to do all this scanning on your phone and not in the cloud probably goes a long way toward preventing the kinds of abuses many of us are afraid of. Of course, the tech can still be abused, but not nearly to the same extent that would be possible with in-cloud scanning (which some have said would be better).

That would mean that someone owning CSAM could change something small about a known image and have it escape detection. I’m pretty sure Apple is doing something to guard against that, which suggests that no, it’s not quite the same as only the exact precise image will get picked up.

Yep! From Apple’s tech summary:

“The main purpose of the hash is to ensure that identical and visually similar images result in the same hash.”

Emphasis mine. What “visually similar” means is an interesting question.

When I say “similar”, I’m not describing the same thing Apple is.

According to Apple’s CSAM technical summary document, they are using a “Neural Hash” algorithm. This document doesn’t explain it in detail, but they say it generates the hash based on “perceptual features” of an image instead of the specific bit-values.

People familiar with lossy audio encoding (e.g. MP3, AAC) will recognize this term. When used for data compression, you delete data that the algorithm believes will not be perceived by the listener, in order to get better compression ratios. I think something similar is going on here - an algorithm is identifying the data that is most significant to recognizing the subject of the image and is only hashing that.

Their example shows an image converted from color to B&W without changing the hash value. I would assume that other basic transformations (different color depths, different DPI resolutions, cropping out backgrounds, rotations, etc.) would also not impact the hash unless they are so extreme that the subject is no longer recognizable (e.g. reduce the resolution to the point where the subject is significantly pixelated).

Assuming this is correct, it would be hard (but definitely not impossible) to modify an image enough to produce a different hash. The intent of the algorithm (as I understand it) is that the kinds of changes needed to produce a different hash will be big enough so that the result is no longer “visually similar” to the original.

But this is talking about detecting well-known images after various photographic transformations have been applied to them. They are not talking about trying to (for example) detect a different photo of the same person, which would generate a different hash.
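As a rough illustration of that distinction, here is a small Python sketch of a classic "average hash", which is a much simpler perceptual hash than NeuralHash and is used here purely as a stand-in. The tiny 4×4 "images", the pixel values, and all function names are assumptions made up for the example. The point is only that a color-to-grayscale conversion can leave such a hash unchanged while a genuinely different picture produces a different one.

```python
def luma(pixel):
    """Approximate brightness of an (r, g, b) pixel."""
    r, g, b = pixel
    return (r + g + b) // 3


def average_hash(image):
    """One bit per pixel: 1 if brighter than the image's mean, else 0."""
    values = [luma(p) for row in image for p in row]
    mean = sum(values) / len(values)
    bits = 0
    for v in values:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def to_grayscale(image):
    """Replace every pixel with a gray pixel of the same brightness."""
    return [[(luma(p),) * 3 for p in row] for row in image]


def hamming(a, b):
    """Number of bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


BRIGHT = (200, 120, 40)  # brightness 120
DARK = (10, 30, 20)      # brightness 20

# "Known" image: bright left half, dark right half.
original = [[BRIGHT, BRIGHT, DARK, DARK] for _ in range(4)]
# A different picture: bright top half, dark bottom half.
different = [[BRIGHT] * 4, [BRIGHT] * 4, [DARK] * 4, [DARK] * 4]

gray = to_grayscale(original)
print(average_hash(original) == average_hash(gray))             # True
print(hamming(average_hash(original), average_hash(different)))  # 8
```

The grayscale copy hashes identically because the hash only looks at brightness relative to the mean, while the different picture disagrees in 8 of the 16 bits.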

Again, this algorithm, if used for a hypothetical situation of the Chinese government looking to squash dissident material, would be able to detect well-known photos of Uyghur concentration camps, but it wouldn’t be able to detect photos of these camps not previously known to the Chinese government (e.g. ones that were recently taken, or taken from a different angle).

Given the lack of detail in Apple’s explanations and the assumptions you’re making in your analysis, I don’t think we can be particularly confident about things.

Apple actually supplies quite a bit of detail, but do you want a lesson in neural networks?

A convolutional neural network is a common model that is used for image processing. It is used for many different purposes including image identification (e.g. identify what a photo is a picture of), object detection (e.g. identify every instance of different kinds of known objects), pose detection (e.g. determine the position of a person’s body) and facial recognition.

The NeuralHash algorithm is an instance of this that is trained to produce a large number (a “hash”) that uniquely identifies an image.

They train the neural network using the original CSAM images (from the NCMEC database). But they don’t just use the original image for training. They apply a variety of transformations to the image (e.g. color space changes, rotation, scaling, etc.) and train the network on those images as well, so that they will all produce the same hash. And they use transformations that produce dissimilar images for negative training (ensure that they don’t produce the same hash).

Assuming they didn’t screw it up in the implementation, the result will be a neural network that is trained to recognize images from the set in the NCMEC database, that is resilient to image alteration but with a low probability of false positives.
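For anyone who wants to see what "train on transformed copies" might look like as data, here is a minimal Python sketch of building the training pairs only; it does not train anything. The transforms, the tiny 2×2 grids of brightness values, and the name `make_pairs` are all assumptions for illustration, and using other images as the negative examples is a simplification of what was described above.

```python
def rotate_90(image):
    """Rotate a 2D grid of pixel values 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def flip_horizontal(image):
    """Mirror the grid left to right."""
    return [row[::-1] for row in image]


def brighten(image, amount=10):
    """Raise every value by a fixed amount, capped at 255."""
    return [[min(255, v + amount) for v in row] for row in image]


TRANSFORMS = [rotate_90, flip_horizontal, brighten]


def make_pairs(images):
    """Return (image_a, image_b, label) tuples.

    label 1: b is a transformed copy of a (should get the same hash).
    label 0: b is a different image (should get a different hash).
    """
    pairs = []
    for i, img in enumerate(images):
        for transform in TRANSFORMS:
            pairs.append((img, transform(img), 1))
        for j, other in enumerate(images):
            if i != j:
                pairs.append((img, other, 0))
    return pairs


images = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
pairs = make_pairs(images)
print(len(pairs))                       # 8
print(sum(label for _, _, label in pairs))  # 6
```

A network trained on pairs like these would be pushed to give the label-1 pairs matching hashes and the label-0 pairs different ones, which is the "resilient to alteration, few false positives" goal in miniature.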

Unfortunately, I don’t know enough about the guts of neural network training to be able to explain this in greater detail. I hope it helps you understand what’s actually going on in here.

Jason’s well aware of that. If you listen to the latest episode of Upgrade, he and Myke discuss this in detail.

This is why originally I couldn’t understand the concerns about using the system to crack down on political dissidents. But as Jason & Myke discuss, China in this example wouldn’t be trying to block all content referring to Tiananmen Square. They would add a set of images to the database that are known to be circulating amongst people opposed to the government, and use positive matches to identify and target the people, not the content.