#1575: More CSAM Detection details emerge, many-stop route planning with MapQuest, Speedify VPN, macOS 11.5.2

The controversy surrounding Apple’s announcement that iOS 15 would detect child sexual abuse material being uploaded to iCloud Photos continues. Last week, Apple posted yet another explanatory document and sent software chief Craig Federighi to explain in an interview with the Wall Street Journal. Adam Engst dives into the details. Ever needed to solve the traveling salesperson problem in the real world? Adam did, and he shares his MapQuest-driven solution. If you’re struggling with slow or unreliable Internet access, we have a look at Speedify, a VPN that bonds multiple connections to improve performance and reliability. Finally, Apple released macOS 11.5.2 with no details at all about what changed. Should you update? We don’t know! Notable Mac app releases this week include 1Password 7.8.7, Firefox 91, and Timing 2021.5.1.

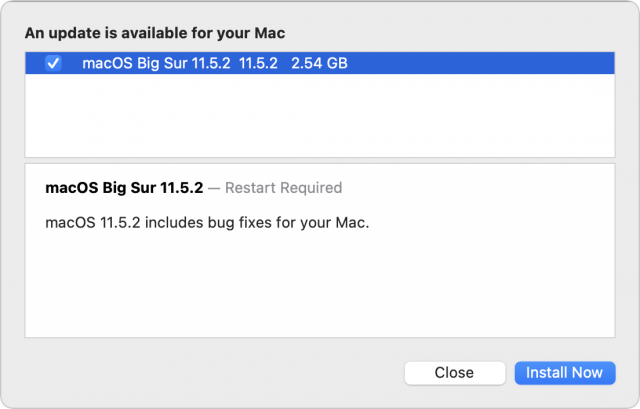

Apple Ships Mysterious macOS 11.5.2 Update

Apple has released macOS Big Sur 11.5.2. The release notes mention only “bug fixes.” There are no security notes. What does it fix? We don’t know! Should you install it sooner or later? We have no idea! If you install it, let us know in the comments if you notice any difference at all.

Two things that aren’t mysterious: the macOS 11.5.2 Big Sur update weighs in at 2.54 GB for a 2020 27-inch iMac, and you can install it in System Preferences > Software Update.

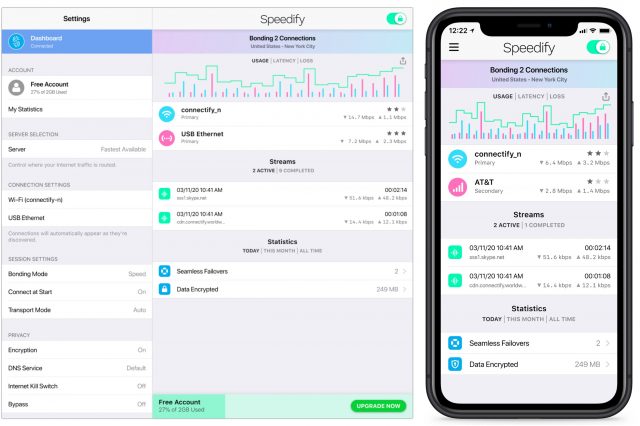

Speedify Bonds Multiple Internet Connections for Speed and Reliability

Are there times when you have various possible Internet connections—Wi-Fi, DSL, satellite, cellular—none of which are particularly fast or reliable? That isn’t a problem I have these days, so I haven’t tested Speedify, but I find it a sufficiently compelling idea that I wanted to mention it briefly.

In essence, Speedify is a software-based VPN that promises to combine multiple Internet sources into a single connection that’s faster, more secure, and more reliable. Channel bonding increases performance, automatic failover boosts reliability, and automatic prioritization of audio and video streams makes it suitable for streaming.

I could imagine Speedify being useful for people who live in rural areas with dodgy DSL, spotty satellite, and sketchy cellular. Even if none of those connections work well all the time, they could be fine together. Then there are those who regularly commute on trains and might benefit from bonding the train Wi-Fi with a cellular connection to participate in video calls while on the move. Other travelers could benefit from supplementing an overwhelmed hotel or conference Internet connection with a cellular connection. With standalone cellular modems—some of these scenarios require additional hardware—you could even bond cellular connections from two different carriers. The upshot is that if you’re willing to pay for multiple accounts, Speedify might be the best way to get faster, more reliable Internet access.

Speedify apps are available for Mac, iOS, Windows, Android, and Linux. There’s a 30-day free trial, after which unlimited data plans start at $4.99 per month for a 3-year commitment. A month-by-month plan costs $14.99 per month, or you can split the difference with a 1-year commitment for $7.49 per month. It works on up to five devices simultaneously, and there are also family plans that offer up to five accounts.

If you give Speedify a try, let us know how you’re using it and how it works for you in the comments!

Try MapQuest for Many-Stop Route Planning

With one of my other hats on, I’m president and chief rabble-rouser for the Finger Lakes Runners Club. Last year, faced with the possibility of more COVID-caused race cancellations, I developed the FLRC Challenge, a series of 10 virtual races around the area in which runners can run courses multiple times, record results with the Webscorer app, and check standings on a dynamic leaderboard. It was a ton of fun to come up with all the rules, coordinate the design work, and put together all the technology to make it happen. It has been a big hit in the community, with 146 runners putting in over 12,000 miles so far this year.

To help promote the FLRC Challenge in the community, I designed and printed lawn signs—those things that sprout like mushrooms every election season. Club members signed up to host them via a simple Google Form that asked for their name and address, and once the signs arrived from Signs.com, I was faced with how best to distribute them. Our area isn’t that large, so Tonya and I decided to spend the afternoon of July 4th driving around and installing them. (It was glorious summer weather, we were driving our all-electric Nissan Leaf, and many of the people who asked for signs are friends, so it was a nice way to spend the day.)

But here’s the question. When faced with needing to visit 43 different addresses, what was the most efficient route? It’s easy enough to enter an address into Apple’s Maps or Google Maps, and even add an extra stop or two. But 43 stops in an optimal route? It’s possible to import a list of addresses into Google’s My Maps, but I could see no way to load a custom map in the Google Maps iPhone app and have it navigate from one spot to another.

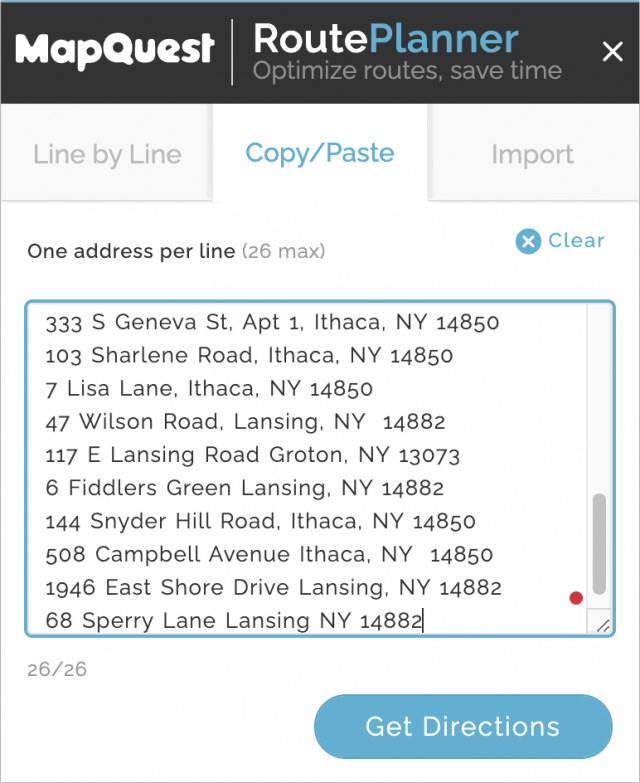

A little research revealed that MapQuest—remember MapQuest from the days when we all printed directions?—offers precisely what I needed. MapQuest’s RoutePlanner lets you enter up to 26 addresses that it can rearrange to give you the shortest route. (Initially, I couldn’t see why it should be limited to 26 addresses, but once I previewed a route, I realized that every address gets a single letter identifying its pin.) Being limited to 26 stops when I had 43 addresses didn’t prove to be a problem since it made more sense to make separate trips to some of the outlying stops—there was no need to do it all at once.

You can enter addresses one at a time, but the tool also lets you paste in a list of addresses, one per line, or import an Excel or CSV file, if you already have the address, city, state, and ZIP code in separate columns. I opted to Command-click the addresses in the Google Sheet created by Google Forms, after which I copied and pasted the entire batch into RoutePlanner.

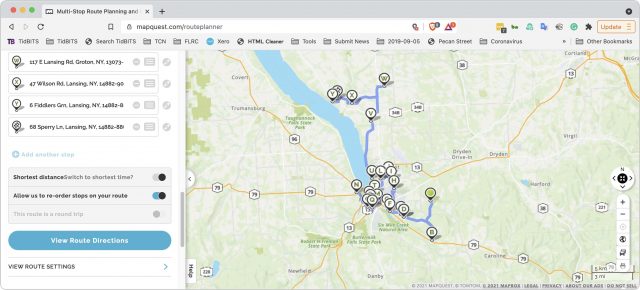

Clicking the Get Directions button switched me to the RoutePlanner’s Line by Line tab, where I scrolled to the bottom (fixing one malformed and thus highlighted address on the way), selected “Allow us to re-order stops on your route,” and clicked View Route Directions to see my route on the map. I then tweaked the order of a couple of stops based on local knowledge.
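
MapQuest doesn’t say how it reorders stops, so the following is only a minimal sketch of one common heuristic for this kind of problem: nearest-neighbor ordering, in which you always drive to the closest stop you haven’t yet visited. The stop labels and coordinates are hypothetical, and the straight-line distances are an assumption made for simplicity; a real route planner would use road distances and likely a better optimizer.

```python
import math


def nearest_neighbor_route(start, stops):
    """Order stops greedily: always go to the closest unvisited stop.

    `start` and each stop are (label, x, y) tuples on a flat plane.
    This is a heuristic, so the result is not guaranteed to be optimal.
    """
    route = [start]
    remaining = list(stops)
    current = start
    while remaining:
        # Pick the unvisited stop with the smallest straight-line distance.
        nxt = min(remaining, key=lambda s: math.dist(current[1:], s[1:]))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    return route


def route_length(route):
    """Total straight-line length of a route, leg by leg."""
    return sum(math.dist(a[1:], b[1:]) for a, b in zip(route, route[1:]))


# Hypothetical stops, listed in the order they were entered.
home = ("Home", 0.0, 0.0)
stops = [("A", 5.0, 0.0), ("B", 1.0, 0.0), ("C", 3.0, 0.0), ("D", 2.0, 0.0)]

route = nearest_neighbor_route(home, stops)
labels = [stop[0] for stop in route]
entered_length = route_length([home] + stops)
reordered_length = route_length(route)
```

With these made-up points, the reordered route is much shorter than visiting the stops in the order entered. As in the real trip, a heuristic like this gives a reasonable starting point that local knowledge can still improve.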

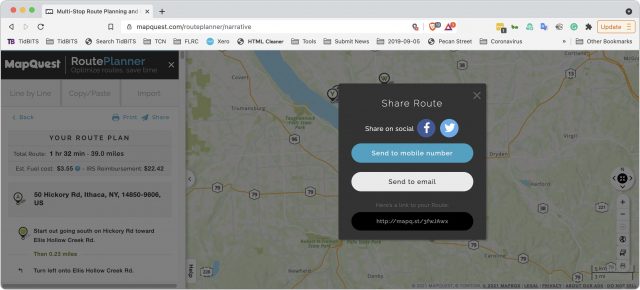

Good enough, but the next question was how to translate that route into something that an iPhone could use to direct us. The answer was to click the Share button at the top. That produced a dialog with a URL and let me text, email, or copy it to my iPhone.

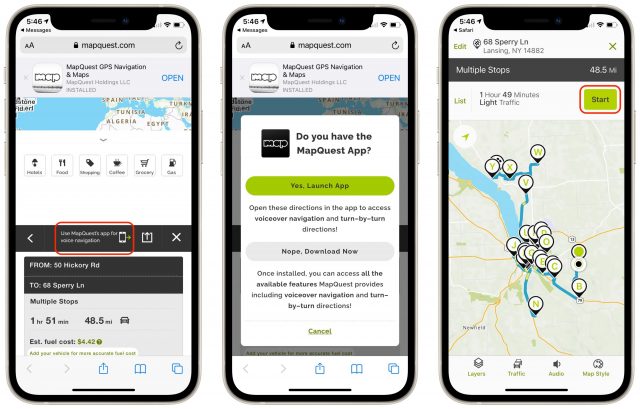

When I loaded that URL in Safari on the iPhone, it provided an option to open in the MapQuest app. I tapped the little iPhone icon to do that and then tapped the Start button.

(To be upfront, the previous two paragraphs took me over a week to write just now, which doesn’t instill confidence in MapQuest. At first, the short URLs that MapQuest generated for sharing the route produced error pages on most loads. I reported the problem to MapQuest and was told they were working on fixing it but never got a confirmation of a fix. However, it seems to work now. Plus, neither the SMS nor email sharing methods worked on recent tries, though I was able to move the URL over to my iPhone in other ways. While troubleshooting the sharing issues, I tried logging in to MapQuest and saving the route to My Maps, but the site could never maintain a login state long enough to do that. Then, in Safari on the iPhone, the black bar with the option to open in the app wouldn’t appear until I force quit Safari and tried again. I had none of these problems last month, and I eventually resolved them this time, but it was frustrating.)

Once you’re actually navigating in the car, the MapQuest app works more or less like Apple’s Maps or Google Maps. It sometimes gets a little confused if you don’t quite make it to a stop, and we once ended up referring to the list view, trying to navigate using local knowledge, and completely missing the stop in question. I’d recommend following its directions carefully and not deviating to the extent possible, since that’s where you’ll confuse the app.

As with “A Solution for Group ‘Best Wishes’ Certificates During the Pandemic” (6 August 2021), this is a solution for a particular problem that most people won’t have, but if you do, I hope you find it useful.

New CSAM Detection Details Emerge Following Craig Federighi Interview

It isn’t often Apple admits it messed up, much less on an announcement, but there was a lot of mea culpa in this interview of Apple software chief Craig Federighi by the Wall Street Journal’s Joanna Stern. The interview followed the company’s first explanations a week ago of how it would work to curb the spread of known child sexual abuse material (CSAM) and separately reduce the potential for minors to be exposed to sexual images in Messages (see “FAQ about Apple’s Expanded Protections for Children,” 7 August 2021).

Apple’s false-footed path started with a confusing page on its website that conflated the two unrelated protections for children. It then released a follow-up FAQ that failed to answer many frequently asked questions, held media briefings that may (or may not?) have added new information, trotted out lower-level executives for interviews, and ended up offering Federighi for the Wall Street Journal interview. After CEO Tim Cook, Federighi is easily Apple’s second-most recognized executive, showing how seriously the company has been forced to work to get its story straight. Following the interview’s release, Apple posted yet another explanatory document, “Security Threat Model Review of Apple’s Child Safety Features.” And Bloomberg reports that Apple has warned staff to be ready for questions. Talk about a PR train wreck.

(Our apologies for the out-of-context nature of the rest of this article if you’re coming in late. There’s just too much to recap, so be sure to read Apple’s previously published materials and our coverage linked above first.)

In the Wall Street Journal interview, Stern extracted substantially more detail from Federighi about what Apple is and isn’t scanning and how CSAM will be recognized and reported. She also interspersed even better clarifications of the two unrelated technologies Apple announced at the same time: CSAM Detection and Communications Safety in Messages.

The primary revelation from Federighi is that Apple built “multiple levels of auditability” into the CSAM detection. He told Stern:

We ship the same software in China with the same database as we ship in America, as we ship in Europe. If someone were to come to Apple [with a request to match other types of content], Apple would say no. But let’s say you aren’t confident. You don’t want to just rely on Apple saying no. You want to be sure that Apple couldn’t get away with it if we said yes. Well, that was the bar we set for ourselves, in releasing this kind of system. There are multiple levels of auditability, and so we’re making sure that you don’t have to trust any one entity, or even any one country, as far as what images are part of this process.

This was the first time that Apple mentioned auditability within the CSAM detection system, much less multiple levels of it. Federighi also revealed that 30 images must be matched during upload to iCloud Photos before Apple can decrypt the matching images through the corresponding “safety vouchers.” Most people probably also didn’t realize that Apple ships the same version of each of its operating systems across every market. But that’s all that was said about auditability in the video interview.

Apple followed the interview with the release of another document, the “Security Threat Model Review of Apple’s Child Safety Features,” which is clearly what Federighi had in mind when referring to multiple levels of auditability. It provides all sorts of new information on both architecture and auditability.

While this latest document better explains the CSAM detection system in general, we suspect that Apple also added some details to the system in response to the firestorm of controversy. Would Apple otherwise have published the necessary information for users—or security researchers—to verify that the on-device database of CSAM hashes was intact? Would there have been any discussion of third-party auditing of the system on Apple’s campus? Regardless, here is the new information that struck us as most important:

- The on-device CSAM hash database is actually generated from the intersection of at least two databases of known illegal CSAM images from child safety organizations not under the jurisdiction of the same government. It initially appeared—and Apple’s comments indicated—that it would use only the National Center for Missing and Exploited Children (NCMEC) database of hashes. Only CSAM image hashes that exist in both databases are included. Even if non-CSAM images were somehow added to the NCMEC CSAM database or other, hitherto unknown CSAM databases, through error or coercion, it’s implausible that all could be exploited in the same way.

- Because Apple distributes the same version of each of its operating systems globally and the encrypted CSAM hash database is bundled rather than being downloaded or updated over the Internet, Apple claims that security researchers will be able to inspect every release. We might speculate that Apple dropped a lawsuit against security firm Corellium over its software (which allows security experts to run virtualized iOS devices for research purposes) to add credibility to its claim of the likelihood of outside inspection.

- Apple says it will publish a Knowledge Base article containing a root hash of the encrypted CSAM hash database included with each version of every Apple operating system that supports the feature. Researchers (and average users) will be able to compare the root hash of the encrypted database present on their device to the expected root hash in the Knowledge Base article. Again, Apple suggests that security researchers will be able to verify this system. We believe this is the case based on how Apple uses cryptography to protect its operating systems against modification.

- This approach of hashing the database also enables third-party auditing. Apple says it can, in a secure on-campus environment, provide an auditor with technical proof that the intersection of hashes and blinding were performed correctly. The suggestion is that participating child safety organizations might wish to perform such an audit.

- NeuralHash doesn’t rely on machine-learning classification, the way Photos can identify pictures of cats, for instance. Instead, NeuralHash is purely an algorithm designed to validate that one image is the same as another, even if one of the images has been altered in certain ways, like resizing, cropping, and recoloring. In Apple’s tests against 100 million non-CSAM images, it encountered 3 false positives when compared against NCMEC’s database. In a separate test of 500,000 adult pornography images matched against NCMEC’s database, it found no false positives.

- As revealed in the interview, Apple’s initial match threshold is expected to be 30 images. That means that someone would have to have at least that many images of known illegal CSAM being uploaded to iCloud Photos before Apple’s system would even know any matches took place. At that point, human reviewers would be notified to review the low-resolution previews bundled with the matches that can be decrypted since the threshold was exceeded. That threshold should ensure that even an extremely unlikely false positive has no ill effect.

- Since Apple’s reviewers aren’t legally allowed to view the original databases of known CSAM, all they can do is confirm that decrypted preview images appear to be CSAM, not that they match known CSAM. (One expects the images to be detailed enough to recognize human nudity without identifying individuals.) If a reviewer thinks the images are CSAM, Apple suspends the account and hands the entire matter off to NCMEC, which performs the actual comparison and can bring in law enforcement.

- Throughout the document, Apple repeatedly uses the phrase “is subject to code inspection by security researchers like all other iOS device-side security claims.” That’s not auditing per se, but it indicates that Apple knows that security researchers try to confirm its security claims and is encouraging them to dig into these particular areas. It would be a huge reputational and financial win for a researcher to identify a vulnerability in the CSAM detection system, so Apple is likely correct in suggesting that its operating system releases will be subject to even more scrutiny than before.
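
To make three of the points above concrete (the database intersection, the published root hash, and the 30-image threshold), here is a deliberately simplified sketch. It is not Apple’s implementation: plain strings stand in for NeuralHash values, a SHA-256 digest over the sorted entries is a hypothetical stand-in for whatever root hash Apple publishes, and the blinding and safety-voucher encryption are omitted entirely. All function and variable names are invented for illustration.

```python
import hashlib

MATCH_THRESHOLD = 30  # initial threshold Apple has stated


def build_on_device_database(org_a_hashes, org_b_hashes):
    """Keep only hashes that appear in BOTH organizations' databases."""
    return set(org_a_hashes) & set(org_b_hashes)


def root_hash(database):
    """Hypothetical root hash: SHA-256 over the sorted entries."""
    digest = hashlib.sha256()
    for entry in sorted(database):
        digest.update(entry.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def threshold_reached(upload_hashes, database, threshold=MATCH_THRESHOLD):
    """True only once at least `threshold` uploads match the database."""
    matches = sum(1 for h in upload_hashes if h in database)
    return matches >= threshold


# Two made-up databases from organizations under different governments.
org_a = {f"h{i}" for i in range(0, 50)} | {"planted-by-coercion"}
org_b = {f"h{i}" for i in range(10, 60)}

database = build_on_device_database(org_a, org_b)
planted_survives = "planted-by-coercion" in database

# A user compares the hash of the shipped database with the published one.
published = root_hash(database)
intact = root_hash(set(database)) == published
tampered = root_hash(database | {"planted-by-coercion"}) == published

# 29 matching uploads stay below the threshold; 30 reach it.
below = threshold_reached([f"h{i}" for i in range(10, 39)] + ["vacation"], database)
at = threshold_reached([f"h{i}" for i in range(10, 40)], database)
```

In this toy version, an entry present in only one organization’s database never reaches the device, any change to the shipped database changes its root hash, and nothing is flagged for review until the thirtieth match.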

I’m perplexed by just how thoroughly Apple botched this announcement. The initial materials raised too many questions that lacked satisfactory answers for both technical and non-technical users, even after multiple subsequent interviews and documents. Apple seems to have felt that we’d pat the company on the back and thank it for being so transparent merely because it said anything at all. After all, other cloud-based photo storage providers are already scanning all uploaded photos for CSAM without telling their users; Facebook filed over 20 million CSAM reports with NCMEC in 2020 alone.

But Apple badly underestimated the extent to which implying “Trust us” didn’t mesh with “What happens on your iPhone, stays on your iPhone.” It now appears that Apple is asking us instead to “Trust, but verify” (a phrase with a fascinating history originating as a paraphrase of Vladimir Lenin and Joseph Stalin before being popularized in English by Ronald Reagan). We’ll see how security and privacy experts respond to these new revelations, but at least Apple now seems to be trying harder to share all the relevant details.