#1713: Apple Intelligence security and privacy, Slack for iPhone posting problem, In Your Face persistent notifications, Apple longevity white paper

Curious about the security, privacy, and safety issues surrounding Apple Intelligence? TidBITS security editor and cloud computing expert Rich Mogull explains how Apple Intelligence achieves industry-leading security, privacy, and safety for AI. Adam Engst looks at In Your Face, a Mac app that provides event and task notifications you can’t ignore. He also shares the solution to a problem that prevents Slack users from posting from the iPhone version. Finally, we link to Apple’s latest white paper, which provides an illuminating look at how the company thinks about device longevity. Notable Mac app releases this week include Fantastical 3.8.19 and Cardhop 2.2.18, Final Cut Pro 10.8, Compressor 4.8, and Motion 5.8, Lightroom Classic 13.4, OmniFocus 4.3.1, and Quicken 7.8.

Fixing Slack for iPhone’s “Only Some People Can Post” Error

Over the past week, I’ve encountered a problem with Slack on my iPhone that blocks me from posting. Instead, Slack shows “Only some people can post.” at the bottom, where I would normally enter a message (below left). It wasn’t a simple permissions problem—I’m an administrator and the owner of the channels in question.

The first time it happened, I just switched to my Mac to post, which indicated that it was a client app issue, not something associated with my account. For another day or two, I didn’t need to post anything to Slack from my iPhone, but the next time I did, the restriction annoyed me enough that I force-quit Slack in irritation (below right). To my relief, that simple action solved the issue because I had no other ideas about how to regain the posting privileges a user of my level should enjoy.

Nevertheless, I attributed the problem to cosmic rays and moved on with my life. A few days later, however, Glenn Fleishman said he had run into the same issue, and the next day, the problem recurred on my iPhone. Clearly, the problem wasn’t just a one-off occurrence. If you run into the problem, force-quit Slack by swiping up on it in the App Switcher.

Remember that force-quitting iPhone and iPad apps is helpful only for resetting badly behaved or frozen apps—it uses more memory and battery power than letting iOS manage the apps automatically (see “Why You Shouldn’t Make a Habit of Force-Quitting iOS Apps or Restarting iOS Devices,” 21 May 2020).

In Your Face Provides Persistent Notifications for Events and Tasks

In “A Call to Alarms: Why We Need Persistent Calendar and Reminder Notifications” (11 May 2023), I argued that Apple’s default approach to notifying users about upcoming events in Calendar and tasks in Reminders was insufficient. It’s all too easy to miss or ignore a notification and fail to show up or complete a task on time.

The problem is right there in the name—Apple has given us notifications, but we need alarms. Banner notifications show only briefly before disappearing, and alert notifications remain until you dismiss them, but nothing forces you to dismiss them. In contrast, alarms you set in the Clock app play until you stop them—they command your attention and require interaction.

Since that article, I’ve discovered two apps that ensure you don’t miss calendar events and timed reminders. While they improve on what Apple has provided, neither completely solves the problem, so I’ll continue to agitate for Apple to add alarms as a third notification alongside banners and alerts. In this article, I’ll look at the Mac app In Your Face from Blue Banana Software; in a future piece, I’ll examine the cross-platform app Due.

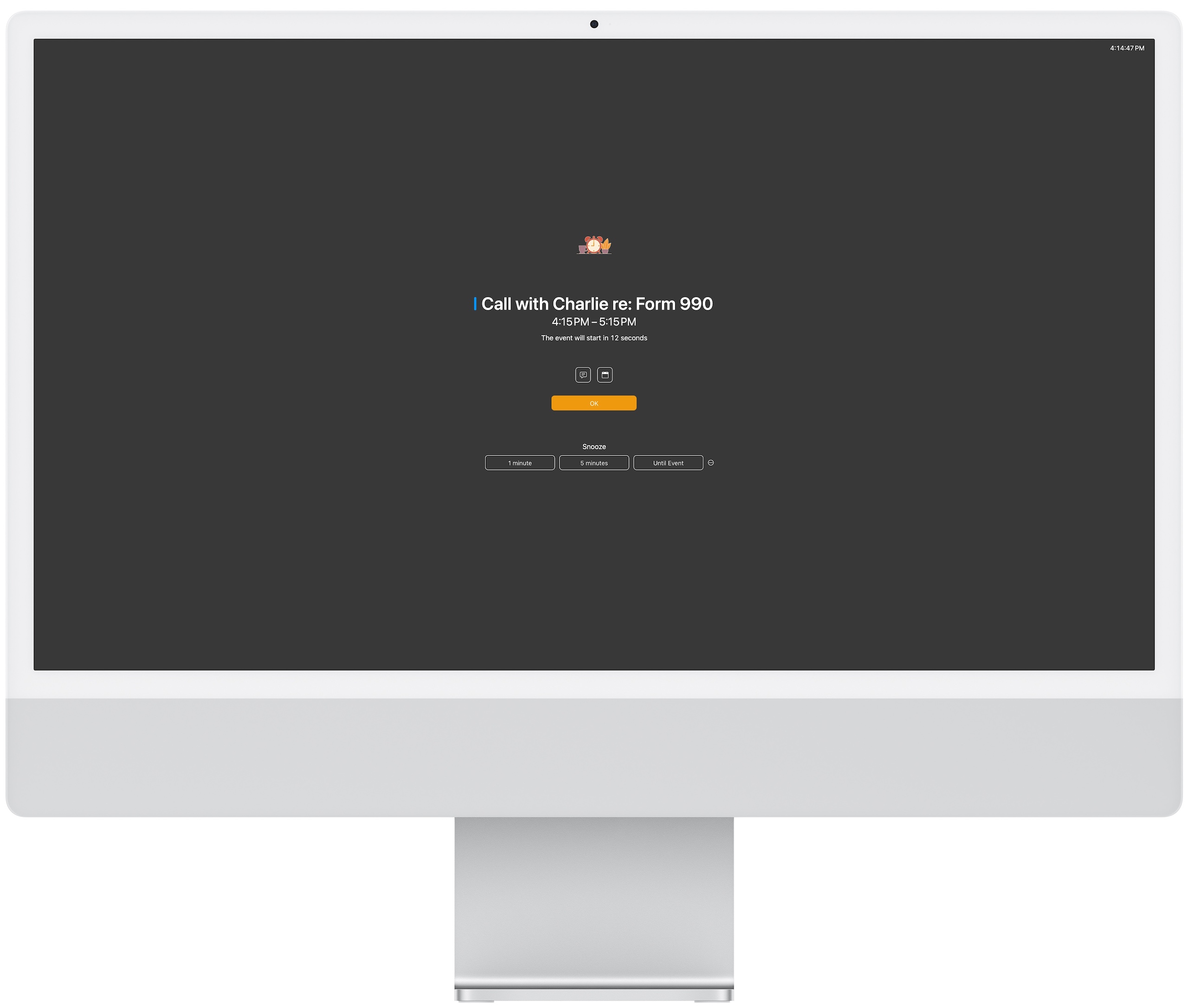

In Your Face Gets In Your Face

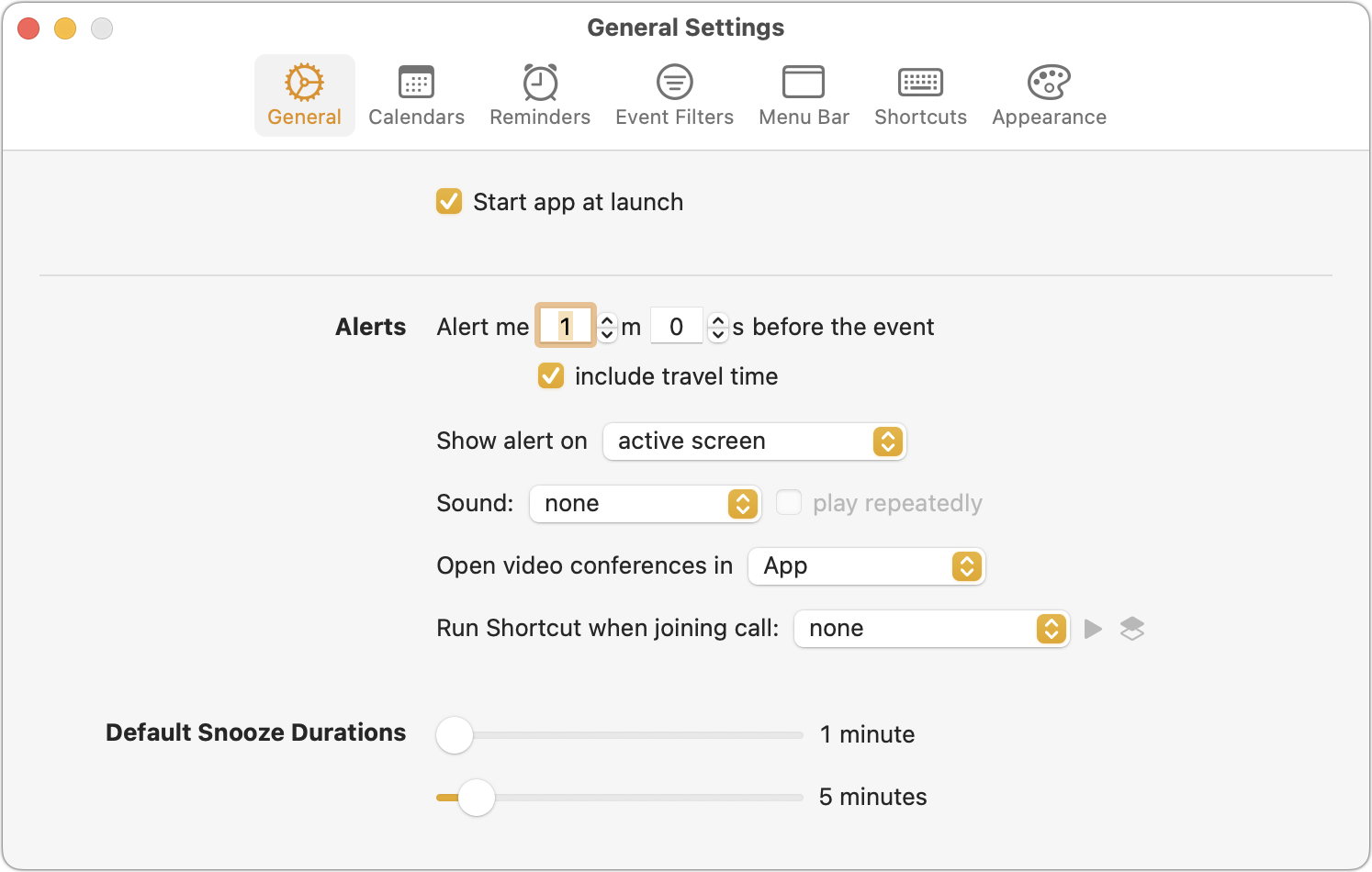

In Your Face ensures you don’t miss timed events or reminders by displaying the mother of all modal dialogs. We’re talking about a themed full-screen alert that takes over the entire screen and requires that you click the OK button to dismiss it and return to using your Mac. Buttons at the bottom of the alert also let you snooze the alert for prespecified amounts of time or until the event starts. I seldom like snoozing, but in this case, you could have In Your Face alert you 15 minutes before meetings and then use snooze to display another alert 14 minutes later to ensure you’re aware of what’s coming up but don’t become distracted in the intervening time.

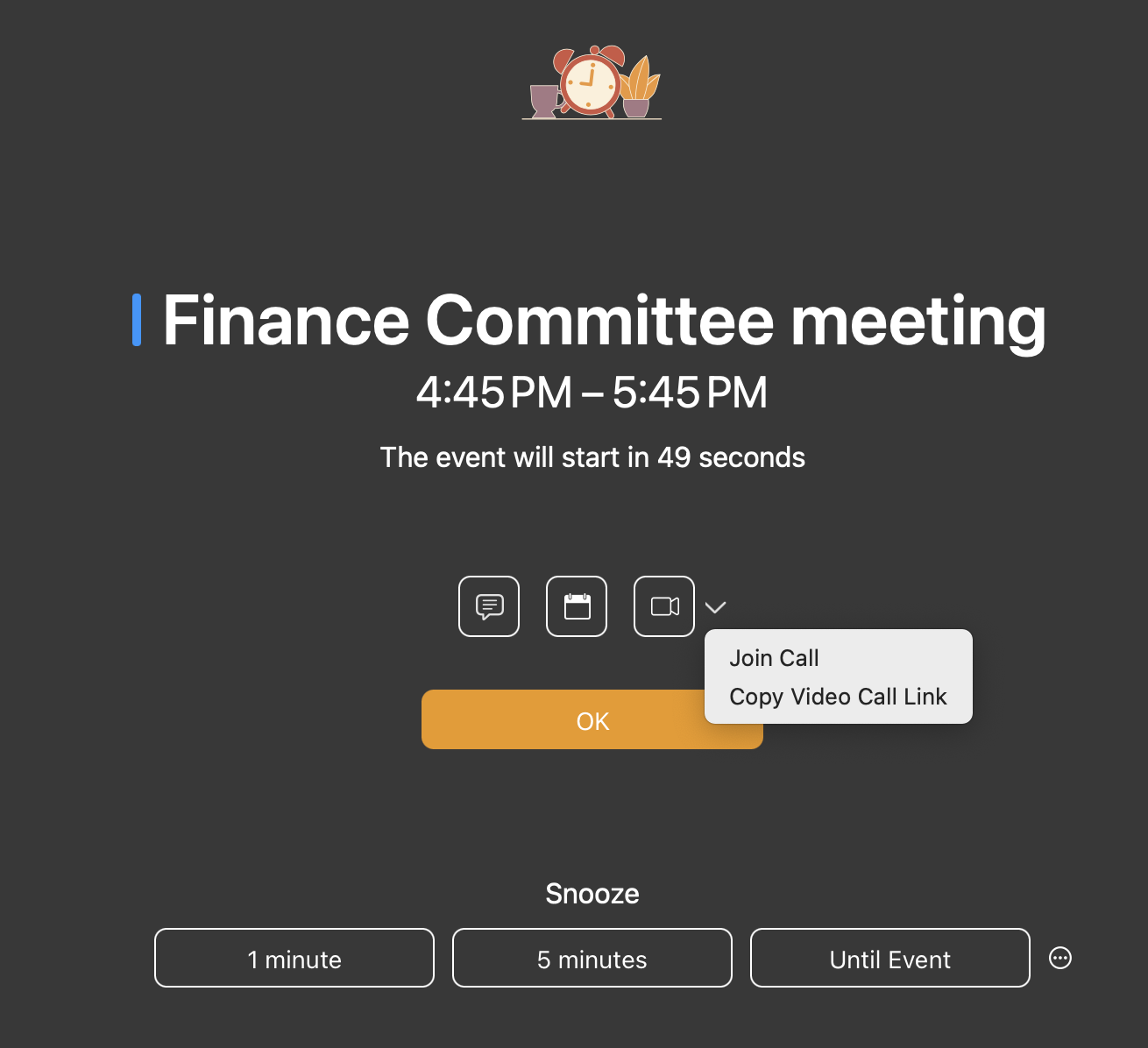

Icons above the OK button let you access notes for the event and open the event in your default calendar app. When an event contains a video call link, In Your Face makes it easy to join. On the alert screen, you can click the video camera icon, press J, or use the pop-up menu. After the event has started, you can also join the call by clicking the video camera icon for the event in In Your Face’s menu bar list or using a user-defined keyboard shortcut.

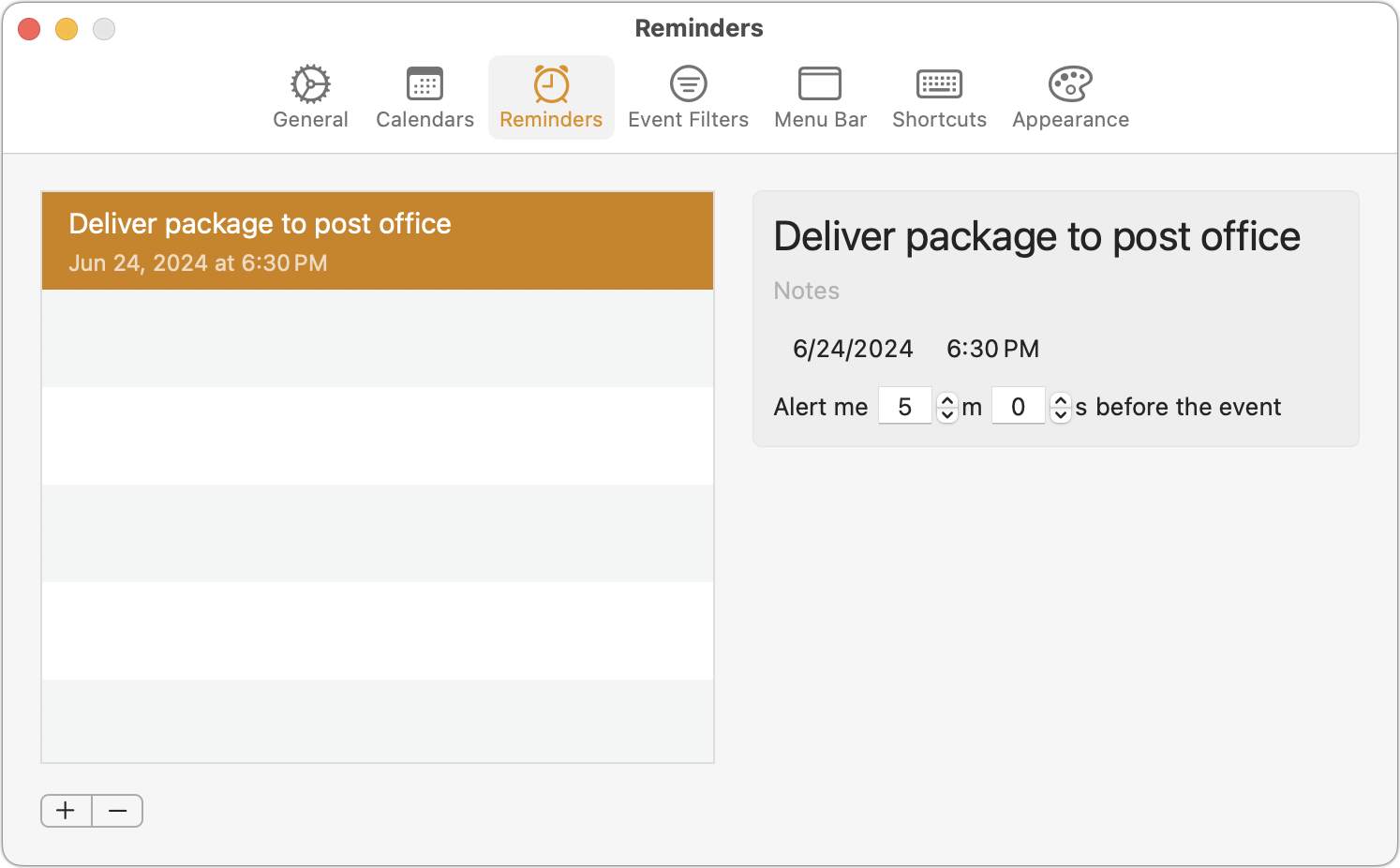

Configuring In Your Face

In Your Face’s copious settings let you specify which calendars and Reminders lists to watch, which is handy because it gets annoying fast when it interrupts you for non-essential events and reminders. I turned it off for all my reminders because the times I assign to them exist mainly to cause them to display a notification; I seldom need to accomplish the task at that exact time. If I did have tasks like that, I’d create a special list for them that In Your Face could watch.

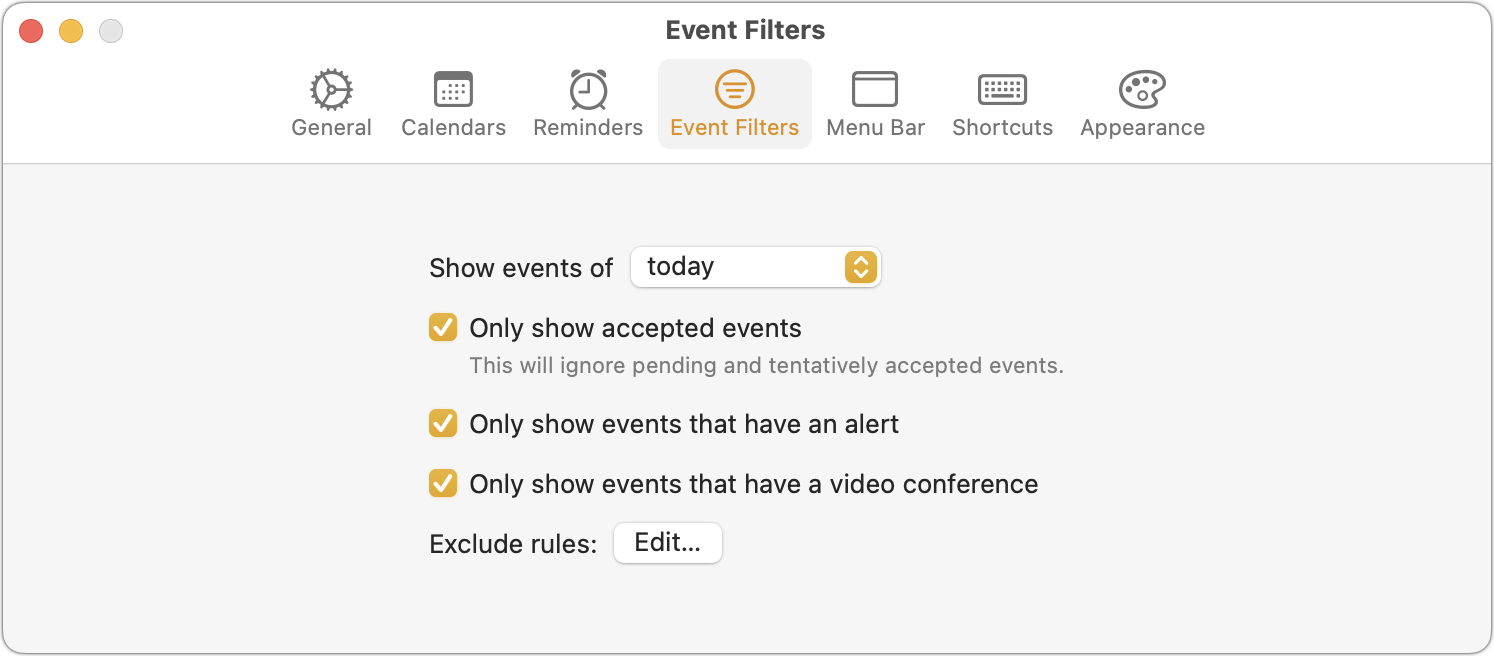

If calendar/list-level control isn’t sufficiently granular for you, In Your Face lets you create Event Filters that limit alerts to accepted events, events with an alert, and events that contain a link to a video conference. Still not enough? You can also create exclusion rules to avoid showing alerts for events with text in the title, notes, or location. You want more? The exclusion rules even support pattern matching. If you work in a multi-floor building and only want alerts for events on other floors, you could build a pattern that matches appropriate room numbers.

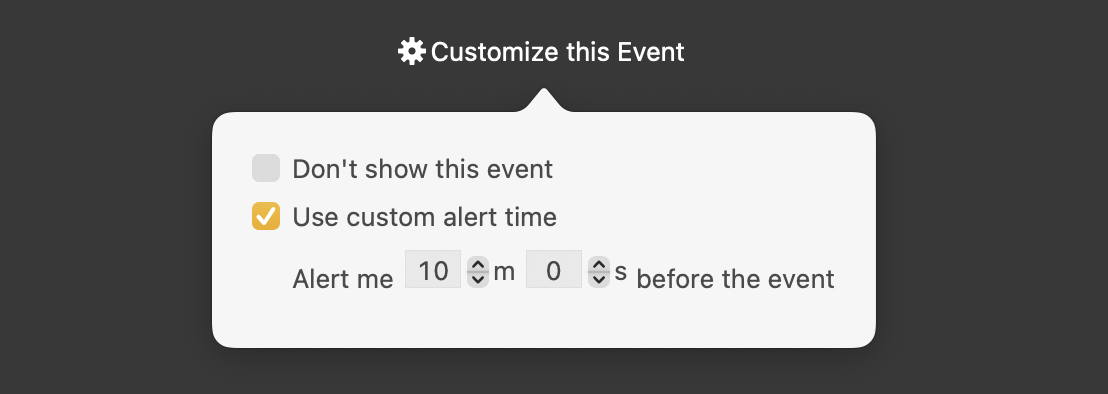

You can also set how long before an event you’re alerted, which of multiple screens should show the alert, what sound to play along with the alert, and the default snooze durations. In Your Face is even smart enough to include travel time if specified.

Additional In Your Face Features

Although In Your Face has one core function, developer Martin Hoeller has added a few additional features that some may appreciate:

- In Your Face displays your upcoming events in a menu bar list that also provides access to the settings window and lets you pause all alerts for a specified amount of time (essential if you give public presentations). Optionally, the menu bar item can change color to indicate that alerts are paused. Choosing an event from the list previews its full-screen alert and lets you customize it, either skipping its alert or giving it a different alert time.

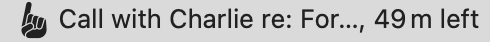

- The menu bar item can also display the current and next events, with a countdown timer showing the time remaining in the event or before it occurs.

- If you want reminders for tasks that aren’t on your calendar, In Your Face lets you create your own, with a title, notes, date and time, and alert time. This feature seems to exist mainly for those who don’t want to use Reminders.

I don’t use any of these additional features. For calendaring, I rely on Fantastical, which also provides a menu bar list of upcoming events and can display the current event in the menu bar. I also use Reminders heavily, so it’s easier for me to add tasks to Reminders using Siri on my iPhone, Apple Watch, or HomePod than it is to use In Your Face’s custom reminders.

Nevertheless, I appreciate In Your Face’s core capability and haven’t missed an event since I installed it. It may essentially be a one-trick pony, but it’s a good trick! In Your Face is available from the Mac App Store for $1.99 per month or $19.99 per year, and it’s also available in Setapp. For those allergic to subscriptions, a lifetime license that works on up to three Macs is available for $59.99.

How Apple Intelligence Sets a New Bar for AI Security, Privacy, and Safety

Everyone expected Apple’s announcement of Apple Intelligence, but the details on security, privacy, and safety still came as a (welcome) surprise to a security community already accustomed to Apple’s strong baselines. Apple deftly managed to navigate a series of challenges with innovations that extend from the iPhone to the cloud, exceeding anything we’ve seen elsewhere.

I’ve spent over a decade in cloud security, even longer working in cybersecurity overall, and I’m thoroughly impressed. The design of Apple Intelligence and the Private Cloud Compute system backing it sets a new standard for the AI industry by leveraging a range of Apple’s security and privacy investments over the past decade.

To understand why this is so important and how Apple pulled it off (assuming everything works as documented), we need to start with a quick overview of “this” kind of AI, the risks it creates, and how Apple plans to address those risks.

What kind of AI is Apple Intelligence, and how is it different from Siri?

There are many kinds of artificial intelligence, all of which use mathematical models to solve problems based on learning, such as recognizing patterns (please, AI researchers, don’t hurt me for the simplification). Your iPhones and Macs already rely on AI for numerous features like Siri voice recognition, identifying faces in Photos, and image enhancement for iPhone photos. In the past, Apple described these features as powered by “machine learning,” but the company now calls them “AI.”

By now, you’ve undoubtedly heard of ChatGPT, a generative AI chatbot. Generative AI algorithms create new content on request, including text, images, and more. Unlike Siri, which is largely limited to canned responses, a generative AI chatbot can handle a much broader range of requests. Ask Siri to tell you a story, and it might pull one from a database. Ask ChatGPT to tell you a story, and it will write a new one on the spot.

Open AI’s ChatGPT, Anthropic’s Claude, and Google Gemini are all examples of a Generative Pre-trained Transformer (GPT, get it?). In fact, GPTs are a subset of Large Language Models (LLMs) pre-trained on massive volumes of text (large chunks of the Internet), which use that data to create new answers by predicting which words should come next in response to a prompt. LLMs are for text, but other flavors of generative AI create images, audio, and even video (all of which can be abused for deepfakes). Generative AI is extremely impressive but requires massive computing power and often fails spectacularly. It also creates new security problems and privacy concerns, and suffers from inherent safety issues.

Apple’s first foray into generative AI comes under the Apple Intelligence umbrella. Apple is working to prioritize security, privacy, and safety in ways that weren’t necessary with its previous AI features.

What are the differences between AI security, privacy, and safety?

Although these terms are related and rely on each other to some degree, each has its own domain:

- Security involves preventing an adversary from doing something they shouldn’t with the AI system. For example, an attack known as prompt injection tries to trick the model into revealing or doing something inappropriate, such as revealing another user’s private data.

- Privacy ensures that your data remains under your control and can’t be viewed or used by anyone without your permission, including the AI provider. Your questions to AI should remain private and unreadable by others.

- Safety means the AI should never return harmful responses or take harmful actions. An AI should not tell you how to harm yourself, create a biological weapon, or rob a bank.

Security is the foundation on which privacy and safety are built; if the system is insecure, we cannot guarantee privacy or safety.

As with any online service, privacy is a choice; providers choose which privacy options to offer, and consumers choose whether and how to use a service. Many consumer AI providers will, by default, use your prompts (the questions you ask the AI) to improve their models. This means anything you enter could be used, probably piecemeal, in someone else’s answer. On the plus side, most let you opt out of having your prompts used for training and offer options to delete your data and history.

Most AI providers work hard to ensure safety, but like social networks, they use different definitions and have different tolerances for what they consider acceptable. Inevitably, some people do not agree.

How does generative AI work?

AI is incredibly complex, but for our purposes, we can simplify and focus on three core components and a couple of additional options. These are combined to produce a model:

- Hardware to run the AI model: Although models can run on regular CPUs, they benefit from specialized chips designed to run special kinds of software common in AI. These are mostly GPGPUs (General-Purpose Graphics Processing Units) derived from graphics chips, largely from stock market darling Nvidia, or dedicated AI chips like Apple’s Neural Engine and Google’s Tensor Processing Units.

- AI software/algorithms: These are the brains of the models and consist of multiple components. Most current models use neural networks, which emulate how a biological neuron (brain cell) works and communicates with other neurons.

- Training data: All generative AI models need a corpus of knowledge from which to learn. Current consumer models like ChatGPT, Claude, and Gemini were trained by scraping the Web, much like search engines scrape the Web to build indices. This is controversial, and there are lawsuits in progress.

As you might imagine, a bigger brain composed of a greater number of more efficiently connected neurons trained on a larger dataset generally provides better results. When a company builds a massive model designed for general use, we call it a foundation model. Foundation models can be integrated into many different situations and enhanced for specific use cases, such as writing program code.

Two main optional components are used to enhance foundation models:

- Fine-tuning data adapts a pre-trained model to provide personalized results. For example, you can fine-tune a foundation model that understands human language with samples of your own writing to emulate your personal style. Fine-tuning a model enhances training data with more specific data.

- Retrieval Augmented Generation (RAG) enhances output by incorporating external data that isn’t in the pre-trained or fine-tuned data. Why use RAG instead of fine-tuning? Fine-tuning retrains the model, which requires more time and processing power. RAG is better for enhancing results with up-to-date data. Depending on the model size and volume of fine-tuning data, it may take weeks or longer to update a model.

Here’s how it all fits together, using the example of integrating AI into a help system. The foundation model AI developers produce a new LLM that they load onto a massive compute cluster and then train with a massive data set. The result is something like ChatGPT, which “understands” and writes in the languages it was trained on. In response to a prompt, it decides what words to put in what order, based on all that learning and the statistical probabilities of how different words are associated and connected.

A customer then customizes the foundation model by adding its own fine-tuning data, such as documentation for its software platforms, and integrating the LLM into its help system. The foundation model understands language, and the fine-tuning provides specific details about those platforms. If things change a lot, the developers can use RAG to have the tuned model retrieve the latest documentation and augment its results without having to retrain and retune.

When it works, it feels like magic. The problem is that LLMs (and other generative AI, including image generators) are prone to failure. They understand statistically likely associations but not necessarily… reality. I once asked ChatGPT for a list of PG-13 comedies for family movie night. Half the results were rated R (including the one we picked, so maybe it wasn’t so wrong, after all). These kinds of mistakes are commonly called “hallucinations,” and it is widely believed that they can never be totally eliminated. Some have suggested that “confabulations” may be a better term, since “hallucination” has connotations of wild fancy, whereas “confabulation” is more about fabrication without any intent to deceive.

How does generative AI affect security, privacy, and safety?

The sheer complexity of generative AI creates a wide range of new security issues. Rather than trying to cover them all, let’s focus on how they could affect Apple’s provision of AI services to iPhone users. The crux of the problem is that for Apple Intelligence to be any good, it will need to run at least partially in the cloud to have enough hardware muscle. Here are some challenges Apple faces:

- To provide personal results, the AI models need access to personal data Apple would rather not collect.

- You can only do so much on a single device. Foundation models usually run in the cloud due to massive processing requirements. So, personalization requires processing personal data in the cloud.

- One risk of AI is that an attacker could trick a model into revealing data it shouldn’t. That could be personal user data (like your prompts) or safety violations (like information about the most effective way to bury a body in the desert, although this is considered common knowledge here in Phoenix).

- Anything running in the cloud is open to external attack. A security incident in the cloud could result in a privacy breach that reveals customer data.

- Apple is big, popular, and targeted by the most sophisticated cyberattacks known to humankind. Evildoers and governments would love access to a billion users’ personal questions and email summaries.

These challenges are highly complex. Most major foundation models are decently secure, but they have access to all customer prompts. The problems are thornier for Apple because iPhones, iPads, and Macs are so personal and thus have access to private information locally and in iCloud. No one is asking Apple to create another generic AI chatbot to replace ChatGPT—people want an Apple AI that understands them and provides personal results using the information on their iPhones and in iCloud.

Apple’s challenge is to leverage the power of generative AI securely, using the most personal of personal data, while keeping it private even from intimates, criminals, and governments.

How does Apple Intelligence manage security and privacy?

Apple’s approach leverages its complete control of the hardware and software stacks on our devices. Apple Intelligence first tries to process an AI prompt on the local system (your iPhone, iPad, or Mac) using Neural Engine cores built into the A17 Pro or M-series chip. When a task requires more processing power, Apple Intelligence sends the request to a system called Private Cloud Compute, which runs Apple’s foundation AI models in the cloud.

Rather than relying on public foundation models, Apple built its own foundation models and runs them on its own cloud service, powered by Apple silicon chips, using many of the same security capabilities that protect our personal Apple devices. Apple then enhanced those capabilities with additional protections to ensure no one can access customer data—including malicious Apple employees, possible plants in Apple’s physical or digital supply chain, and government spies.

It’s an astounding act of security and privacy engineering. I’m not prone to superlatives—security is complex, and there are always weaknesses for adversaries to exploit—but this is one of the very few situations in my career where I think superlatives are justified.

While Apple hasn’t revealed all the details of how Apple Intelligence will work, it has published an overview of its foundation models, an overview of the security of Private Cloud Compute, and information on the Apple Intelligence features. Combining that information with the company’s previously published Platform Security Guide, which details how Apple security works across devices and services, we can understand the fundamentals of how Apple plans to secure Apple Intelligence.

What foundation models does Apple use, and do they include customer data?

Apple created its foundation models using the Apple AXLearn framework, which it released as an open source project in 2023. Keep in mind that a model is the result of various software algorithms trained on a corpus of data.

Apple does not use customer data in training, but it does use licensed data and Internet data collected using a tool called AppleBot, which crawls the Web. Although AppleBot isn’t new, few people have paid it much attention before now. Because personal data from the Internet shows up in training data, Apple attempts to filter out such details.

Like other creators of foundation models, Apple needs massive volumes of text to train the capabilities of its models—thus the requirement for a Web crawler. Web scraping is contentious because these tools scoop up intellectual property without permission for integration into models and search indices. I once asked ChatGPT a question on cloud security, an area in which I’ve published extensively, and the result looked very close to what I’ve written in the past. Do I know for sure that it was copying me? No, but I do know that ChatGPT’s crawler scraped my content.

Apple now says it’s possible to exclude your website from AppleBot’s crawling, but only going forward. Apple has said nothing about any way to remove content from its existing foundation models, which were trained before the exclusion rules were public. While that’s not a good look for the company, it would likely require retraining the model on the cleaned data set, which is certainly a possibility.

Apple also filters for profanity and low-value content; although we don’t know for sure, it likely also filters out harmful content to the extent possible. During training, the models also integrate human feedback, and Apple performs adversarial testing to address security issues (including prompt injection) and safety issues (such as harmful content).

Was the foundation model fine-tuned with my data?

Apple fine-tunes adapters rather than the base pre-trained model. Adapters are fine-tuned layers for different tasks, like summarizing email messages. Returning to my general description of generative AI, Apple fine-tunes a smaller adapter instead of fine-tuning the entire model—just as my example company fine-tuned its help system on product documentation.

Apple then swaps in an appropriate adapter on the fly, based on which task the user is attempting. This appears to be an elegant way of optimizing for both different use cases and the limited resources of a local device.

Based on Apple’s documentation, fine-tuning does not appear to use personal data—especially since the fine-tuned adapters undergo testing and optimization before they are released, which wouldn’t be possible if they were trained on individual data. Apple also uses different foundation models on-device and in the cloud, only sending the required personal semantic data to the cloud for each request, which again suggests that Apple is not fine-tuning with our data.

How does Apple Intelligence work securely on our devices?

In some ways, maintaining security on our devices is the easiest part of the problem for Apple, thanks to over a decade of work on building secure devices. Apple needs to solve two broad problems on-device:

- Handling data and making it available to the AI models

- Communicating with the cloud service when required

As mentioned, Apple Intelligence will first see if it can process a request on-device. It will then load the appropriate adapter. If the task requires access to your personal data, that’s handled on-device, using a semantic index similar to Spotlight’s. While Apple has not specified how this happens, I suspect it uses RAG to retrieve the necessary data from the index. This work is handled using different components of Apple silicon, most notably the Neural Engine. That’s why Apple Intelligence doesn’t work on all devices: it needs a sufficiently powerful Neural Engine and enough memory.

At this point, extensive hardware security is in play, well beyond what I can cover in this article. Apple leverages multiple layers of encryption, secure memory, and secure communications on the A-series and M-series chips to ensure that only approved applications can talk to each other, data is kept secure, and no process can be compromised to break the entire system.

App data is not indexed by default, so Apple can’t see your banking information. All apps on iOS are compartmentalized using different encryption keys, and an app’s developer needs to “publish” their data into the index. This data includes intents, so an app can publish not only information but also actions, which Apple Intelligence can make available to Siri. Developers can also publish semantic information (for example, defining what a travel itinerary is) for their apps.

In other words, your app data isn’t included in Apple Intelligence unless the developer allows it and builds for it. I also suspect users will be able to disable inclusion since that capability already exists for Siri and Spotlight in Settings > Siri & Search on the iPhone and iPad and System Settings > Siri & Spotlight > Siri Suggestions & Privacy on the Mac.

The existing on-device security also restricts what information an app can see, even if a Siri request combines your personal data with app data. Siri will only provide protected data to an app as part of a Siri request if that app is already allowed access to that protected data (such as when you let a messaging app access Contacts). I expect this to remain true for Apple Intelligence, preventing something that security professionals call the confused deputy problem. This design should prevent a malicious app from tricking the operating system into providing private data from another app.

How does Apple Intelligence decide whether a request should go to the cloud?

Apple has not detailed this process yet, but we can make some inferences.

As I mentioned, we call a request to most forms of generative AI a prompt, such as “proofread this document.” First, the AI converts the prompt into tokens. A token is a chunk of text an AI uses for processing. One measure of the power of an LLM is the number of tokens it can process. The vocabulary of a model is all the tokens it can recognize.

In my proofreading example above, the number of tokens is based on the size of the request and the size of the data (the document) provided in the request. In its model overview post, Apple said the vocabulary is 49,000 tokens on devices and 100,000 in the cloud. An iPhone 15 Pro can generate responses at a rate of 30 tokens per second.

I think Apple uses at least two criteria to decide whether to send a request to the cloud:

- Prompts with more than a certain number of tokens

- Prompts for certain kinds of information that need a larger vocabulary, more memory, or processing power

What happens when a request is sent to the cloud?

Here is where Apple outdid itself with its security model. The company needed a mechanism to send the prompt to the cloud securely while maintaining user privacy. The system must then process those prompts—which include sensitive personal data—without Apple or anyone else gaining access to that data. Finally, the system must assure the world that the prior two steps are verifiably true. Instead of simply asking us to trust it, Apple built multiple mechanisms so your device knows whether it can trust the cloud, and the world knows whether it can trust Apple.

How does Apple handle all these challenges? Using its new Private Cloud Compute: a custom-built hardware and software stack for securely and privately hosting LLMs with verifiable trust.

From the Private Cloud Compute announcement post:

We designed Private Cloud Compute to make several guarantees about the way it handles user data:

- A user’s device sends data to PCC for the sole, exclusive purpose of fulfilling the user’s inference request. PCC uses that data only to perform the operations requested by the user.

- User data stays on the PCC nodes that are processing the request only until the response is returned. PCC deletes the user’s data after fulfilling the request, and no user data is retained in any form after the response is returned.

- User data is never available to Apple—even to staff with administrative access to the production service or hardware.

At a high level, Private Cloud Compute falls into a family of capabilities we call confidential computing. Confidential computing assigns specific hardware to a task, and that hardware is hardened to prevent attacks or snooping by anyone with physical access. Apple’s threat model includes someone with physical access to the hardware and highly sophisticated skills—about the hardest scenario to defend against. Another example is Amazon Web Service’s Nitro architecture.

Let’s break Private Cloud Compute into bite-sized elements—it’s pretty complex, even for a lifelong security professional with experience in cloud and confidential computing like me.

What data is sent to the cloud?

The prompt, the desired AI model, and any supporting inferencing data. I believe this would include contact or app data not included in the prompt typed or spoken by the user.

How does my device know where to send the request and ensure it’s secure and private?

The core unit of Private Cloud Compute (PCC) is a node. Apple has not specified whether a node is a collection of servers or a collection of processors on a single server, but that’s largely irrelevant from a security perspective. PCC nodes use an unspecified Apple silicon processor with the same Secure Enclave as other Apple devices. The Secure Enclave handles encryption and manages encryption keys outside the CPU. Think of it as a highly secure vault, with a little processing capability available solely for security operations.

Each node has its own digital certificate, which includes the node’s public key and some standard metadata, such as when the certificate expires. The private key that pairs with the public key is stored in the Secure Enclave on the node’s server. Any data encrypted with a public key can only be decrypted with the matching private key. This is public key cryptography, which is used basically everywhere.

Client software on the user’s device first contacts the PCC load balancer with some simple metadata, which enables the request to be routed to a suitable node for the needed model. The load balancer returns a list of nodes ready to process the user’s request. The user’s device then encrypts the request with the public keys of the selected nodes, which are now the only hardware capable of reading the data.

Remember, thanks to the Secure Enclave, there should be no way to extract the private keys of the nodes (a problem with software-only encryption systems), and thus, there should be no way to read the request outside those servers. The load balancer itself can’t read the requests—it just routes them to the right nodes. Even if an attacker compromised the load balancer and steered traffic to different hardware, that hardware still couldn’t read the request because it would lack the decryption keys.

Can someone track which request is mine or steer my request to a specified node?

No, and this is a very cool feature. In short, Apple can’t see your IP address or device data because it uses a third-party relay that strips such information. However, that third party also can’t pretend to be Apple or decrypt data.

The initial request metadata sent to the load balancer to get the list of nodes contains no identifying information. It essentially says, “I need a model for proofreading my document.” This request does not go directly to Apple—instead, it’s routed through a third-party relay to strip the IP address and other identifying information.

So, Apple can’t track a request back to a device, which prevents an attacker from doing the same unless they can compromise both Apple and the relay service. Should an attacker actually compromise a node and want to send a specific target to it, Apple further defends against steering by performing statistical analysis of load balancers to detect any irregularities in where requests are sent.

Finally, Apple says nothing about this in its documentation, but we can infer that the node certificates are signed using the special signing keys embedded in Apple operating systems and hardware. Apple protects those as the crown jewels they are. This signature verification prevents an attacker from pretending to be an official Apple node. Your device encrypts a request for the nodes specified by the load balancer, ensuring that even other PCC nodes can’t read your request.

How is my data protected in Apple Intelligence running in the cloud?

At this point in the process, your device has said, “I need PCC for a proofreading request,” and Apple’s relay service has replied, “Here is a list of nodes that can provide that.” Next, your device checks certificates and keys before encrypting the request and sending it to the nodes.

The load balancer then passes your request to the nodes. Remember, nodes run on special Apple servers built just for PCC. These servers use the same proven security mechanisms as your personal Apple devices, further hardened to protect against advanced attacks. How?

All the PCC hardware is built in a secure supply chain, and each server is intensely inspected before being provisioned for use. (These techniques are essential for avoiding back doors being embedded before the servers even reach Apple.) The overall process appears to include high-resolution imaging, testing, and supply chain tracking, and a third party monitors everything. Once a server passes these checks, it’s sealed, and a tamper switch is activated to detect any attempts at physical modification.

When a server boots, it uses Apple’s Secure Boot process, described in the Platform Security Guide. This approach leverages the Secure Enclave and multiple steps to ensure the loaded operating system is valid, code can’t be injected, and only approved applications can run. Like macOS, PCC servers use a Signed System Volume, meaning the operating system is cryptographically signed to prove it hasn’t been tampered with and runs from read-only storage.

All the software running on PCC servers is developed and signed by Apple, reducing the chance of problems caused by a malicious developer compromising an open source tool. Much of it is written in Swift, a memory-safe language that resists cracking by certain common exploits. And everything uses sandboxing and other standard Apple software security controls, just like your iPhone.

On boot, random encryption keys are generated for the data volume (the storage used for processing requests). Thus, your data is encrypted when stored on the server, and everything is protected using the Secure Enclave.

It gets better. After a node processes a request, Apple tosses the encryption keys and reboots the node. That node can no longer read any previously stored user data since it no longer has the encryption key! The entire system resets itself for the next request. Just to be safe, Apple even occasionally recycles the server’s memory in case something was still stored there.

To piece it together, after you send your request to Apple, it goes to highly secure Private Cloud Compute nodes. They process the request, keeping your data encrypted the entire time. Once the request is finished, they cryptographically wipe themselves, reboot, and are ready for the next request.

Is my data exposed to administrators, in log files, or to performance monitoring tools?

No. Apple doesn’t include any software that could allow this kind of monitoring (called privileged runtime access) into the stack. PCC nodes do not have command shells, debugging modes, or developer tools. Performance and logging tools are limited and designed to strip out any private data.

How will Apple prove that we can trust nodes… and its word?

Apple says it will make every production software build of Private Cloud Compute publicly available for researchers to evaluate. Devices will only send requests to nodes that can prove they are running one of these public builds. This is another unique part of the Apple Intelligence ecosystem.

Apple will achieve this by using a public transparency log, which uses cryptography to ensure that once something is written to the log, it can’t be changed—a good use of blockchain technology. This log will include measurements of the code (not currently specified) that can be used to validate that a binary blob of the operating system and its applications matches the logged version.

Plus, Apple will publish the binary images of the software stack running on PCC nodes. That’s confidence and a great way to ensure the system is truly secure—not just “secure” because it’s obscure.

As noted, our devices will only send requests to nodes running expected software images. Apple is a bit vague here, but I suspect the nodes will also publish their cryptographically signed measurements, which will need to match the measurements for the current version of software published in the transparency log.

Finally, Apple will also release a PCC Virtual Research Environment for security researchers to validate their claims by testing the behavior of a local, virtual version of a PCC node. It will also release some source code, including some plain-text code for sensitive components the company has never previously released.

But isn’t Apple sending requests to ChatGPT?

Apple Intelligence focuses on AI tasks that revolve around your devices and data. For more general requests that require what Apple calls world knowledge, Apple Intelligence will prompt the user to send the request—initially to ChatGPT and to other services in the future.

Each prompt requires user approval (which may become annoying), and Apple has stated that OpenAI (which runs ChatGPT) will not track IP addresses for anonymous requests. If you have a paid account with ChatGPT or another third-party AI service Apple supports in the future, any privacy would be handled by that service according to its privacy policy.

What about China and other countries where Apple is required to allow government access to data? What about the European Union?

Apple has not said whether it will release Apple Intelligence in countries like China. The documented Private Cloud Compute model does not satisfy Chinese laws.

Apple has already announced that it will not initially release Apple Intelligence in the EU due to the Digital Markets Act. Although Apple Intelligence will eventually be able to send requests to third-party services for world knowledge, these requests do not include the private data processed on-device or in PCC. It’s hard to see how Apple could maintain user privacy while allowing an external service the same deep access to on-device data, which the EU could require for DMA compliance.

Why did Apple put so much effort into building a private AI platform?

As much as many people prefer to dismiss artificial intelligence as the latest technology fad, it is highly likely to have a significant impact on our lives and society over time.

AI models continue to evolve at a breakneck pace. I’ve used generative AI to save myself weeks of work on coding projects, and I find it helpful as a writing assistant to organize my thoughts and perform lightweight research—which I validate before using, just like anything else I read on the Internet.

Today’s models work reasonably well for summarizing content in a paper, helping write and debug application code, creating images, and more. But they cannot act as a personal agent that could help me get through an average day. For that, the AI needs access to all my personal data: email, contacts, calendars, messages, photos, and more. ChatGPT can’t “send my wife my travel details for my flight next month”—and I wouldn’t give it the necessary information.

When Apple Intelligence ships, its features will mostly be a less-stupid Siri, small niceties like email summaries in message lists, relatively humdrum summaries, and parlor tricks like Image Playground and Image Wand. But within a few years, if things go well, Siri may grow to resemble the personal digital agents from science fiction—notably Apple’s Knowledge Navigator. Despite the astonishing performance of Apple silicon, some AI-driven tasks will always require the cloud, which motivated Apple’s work in designing, building, and scaling Private Cloud Compute. Apple wants us to trust its AI platforms with our most sensitive data and recognizes that trust must be earned. The theory is good—when Apple Intelligence features start becoming available, we’ll see how the reality compares.˜